Open-Sourcing Reranker v2

- Introducing Reranker v2, our state-of-the-art, cost-effective, instruction-following, multilingual family of rerankers

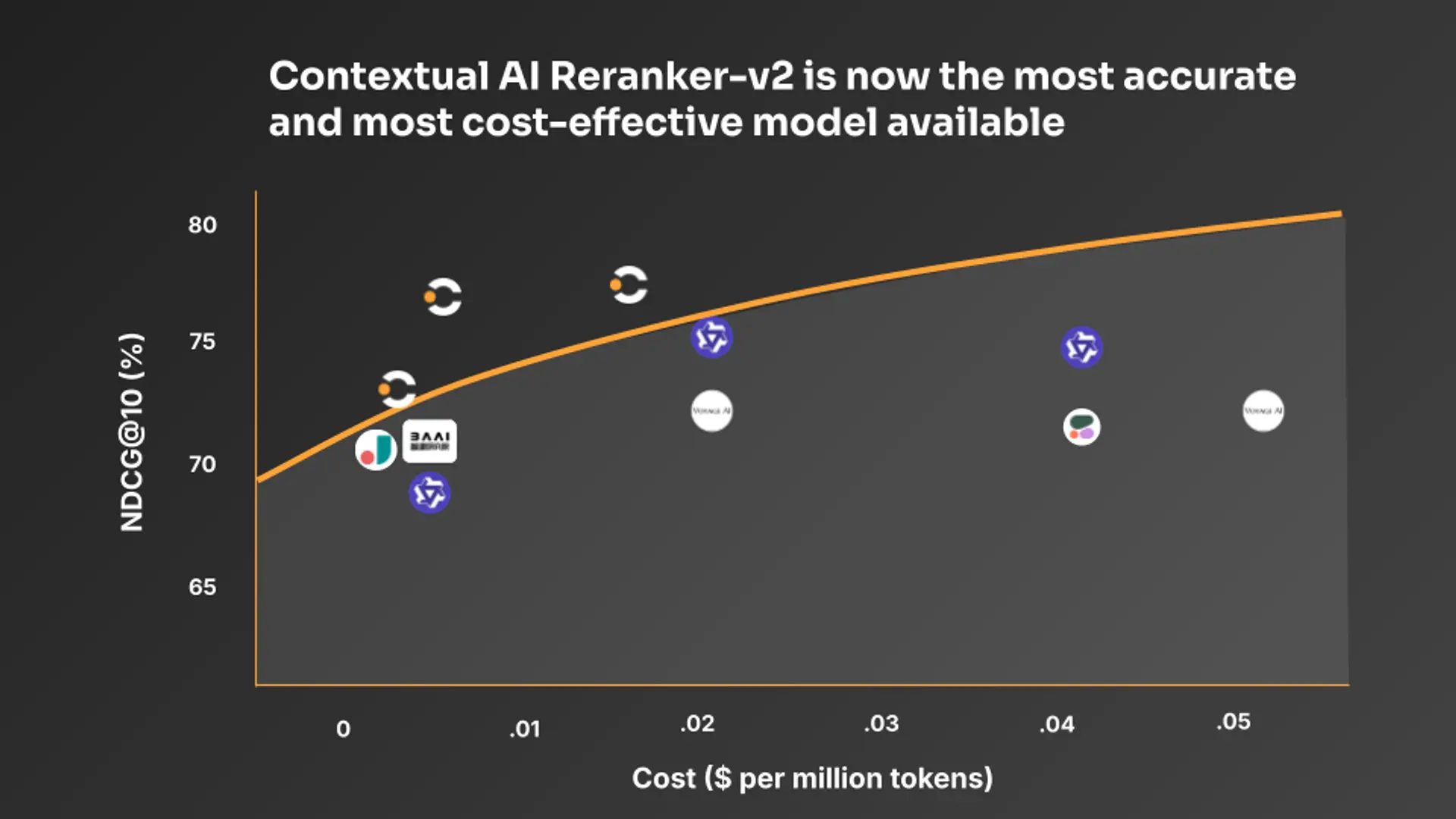

Earlier this year, we released the world’s first instruction-following reranker. Today, we’re open-sourcing our v2 family of instruction-following rerankers, which advances the state of the art and achieves best-in-class results on industry-standard benchmarks as well as in internal testing with real-world customers. Reranker v2 improves on our industry-leading performance while delivering reduced latency, lower costs, and increased throughput.

Reranker v2 is available in three sizes (1B, 2B, and 6B), each with a quantized version. Along with the rerankers themselves, we are also providing three instruction-following evaluation datasets, so users can reproduce our benchmark results. By releasing these datasets, we are also advancing instruction-following reranking evaluation, where high-quality benchmarks are currently limited. You can access fully-managed versions using our API here.

Our open-source SOTA models excel in 5 key areas:

- Instruction following (including the capability to rank more recent information higher)

- Question answering

- Multilinguality

- Product search / recommendation systems

- Real-world use cases

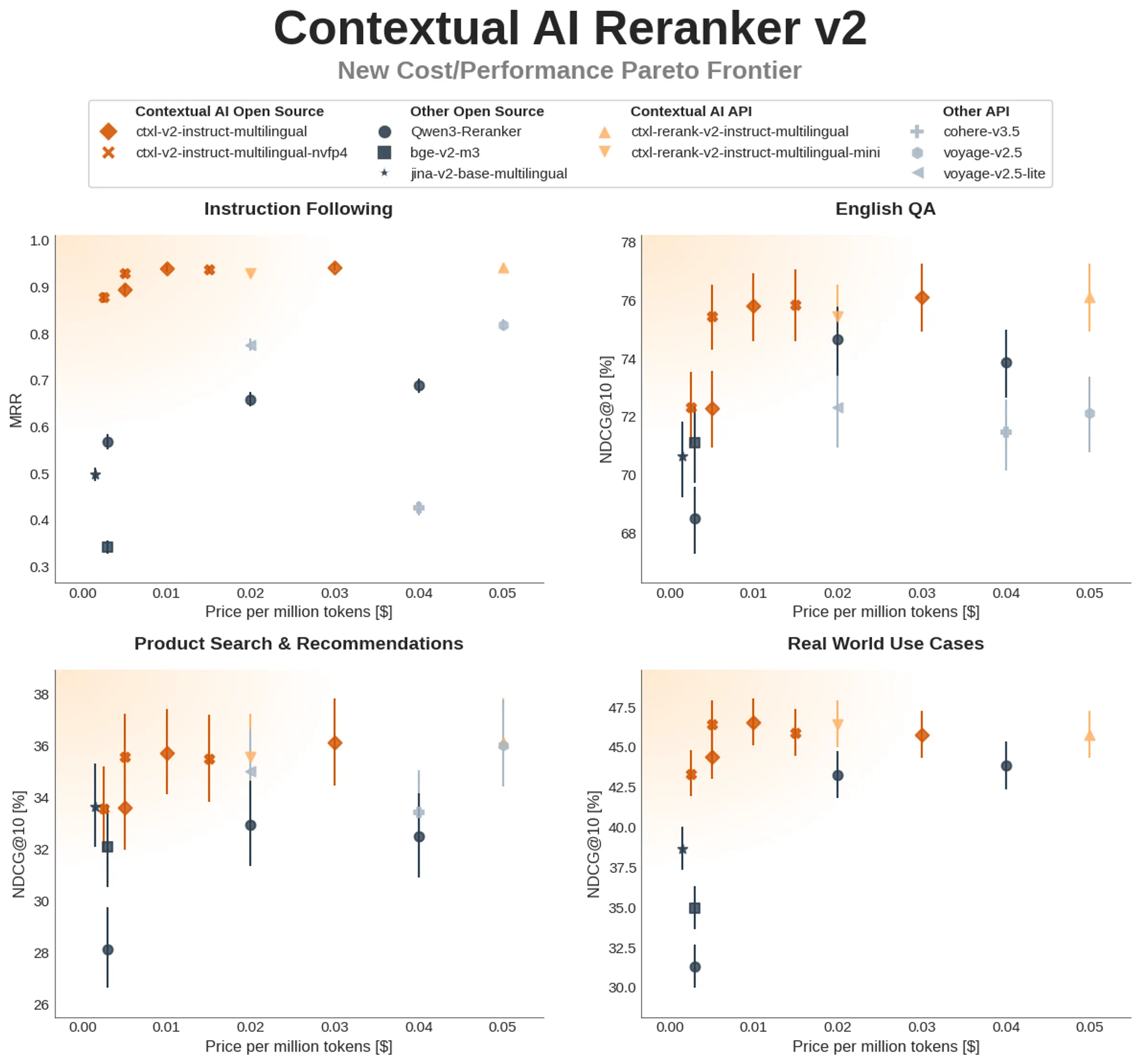

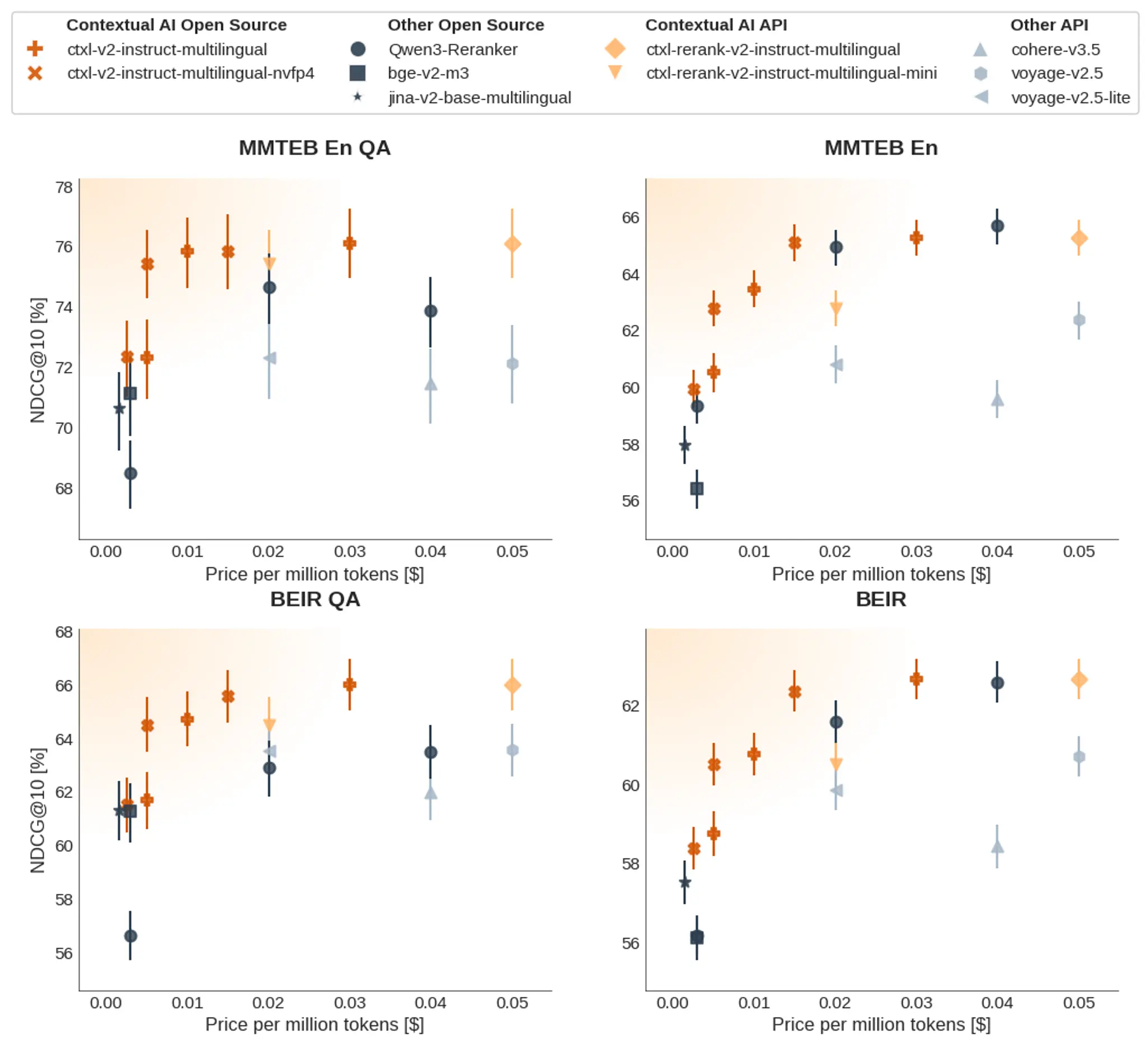

Figure 1. Performance vs price [1] (per million tokens) for rerankers across four benchmarks. Colors denote provider/source categories with Contextual AI models in warm hues and others in cool hues. Lower cost is to the left and higher performance is upward, as indicated by the orange gradient. The top-left panel uses a different performance metric and y-axis range from the others (MRR vs. NDCG@10), which explains the smaller error bars [2].
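Footnote [2] describes the error bars as 80% bootstrap confidence intervals for the weighted mean, with queries resampled within each dataset and dataset weights held fixed. A minimal sketch of one way to compute such an interval follows. It assumes a percentile bootstrap, which the post does not specify, and the function names, resample count, and seed are illustrative rather than taken from the evaluation code.

```python
import random
from statistics import fmean


def weighted_mean(scores_by_dataset, weights):
    """Weighted mean of the per-dataset mean scores."""
    total_weight = sum(weights[name] for name in scores_by_dataset)
    weighted_sum = sum(
        weights[name] * fmean(scores)
        for name, scores in scores_by_dataset.items()
    )
    return weighted_sum / total_weight


def stratified_bootstrap_ci(scores_by_dataset, weights, level=0.80,
                            n_resamples=2000, seed=0):
    """Percentile bootstrap interval for the weighted mean (assumed variant)."""
    rng = random.Random(seed)
    estimates = []
    for _ in range(n_resamples):
        # Resample per-query scores with replacement within each dataset;
        # the dataset weights themselves are never resampled.
        resampled = {
            name: rng.choices(scores, k=len(scores))
            for name, scores in scores_by_dataset.items()
        }
        estimates.append(weighted_mean(resampled, weights))
    estimates.sort()
    alpha = (1.0 - level) / 2.0
    lower = estimates[int(alpha * (n_resamples - 1))]
    upper = estimates[int((1.0 - alpha) * (n_resamples - 1))]
    return lower, upper


# Illustrative per-query scores for two made-up datasets.
scores = {"dataset_a": [0.2, 0.9, 0.6, 0.7], "dataset_b": [0.5, 0.4, 0.8]}
weights = {"dataset_a": 1.0, "dataset_b": 2.0}
low, high = stratified_bootstrap_ci(scores, weights)
print(f"80% CI for the weighted mean: [{low:.3f}, {high:.3f}]")
```

Because resampling happens inside each dataset, a small dataset with a large weight contributes wide error bars, which is one reason intervals can differ noticeably between panels.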

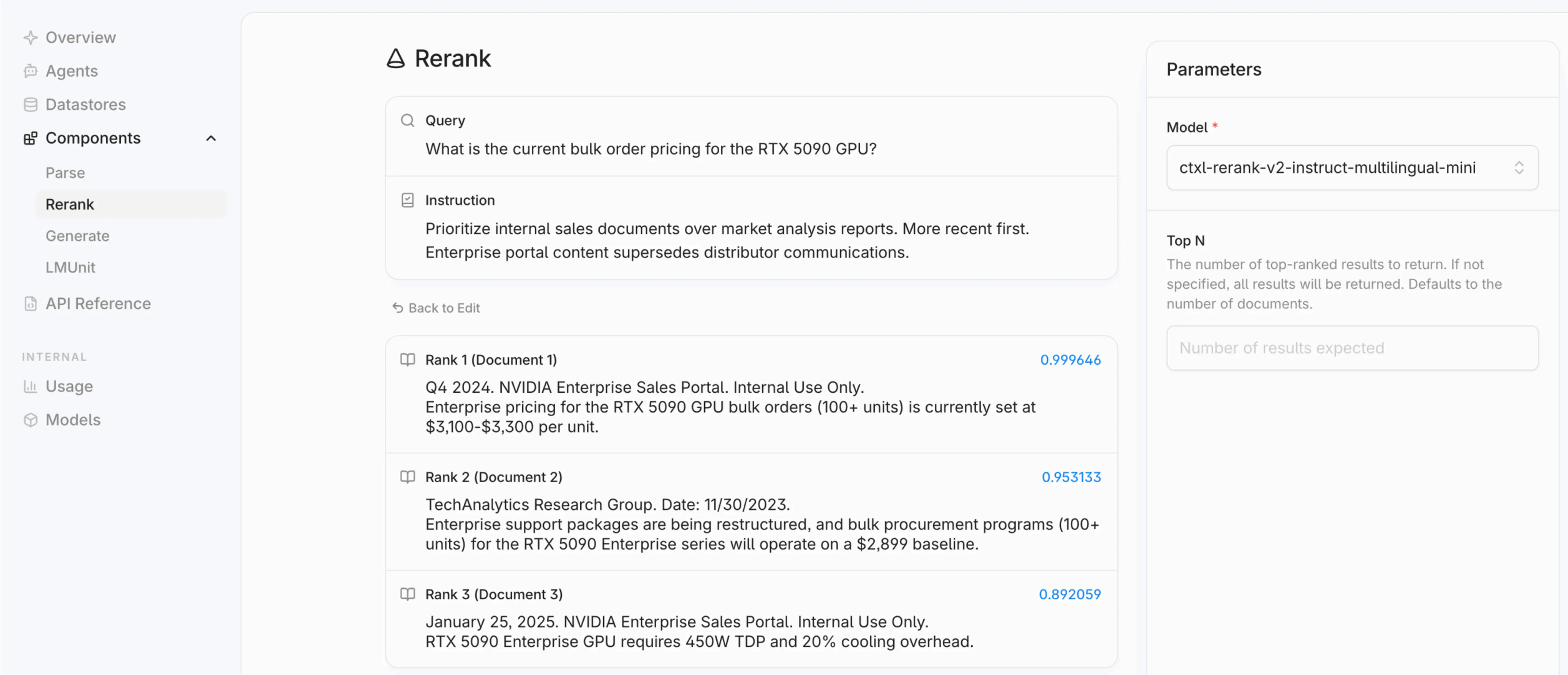

Instruction Following

Knowledge search systems often deal with conflicting information in their knowledge bases. Marketing materials can conflict with product materials, documents in Google Drive could conflict with those in Microsoft Office, Q2 notes conflict with Q1 notes, and so on. You can tell our reranker how to resolve these conflicts with instructions like

- “Prioritize internal sales documents over market analysis reports. More recent documents should be weighted higher. Enterprise portal content supersedes distributor communications.” or

- “Emphasize forecasts from top-tier investment banks. Recent analysis should take precedence. Disregard aggregator sites and favor detailed research notes over news summaries.”

Check out our previous blogpost for detailed examples with visuals.
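As a rough illustration, the first instruction above could be packaged into a rerank request body as sketched below. The field names and model identifier come from the example usage later in this post; the helper name `build_rerank_payload`, the query, and the documents are hypothetical and exist only for illustration.

```python
def build_rerank_payload(query, instruction, documents,
                         model="ctxl-rerank-v2-instruct-multilingual"):
    """Assemble a request body using the field names shown in this post."""
    if not documents:
        raise ValueError("documents must contain at least one candidate")
    return {
        "query": query,
        "instruction": instruction,
        "documents": list(documents),
        "model": model,
    }


# Hypothetical query and candidate documents.
payload = build_rerank_payload(
    query="What is the enterprise pricing for the current quarter?",
    instruction=(
        "Prioritize internal sales documents over market analysis reports. "
        "More recent documents should be weighted higher. "
        "Enterprise portal content supersedes distributor communications."
    ),
    documents=[
        "Market analysis report, Q1: estimated enterprise pricing ...",
        "Internal sales document, Q2: enterprise pricing ...",
    ],
)
print(sorted(payload))
```

The instruction travels as a separate field from the query, so the same query can be reranked under different conflict-resolution policies without rewriting it.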

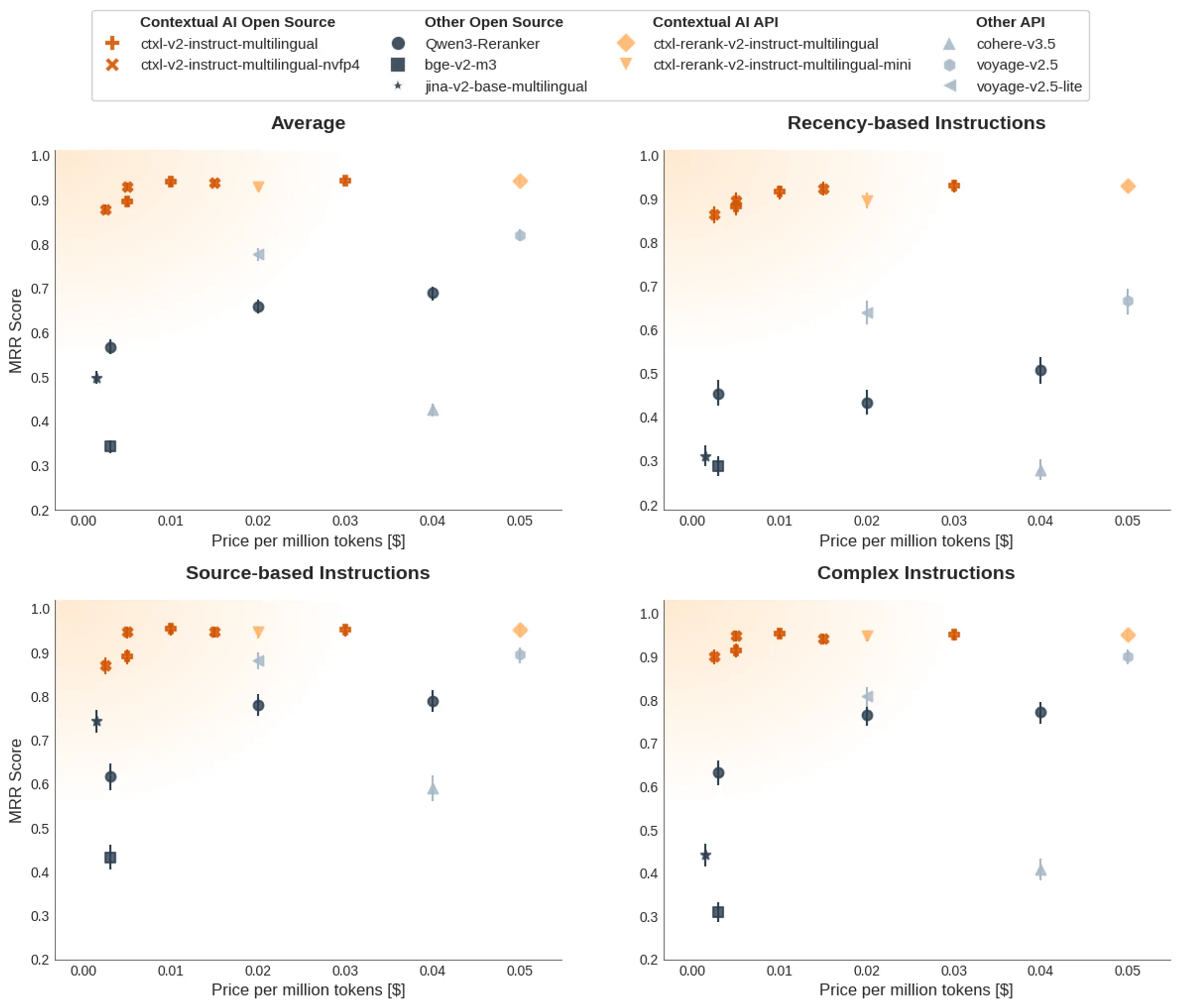

To test how our reranker’s instruction-following capabilities compare with other rerankers, we created three datasets that we are also open-sourcing on HuggingFace. All three of our reranker sizes outperform all other rerankers on recency-based, source-based, and complex multi-source instructions. For example, our quantized 2B reranker delivers a ~35% increase in recency awareness over the second-best reranker, at a tenth of the price.

Figure 2. Performance measured by the MRR metric vs price [1, 2] (per million tokens) for rerankers across three instruction-following benchmarks and their average (top left).
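For readers unfamiliar with the metric, mean reciprocal rank (MRR) averages, over queries, the reciprocal of the rank at which the first relevant document appears. The sketch below is a standard textbook implementation, not the evaluation code behind the figures; details such as rank cutoffs are not stated in this post and are left out here.

```python
def reciprocal_rank(ranked_doc_ids, relevant_ids):
    """Return 1/rank of the first relevant document, or 0.0 if none is found."""
    for rank, doc_id in enumerate(ranked_doc_ids, start=1):
        if doc_id in relevant_ids:
            return 1.0 / rank
    return 0.0


def mean_reciprocal_rank(rankings, relevant_sets):
    """Average the reciprocal rank over all queries."""
    if len(rankings) != len(relevant_sets):
        raise ValueError("need one relevant set per ranking")
    if not rankings:
        return 0.0
    total = sum(
        reciprocal_rank(ranking, relevant)
        for ranking, relevant in zip(rankings, relevant_sets)
    )
    return total / len(rankings)


# Two toy queries: the relevant document is ranked 2nd, then 1st.
rankings = [["a", "b", "c"], ["x", "y", "z"]]
relevant_sets = [{"b"}, {"x"}]
print(mean_reciprocal_rank(rankings, relevant_sets))  # (1/2 + 1/1) / 2 = 0.75
```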

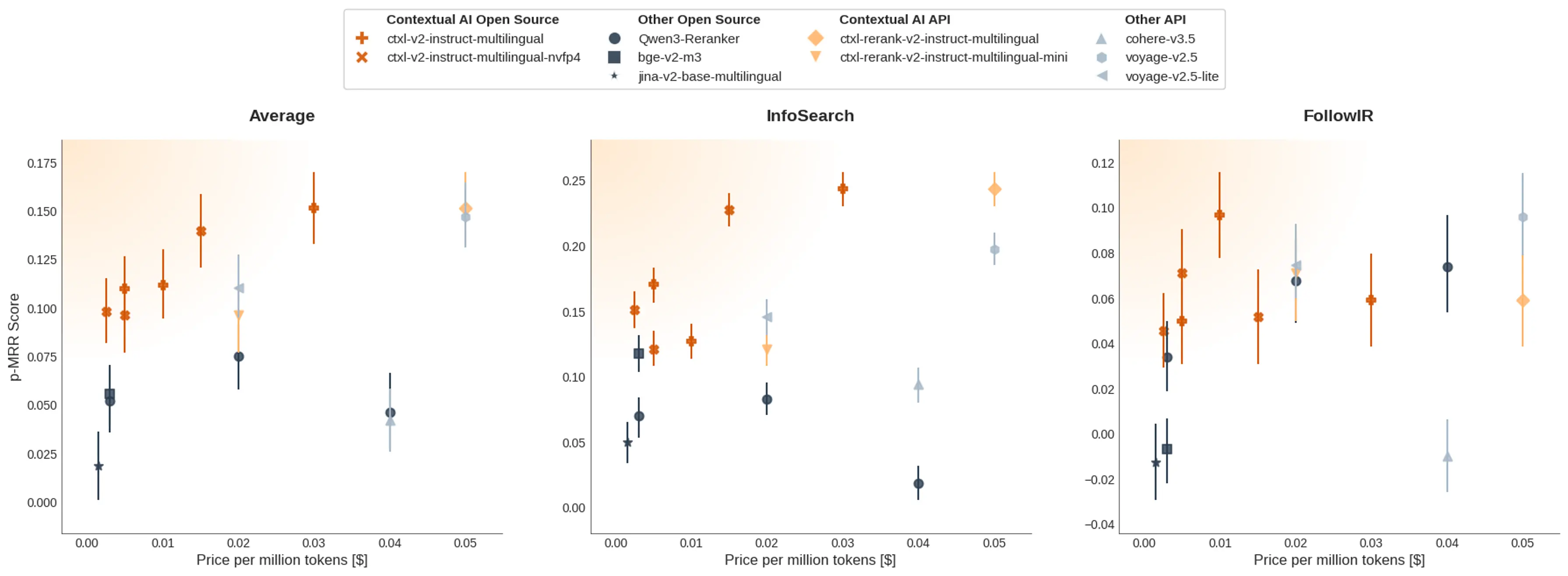

We also evaluate our reranker against others on the academic instruction-following benchmarks InfoSearch and FollowIR. The results here again show our reranker’s superior performance and cost effectiveness compared to others.

Figure 3. Performance measured by the p-MRR metric vs price [1, 2] (per million tokens) for rerankers across two instruction-following benchmarks and their average (left).

Question Answering

Since question answering (QA) constitutes the primary use case for rerankers in production—particularly in RAG agents where retrieval quality determines response accuracy—accurate QA evaluation is critical. While we evaluate on the standard public benchmarks MMTEB English v2 (the retrieval subset) and BEIR, these include many non-QA tasks such as argument-counterargument matching (ArguAna) and duplicate-question detection (Quora) that don’t reflect typical deployment scenarios. To address this gap, we curate a QA-focused suite from within these benchmarks, specifically selecting datasets where queries are questions and documents are answer-containing passages [3, 4].

On the QA-focused suites, Contextual AI Reranker v2 outperforms all other rerankers while offering superior throughput, latency, and cost. On the full MMTEB English v2 and BEIR suites, it stays on the cost/performance Pareto frontier: it matches the performance of the largest Qwen3-Reranker with superior throughput, latency, and cost, and it outperforms all other models.

Figure 4. Performance measured by the NDCG@10 metric vs price [1, 2] (per million tokens) for different rerankers. Each left panel shows the QA-focused suite drawn from the benchmark in the corresponding right panel.
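NDCG@10 rewards rankings that place highly relevant documents near the top of the first ten results. The sketch below uses the common linear-gain formulation with a log2 position discount; this is an assumption, since the post does not say which gain variant the benchmark harness applies, and the function names are illustrative.

```python
import math


def dcg_at_k(relevances, k):
    """Discounted cumulative gain over the first k positions (linear gain)."""
    return sum(
        rel / math.log2(position + 2)  # position 0 gets discount log2(2) = 1
        for position, rel in enumerate(relevances[:k])
    )


def ndcg_at_k(ranked_relevances, k=10, all_relevances=None):
    """DCG of the ranking divided by the DCG of the ideal ordering.

    `all_relevances` optionally lists the labels of every judged document
    for the query; by default the ideal ordering is built from the ranked
    list itself.
    """
    pool = ranked_relevances if all_relevances is None else all_relevances
    ideal_dcg = dcg_at_k(sorted(pool, reverse=True), k)
    if ideal_dcg == 0:
        return 0.0
    return dcg_at_k(ranked_relevances, k) / ideal_dcg


# Toy example: graded relevance labels in the order the reranker returned them.
print(ndcg_at_k([3, 2, 0, 1]))
```

A ranking that is already sorted by relevance scores exactly 1.0, and swapping a relevant document below an irrelevant one lowers the score in proportion to how far down it falls.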

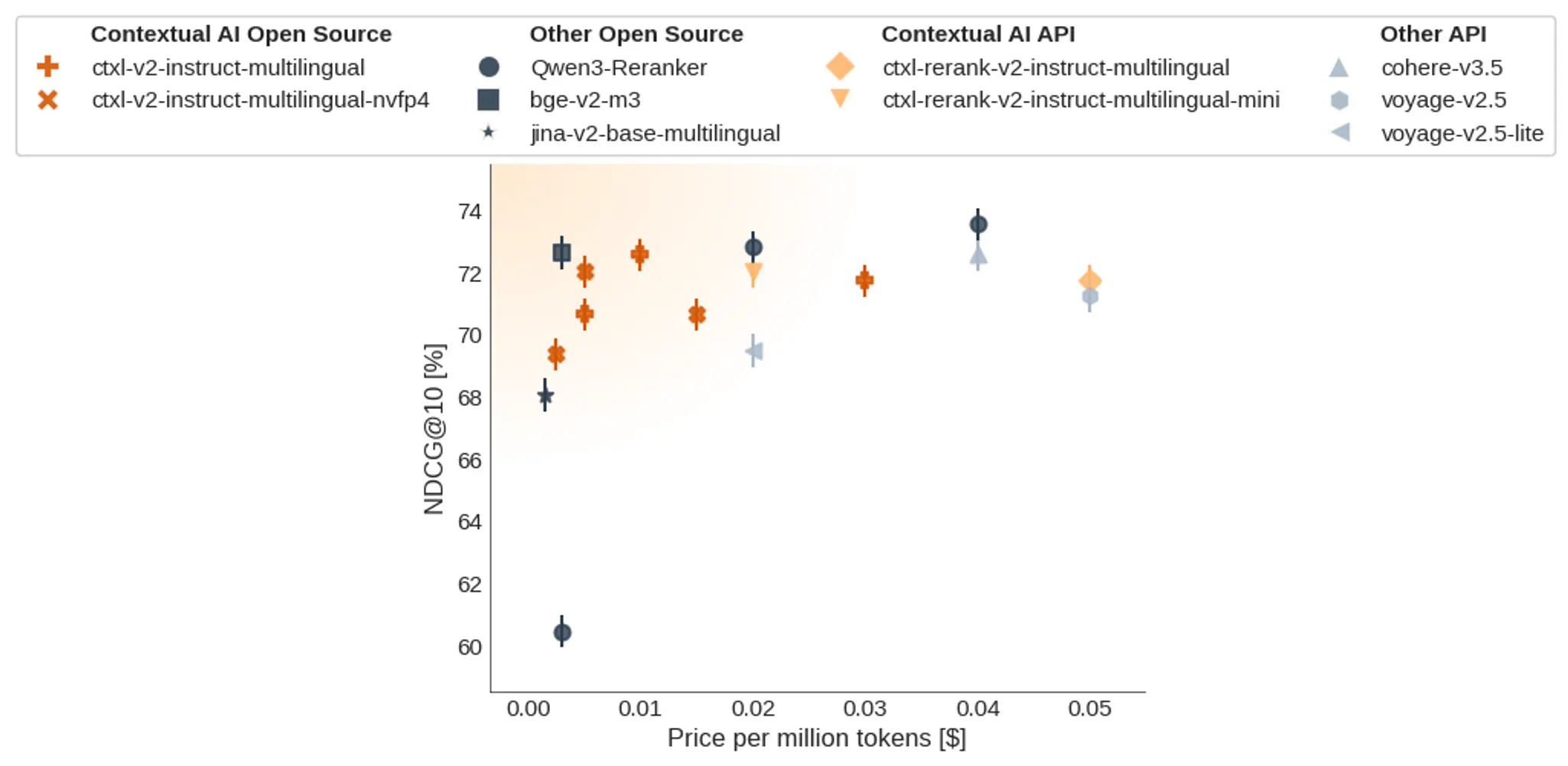

Multilinguality

Multilingual reranking capability is increasingly critical as retrieval systems must serve globally diverse user bases and handle cross-lingual information needs in real-world applications. We test our reranker on the industry-standard multilingual retrieval benchmark, MIRACL, specifically on the hard-negatives version developed for MMTEB. We find that our reranker matches rerankers like Qwen3-Reranker on this benchmark, highlighting our reranker’s multilingual capability.

Figure 5. Performance measured by the NDCG@10 metric vs price [1, 2] (per million tokens) for different rerankers on the MIRACL hard-negatives benchmark developed for MMTEB for testing multilingual capabilities.

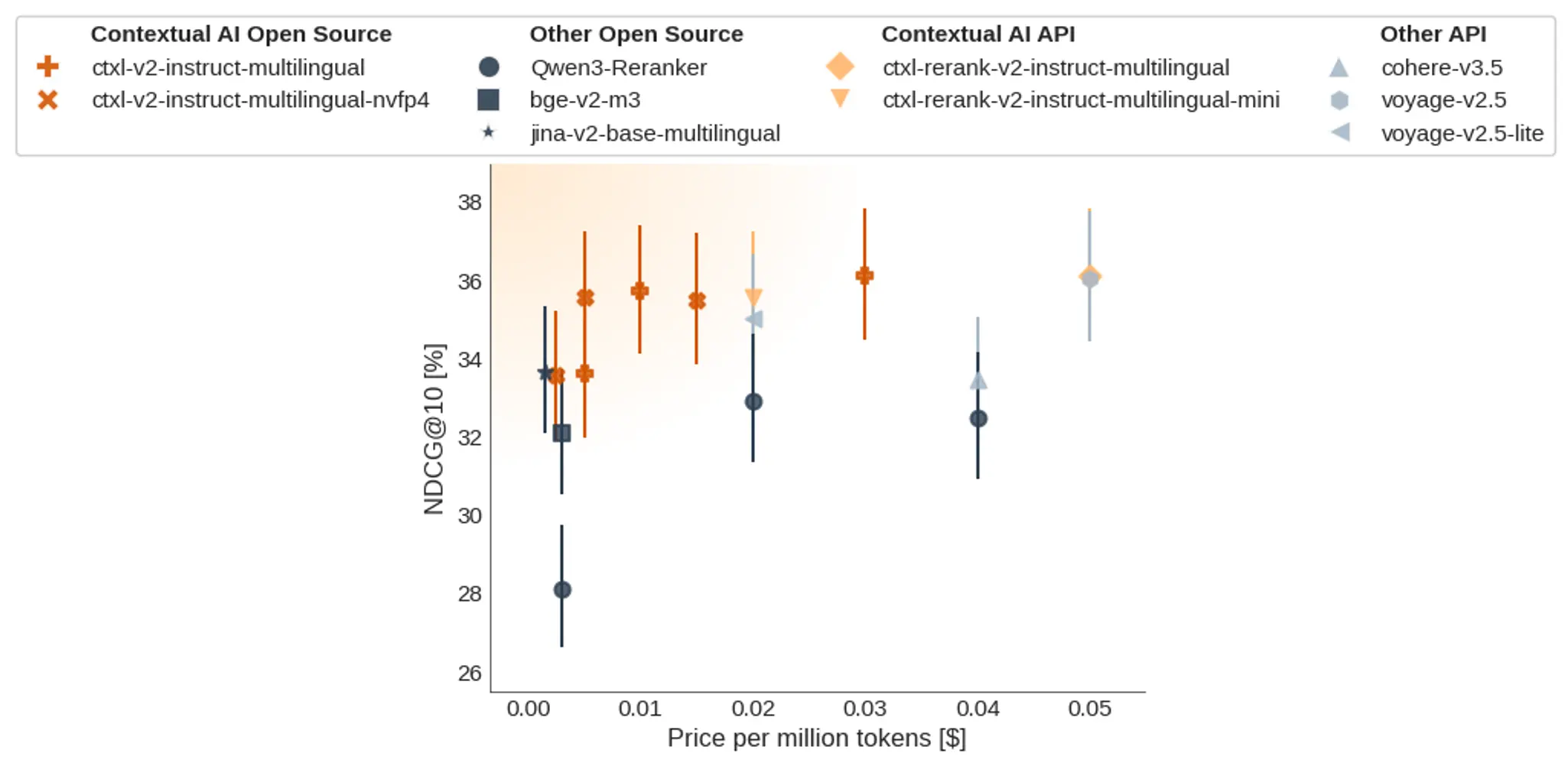

Product Search & Recommendation Systems

Another key application of rerankers is in recommendation systems. When users search an e-commerce site, for example, rerankers help match their queries to the most relevant products in the database. The ideal public benchmark for this is the TREC 2025 Product Search and Recommendations Track. We evaluate the rerankers on it and find that our models outperform all other models with superior throughput, latency, and cost.

Figure 6. Performance measured by the NDCG@10 metric vs price [1, 2] (per million tokens) for different rerankers on the TREC 2025 Product Search and Recommendations Track.

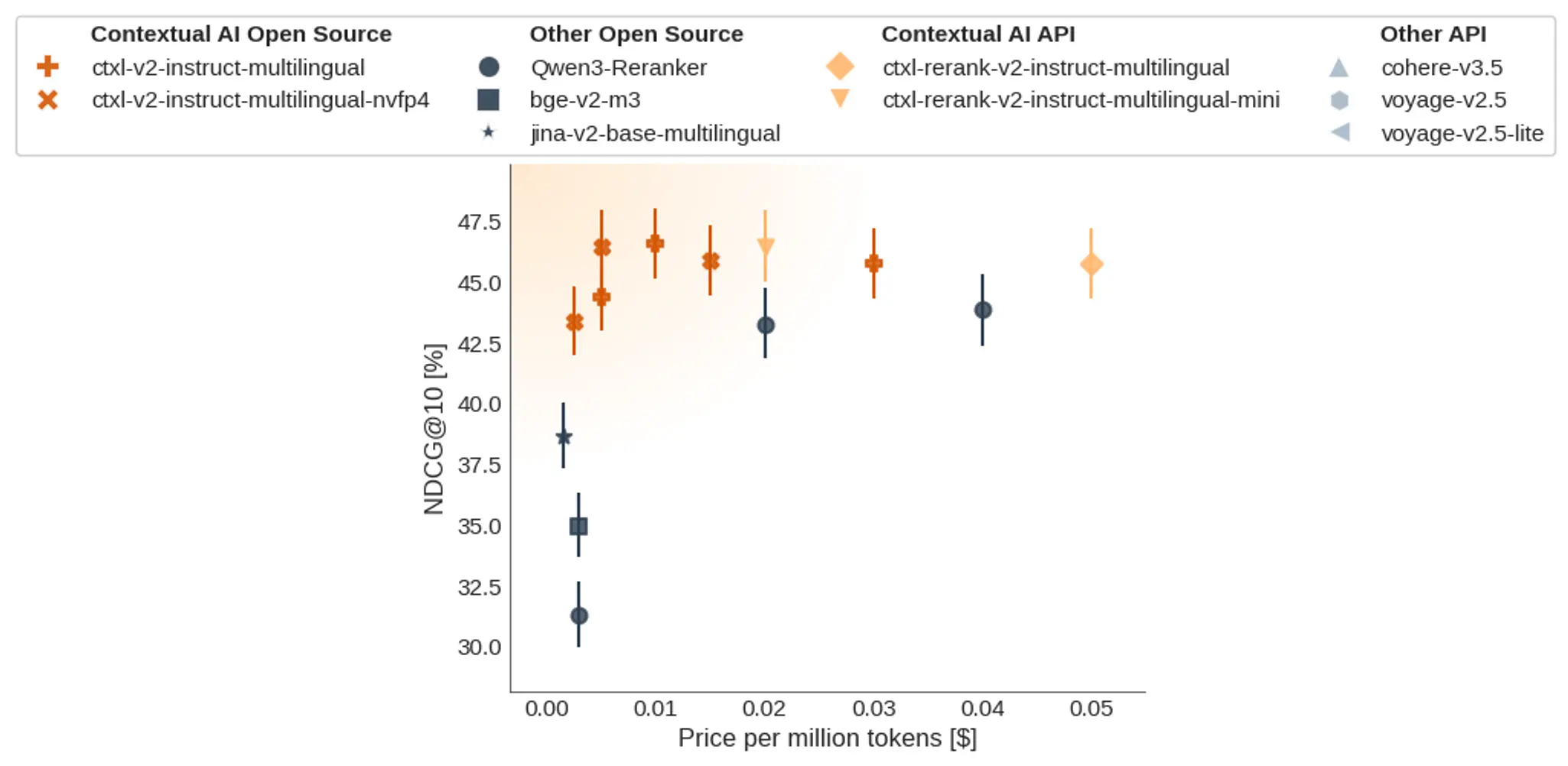

Real World Use Cases

Finally, on private retrieval benchmarks from real-world customers across enterprise domains like finance, technical documentation, engineering, and code, Contextual AI Reranker v2 outperforms all other open-source rerankers. We do not evaluate API models on sensitive customer data.

Figure 7. Performance measured by the NDCG@10 metric vs price [1, 2] (per million tokens) for different rerankers on real-world customers’ benchmarks across domains like finance, technical documentation, engineering, and code.

Conclusions

This blogpost highlights that

- We are open-sourcing the highest performing, most efficient reranker in the world.

- Our multilingual reranker is state-of-the-art on instruction following, question answering, product search and recommendations, and real-world use cases.

- Our reranker is the only family of models capable of ranking more recent information higher than stale information.

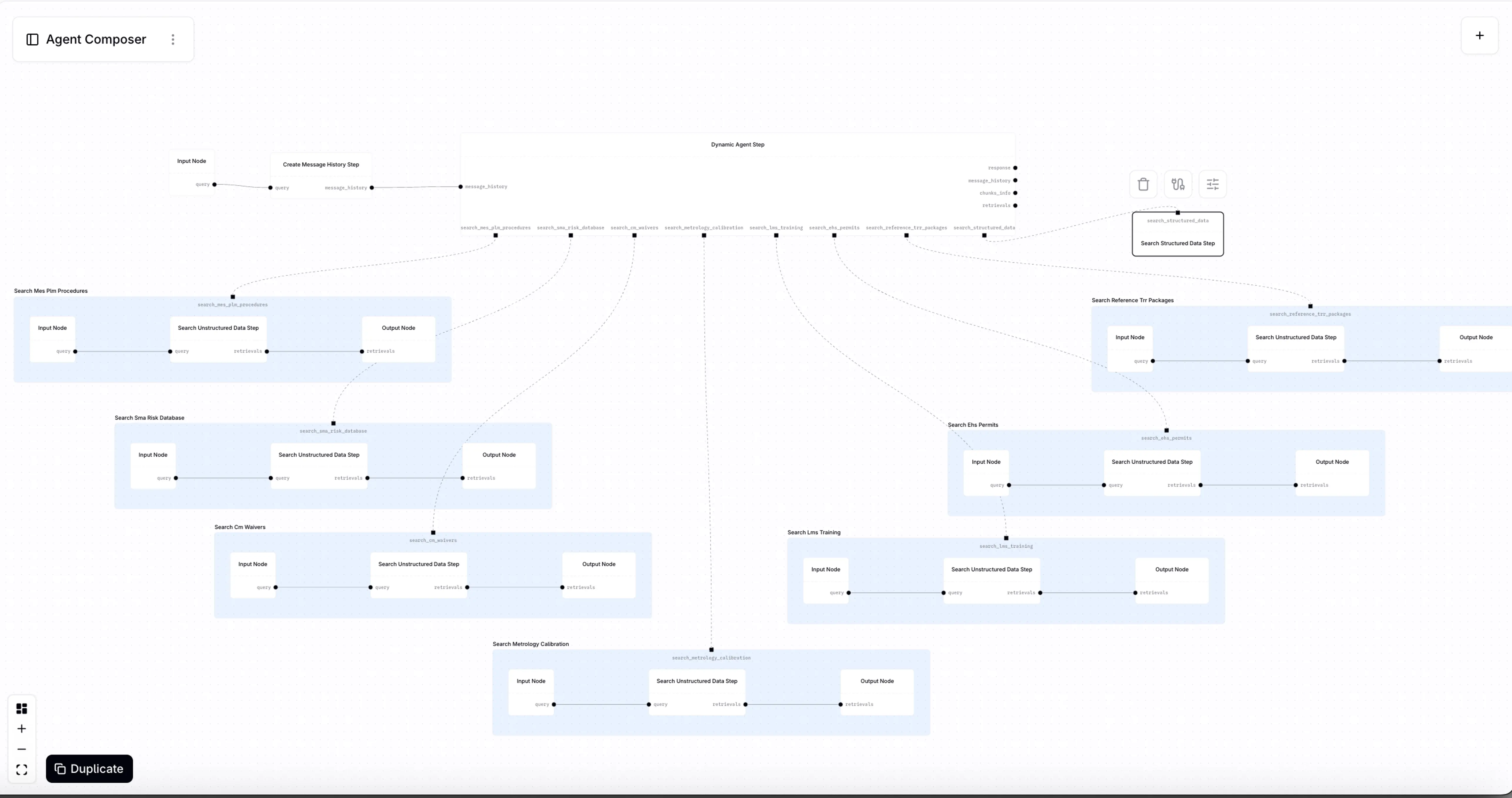

Knowledge search systems have evolved from static RAG systems to dynamic multi-step retrieval determined by an agent equipped with knowledge search tools. Providing an agent with a tool that takes a query and an instruction about the type of information to retrieve as input is strictly more powerful than a tool that only takes a query as an input. It enables an unprecedented level of control that improves knowledge search performance significantly.
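The idea of a search tool that accepts both a query and an instruction can be sketched as follows. Everything here is hypothetical: `make_search_tool`, the toy retriever, and the toy reranker are stand-ins that only illustrate the tool's shape, and a real deployment would call an actual retriever and reranker instead.

```python
from typing import Callable, List

Retriever = Callable[[str], List[str]]
Reranker = Callable[[str, str, List[str]], List[float]]


def make_search_tool(retrieve: Retriever, rerank: Reranker, top_k: int = 3):
    """Build a tool that an agent can call with a query and an instruction."""

    def knowledge_search(query: str, instruction: str = "") -> List[str]:
        candidates = retrieve(query)
        scores = rerank(query, instruction, candidates)
        # Stable sort: ties keep the retriever's original order.
        ranked = sorted(zip(scores, candidates), key=lambda pair: pair[0],
                        reverse=True)
        return [doc for _, doc in ranked[:top_k]]

    return knowledge_search


def toy_retrieve(query: str) -> List[str]:
    # Stand-in retriever returning a fixed candidate list.
    return ["2023 pricing memo", "2025 pricing memo", "2024 pricing memo"]


def toy_rerank(query: str, instruction: str, docs: List[str]) -> List[float]:
    # Stand-in reranker: prefers later years only when asked for recency.
    if "recent" in instruction.lower():
        return [float(doc.split()[0]) for doc in docs]
    return [1.0 for _ in docs]


search = make_search_tool(toy_retrieve, toy_rerank, top_k=2)
print(search("pricing"))
print(search("pricing", "More recent documents should be weighted higher."))
```

With an empty instruction the tool behaves like a query-only tool, so the instruction parameter adds control without removing anything the simpler interface could do.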

Getting started

Open source

- Our reranker and its quantized versions are on HuggingFace.

Google Model Garden

API endpoint

- Sign up for free here. The first $50 (1 billion tokens) are free when you sign up with a business email.

- Documentation page

- Pricing information

Example usage:

    import requests

    # Assumes `contextual_api_key`, `query`, `instruction`, and `documents`
    # are already defined in your code.
    headers = {
        "accept": "application/json",
        "content-type": "application/json",
        "authorization": f"Bearer {contextual_api_key}",
    }

    payload = {
        "query": query,
        "instruction": instruction,
        "documents": documents,
        "model": "ctxl-rerank-v2-instruct-multilingual",
        # or "ctxl-rerank-v2-instruct-multilingual-mini"
    }

    rerank_response = requests.post(
        "https://api.contextual.ai/v1/rerank",
        json=payload,
        headers=headers,
    )
    rerank_response.raise_for_status()  # raise on HTTP error status codes

Python SDK

Example usage:

    # pip install contextual-client
    from contextual import ContextualAI

    # Assumes `contextual_api_key`, `query`, `instruction`, and `documents`
    # are already defined in your code.
    contextual_client = ContextualAI(
        api_key=contextual_api_key,
        base_url="https://api.contextual.ai/v1",
    )

    rerank_response = contextual_client.rerank.create(
        query=query,
        instruction=instruction,
        documents=documents,
        model="ctxl-rerank-v2-instruct-multilingual",
        # or "ctxl-rerank-v2-instruct-multilingual-mini"
    )

Contextual AI Playground

Footnotes

- [1] The prices per million tokens for the open-source models, including ours, are estimated based on the inference prices for models of the same sizes on openrouter.ai. For models with no equivalent sizes on openrouter.ai, we linearly interpolate/extrapolate to estimate the price. This choice of x-axis allows us to place our models on the same x-axis as API models with unknown sizes and infrastructure. Since Cohere’s pricing is per search, we assume that each search request includes 100 documents and that the sum of the number of tokens in the query and the number of tokens in each document is 500. Finally, we estimate that the price for NVFP4 models is half the price for BF16 models based on our throughput and latency tests.

- [2] Error bars show 80% bootstrap confidence intervals for the weighted mean, using a stratified bootstrap that resamples queries within each dataset while holding dataset weights fixed.

- [3] The datasets used in the QA-focused suite of MMTEB English are: FiQA2018, HotpotQAHardNegatives, TRECCOVID, and Touche2020Retrieval.v3.

- [4] The datasets used in the QA-focused suite of BEIR are: FiQA2018, HotpotQA, MSMARCO, NQ, TRECCOVID, and Touche2020.
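The price normalization described in the first footnote can be sketched as below. This reflects one reading of the footnote: each of the 100 documents in a search is paired with the query, and each pair totals 500 tokens, giving 50,000 tokens per search. All prices in the example are made-up placeholders, not actual Cohere or openrouter.ai prices, and the function names are illustrative.

```python
def per_search_to_per_million_tokens(price_per_search, docs_per_search=100,
                                     tokens_per_pair=500):
    """Convert a per-search price into a price per million tokens."""
    tokens_per_search = docs_per_search * tokens_per_pair  # 50,000 by default
    return price_per_search * 1_000_000 / tokens_per_search


def estimate_price_by_size(size_b, known_points):
    """Linearly interpolate or extrapolate a price from (size, price) points."""
    points = sorted(known_points)
    if len(points) < 2:
        raise ValueError("need at least two known (size, price) points")
    # Use the first segment whose right end covers the target size;
    # if none does, the loop ends on the last segment (extrapolation).
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        if size_b <= x1:
            break
    slope = (y1 - y0) / (x1 - x0)
    return y0 + slope * (size_b - x0)


def nvfp4_price(bf16_price):
    """Footnote assumption: NVFP4 costs half as much as BF16."""
    return bf16_price / 2


# Placeholder numbers only, chosen for easy arithmetic.
hypothetical_known = [(1.0, 0.01), (4.0, 0.04)]  # (billions of params, $/M tokens)
print(per_search_to_per_million_tokens(0.002))
print(estimate_price_by_size(2.0, hypothetical_known))
print(nvfp4_price(estimate_price_by_size(6.0, hypothetical_known)))
```

Under these assumptions a per-search price is multiplied by 20 to obtain a per-million-token price, which is what lets per-search and per-token providers share one x-axis.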
