An Agentic Alternative to GraphRAG

We are introducing a new Metadata Search Tool for agents, designed to solve the reference traversal problem in RAG pipelines.

In complex domains like law or compliance, retrieval is rarely a linear path to a single document. Often, the "Main Chunk" matching the search terms is just the starting point, a node that points elsewhere. For example, an agent retrieves a chunk that says, "Refer to Section 4.2.1 for policy details." To construct a complete answer, the agent must successfully traverse this reference. A standard semantic search often fails to retrieve "Section 4.2.1" directly because that linked chunk lacks the specific context of the user's query, even though access to it is critical for the agent's reasoning.

Our new Metadata Search Tool gives agents the flexibility to traverse these references dynamically, unlocking the reasoning capabilities typically associated with GraphRAG without the architectural complexity.

GraphRAG Limitations

Processing documents heavily at the indexing stage allows systems to capture latent relationships that might otherwise be lost in a flat vector index, creating a structured map for retrieval. Graph retrieval-augmented generation (GraphRAG) is a popular architectural pattern for this type of pre-computed multi-hop reasoning. It works by extracting nodes and edges to build a static knowledge graph. However, traditional GraphRAG relies on rigid, heuristic-based pipelines that introduce significant friction:

- Heuristic Overload: Building a graph requires strict rules for edge construction, node deduplication, and graph traversal.

- Brittleness to Updates: GraphRAG indices are fragile. Because they often rely on global community detection and hierarchical summarization, updating a single document isn't isolated. It can trigger a ripple effect requiring the recomputation of significant portions of the graph and regeneration of community summaries, making real-time data synchronization prohibitively expensive.

- Diminishing Returns: In our internal ablations, we found that these complex heuristics (like community summarization) offered diminishing returns relative to the computational cost and latency they introduced.

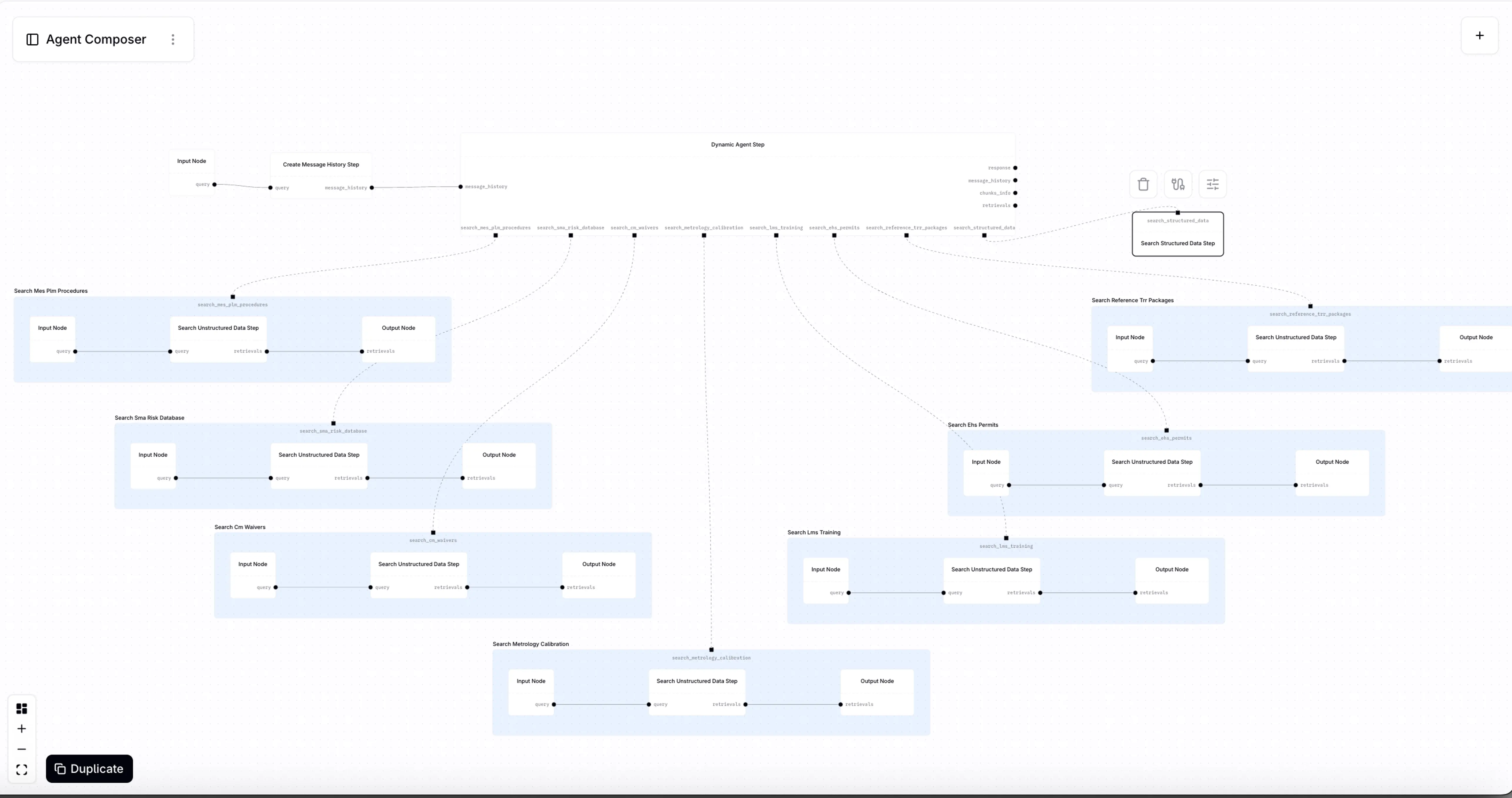

System Design

We chose a leaner, agentic approach. Instead of building a static graph, we extract structured metadata (aliases) at indexing time, such as section hierarchies, claim lists, or citation keys, and index them alongside the text. The "graph" traversal isn't hard-coded; the agent performs it by deciding which tool to call and what query to pass to that tool. This keeps the system adaptable: adding new documents or changing your metadata schema only touches the indices, with no graph to rebuild. The architecture relies on giving the agent options, specifically the choice between searching the raw text and searching specific metadata indices.

Indexing Workflow

When processing data, users can trigger the creation of an additional metadata index for the same datastore. While the main index stores embeddings of the original chunk content, the secondary index stores "aliases"—alternative ways of referring to that chunk.

- Example: A chunk containing a corporate policy might be indexed under its raw text, but also under a separate index for "Section 4.2.1".

- Configurability: The content of the additional index is defined via a prompt, allowing users to auto-generate nodes relevant to their data (e.g., section hierarchies, specific questions the chunk answers).
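As a rough illustration of the two indices described above, here is a minimal sketch. It is not the product's implementation: `Chunk`, `extract_aliases`, and `build_indices` are hypothetical names, the chunk texts are made up, and plain dictionaries stand in for the embedding-backed indices. In the real workflow the aliases would come from the user-defined prompt rather than being supplied by hand.

```python
from dataclasses import dataclass, field


@dataclass
class Chunk:
    chunk_id: str
    text: str
    # Aliases are supplied by hand here; in practice a prompt would generate them.
    aliases: list = field(default_factory=list)


def extract_aliases(chunk):
    """Hypothetical stand-in for the prompt-driven alias extraction step."""
    return chunk.aliases


def build_indices(chunks):
    """Build a content index and a secondary alias (metadata) index."""
    content_index = {}   # chunk_id -> raw text (embeddings in a real system)
    metadata_index = {}  # lowercased alias -> list of chunk_ids
    for chunk in chunks:
        content_index[chunk.chunk_id] = chunk.text
        for alias in extract_aliases(chunk):
            metadata_index.setdefault(alias.lower(), []).append(chunk.chunk_id)
    return content_index, metadata_index


# Made-up example data.
chunks = [
    Chunk("c1", "Remote work requests: refer to Section 4.2.1 for policy details."),
    Chunk("c2", "Employees may work from home up to three days per week.",
          ["Section 4.2.1"]),
]
content_index, metadata_index = build_indices(chunks)
print(metadata_index)
```

Note that the second chunk never mentions "Section 4.2.1" in its own text; it is reachable under that name only through the alias index.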

Figure 1: On the left, metadata (aliases) is extracted from chunks to create a secondary index. On the right, the agent dynamically selects which index to query to resolve references. At the bottom, an example of what each index contains and how chunks are linked is shown.

Query Workflow

The agent acts as the traversal engine. For every step in its workflow, it decides:

- Which datastore index to query (Content vs. Metadata).

- What query string to use.

In the reference traversal example, the agent retrieves the "Main Chunk" via semantic search, identifies the reference to "Section X.Y," and then deliberately uses the Metadata Search Tool to look up "Section X.Y" in the specific aliases index.
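The traversal can be sketched as a small loop. This is a toy under stated assumptions: a regular expression and word overlap stand in for the LLM agent and for semantic search, and `content_search`, `metadata_search`, and `answer_context` are hypothetical names, not the tool's actual API. The data is made up.

```python
import re

# Made-up indices: raw chunk text, and aliases pointing at chunk ids.
CONTENT_INDEX = {
    "c1": "Remote work requests: refer to Section 4.2.1 for policy details.",
    "c2": "Employees may work from home up to three days per week.",
}
METADATA_INDEX = {"section 4.2.1": ["c2"]}

# Rule-based stand-in for the agent spotting a reference in retrieved text.
SECTION_REF = re.compile(r"Section \d+(?:\.\d+)*")


def content_search(query):
    """Toy stand-in for semantic search: pick the chunk sharing most words."""
    terms = set(query.lower().split())

    def overlap(chunk_id):
        return len(terms & set(CONTENT_INDEX[chunk_id].lower().split()))

    best = max(CONTENT_INDEX, key=overlap)
    return [best] if overlap(best) > 0 else []


def metadata_search(alias):
    """Look an alias up in the secondary index."""
    return METADATA_INDEX.get(alias.lower(), [])


def answer_context(query, max_turns=5):
    """Gather chunk ids, following section references within a turn budget."""
    collected = content_search(query)  # turn 1: content search
    pending = list(collected)
    turns = 1
    while pending and turns < max_turns:
        text = CONTENT_INDEX[pending.pop(0)]
        for ref in SECTION_REF.findall(text):
            turns += 1  # each metadata lookup counts as one tool call
            for chunk_id in metadata_search(ref):
                if chunk_id not in collected:
                    collected.append(chunk_id)
                    pending.append(chunk_id)
    return collected


print(answer_context("remote work requests"))
```

The query shares almost no vocabulary with the second chunk, so the content search alone surfaces only the first one; the second is reached through the alias lookup.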

Use Cases: Explicit and Implicit Traversal

This method generalizes across two distinct types of reference patterns:

- Explicit References: Common in legal corpora and scholarly publications. Documents explicitly cite regulations or external papers (e.g., "see Halal et al. 2024"). The agent extracts the citation and uses metadata search to hop to the specific referenced chunk.

- Implicit References: Connections can also be conceptual. An agent can extract entities as metadata, allowing it to find all chunks discussing a specific entity even if they don't share keywords. This facilitates multi-hop reasoning (e.g., "find the father of the author of Book X") by enabling precise secondary retrieval steps.
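For the implicit case, a minimal sketch of entity-based lookup follows. The chunks, entity tags, and the `chunks_for` helper are invented for illustration; in practice the entities would be extracted at indexing time and the agent would read each retrieved chunk to decide the next hop.

```python
from collections import defaultdict

# Made-up chunks: (chunk_id, text, entities extracted at indexing time).
CHUNKS = [
    ("c1", "Book X was written by Author A.", ["Book X", "Author A"]),
    ("c2", "The novelist was raised by her father, Person B.",
     ["Author A", "Person B"]),
    ("c3", "Book Y sold poorly.", ["Book Y"]),
]

# Inverted index from lowercased entity name to the chunks tagged with it.
entity_index = defaultdict(list)
for chunk_id, _text, entities in CHUNKS:
    for entity in entities:
        entity_index[entity.lower()].append(chunk_id)


def chunks_for(entity):
    """Return ids of all chunks tagged with the given entity."""
    return entity_index.get(entity.lower(), [])


hop_1 = chunks_for("Book X")    # the chunk that names the author
hop_2 = chunks_for("Author A")  # every chunk tagged with that author
print(hop_1, hop_2)
```

The second hop returns a chunk whose text never contains the string "Author A", which is the situation where keyword or embedding search on the raw text is most likely to miss.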

Experiment and Results

To validate our approach, we built a custom benchmark based on compliance workflows. The benchmark is adversarial by construction: we selected queries for which the correct chunk received a low first-pass retrieval score.

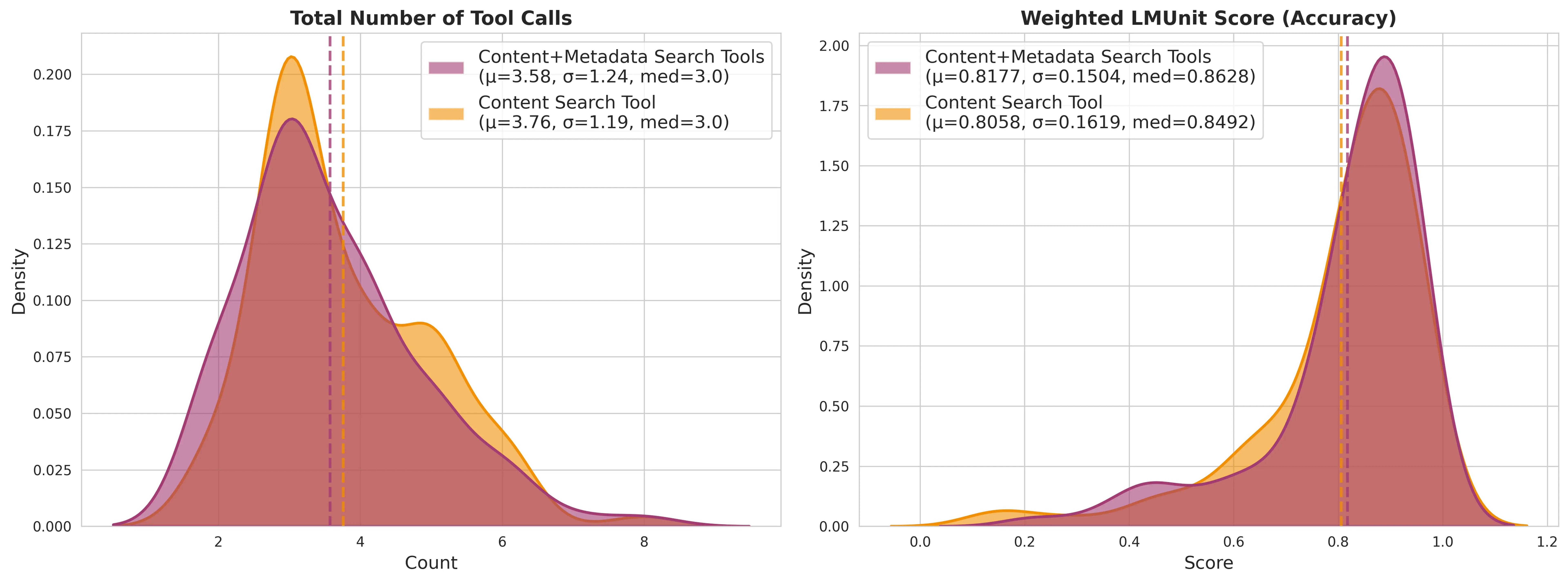

We evaluated a Claude Sonnet 4.5 agent in two configurations: one with access only to text content search, and one with access to both text content and metadata search tools.

Accuracy

When limited to 5 turns, the agent with the metadata search tool outperformed the baseline by 7.62 percentage points:

- Agent with content + metadata search tools: 75.43%

- Agent with content search tool only: 67.81%

These scores reflect a weighted fact-based evaluation metric. We use an LLM to generate natural language unit tests categorized as "essential" and "supplementary" based on a human-annotated ground truth answer for each query. We then score each agent's response against this rubric using LMUnit, with essential facts weighted more heavily in the final score calculation.
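One way such a weighted score could be computed is sketched below. The exact weights and aggregation are not stated here, so the 2:1 weighting, the per-test scores in the range 0 to 1, and the `weighted_fact_score` function are all assumptions made for illustration.

```python
def weighted_fact_score(results, essential_weight=2.0, supplementary_weight=1.0):
    """Weighted average of per-test scores, as a percentage.

    results: list of (category, score) pairs, where category is "essential"
    or "supplementary" and score is in [0, 1]. The weights are assumed values.
    """
    weights = {"essential": essential_weight,
               "supplementary": supplementary_weight}
    total = sum(weights[category] for category, _ in results)
    if total == 0:
        raise ValueError("at least one weighted unit test is required")
    earned = sum(weights[category] * score for category, score in results)
    return 100.0 * earned / total


# Made-up rubric: two essential facts (one missed) and one supplementary fact.
results = [("essential", 1.0), ("essential", 0.0), ("supplementary", 1.0)]
score = weighted_fact_score(results)
print(score)
```

With these assumed weights, missing one essential fact costs twice as much as missing a supplementary one.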

Efficiency

While the content-only agent could eventually close the gap if allowed 10 turns (80.58% vs 81.85%), it had to guess its way to the right document. The metadata-enabled agent reached it with fewer tool calls and fewer tokens, and hence lower latency.

Figure 2: Agents equipped with a metadata search tool resolve complex queries in fewer steps while giving more accurate responses compared to agents relying solely on text content search.

Conclusion

By treating metadata extraction as a prompt engineering problem and traversal as an agentic tool-use problem, we achieve the flexibility of GraphRAG without the complexity. This empowers you to define your own "nodes" via prompting and lets the agent handle the edges. Furthermore, this approach highlights the potential for more sophisticated indexing algorithms to create richer corpus structures, ultimately enabling agents to navigate complex information landscapes with greater intuition and precision.
