Today, we’re introducing our state-of-the-art reranker, the first reranker that can follow custom instructions about how to rank retrievals based on your specific use case’s requirements (recency, document type, source, metadata, etc.). Our reranker is the most accurate in the world with or without instructions – outperforming competitors by large margins on the industry-standard BEIR benchmark, our internal financial and field engineering datasets, and our novel instruction-following benchmarks.

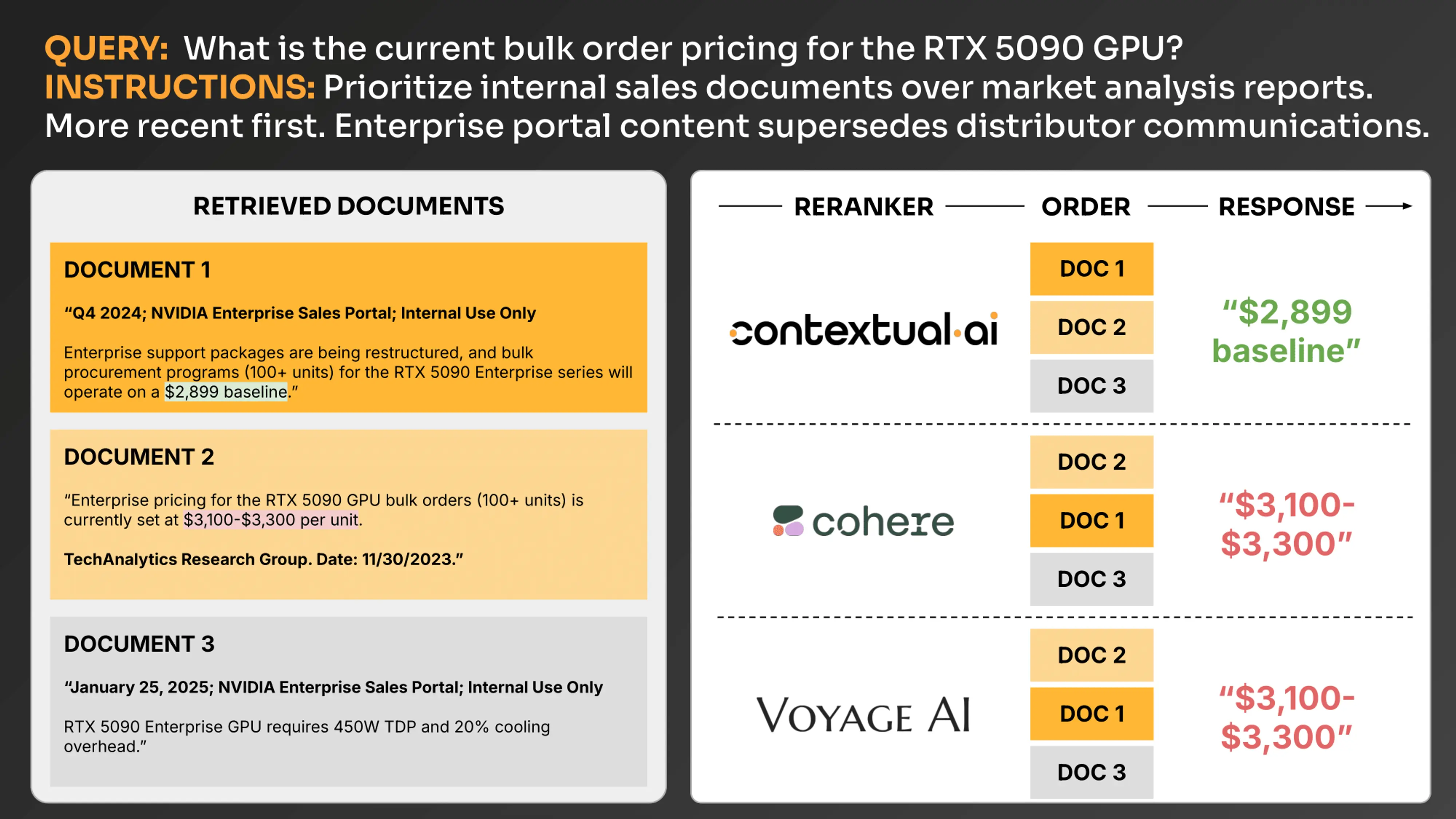

Enterprise RAG systems often deal with conflicting information in their knowledge bases. Marketing materials can conflict with product materials, documents in Google Drive can conflict with those in Microsoft Office, Q2 notes can conflict with Q1 notes, and so on. You can tell our reranker how to resolve these conflicts with instructions like “Prioritize internal sales documents over market analysis reports. More recent documents should be weighted higher. Enterprise portal content supersedes distributor communications.” or “Emphasize forecasts from top-tier investment banks. Recent analysis should take precedence. Disregard aggregator sites and favor detailed research notes over news summaries.” This gives you an unprecedented level of control over ranking and significantly improves RAG performance.

To get started for free today, create a Contextual AI account, visit the Getting Started tab, and use the /rerank standalone API. Our reranker is a seamless drop-in replacement for your existing reranker or an easy addition to your RAG system if you do not already use a reranker. Either way, it will improve your RAG performance.

Instruction following: Unprecedented control over your RAG pipeline

Reranking has become a hard requirement for enterprise AI systems seeking production-grade accuracy. While traditional retrieval methods excel at quickly surfacing potentially relevant documents, reranking provides the crucial final step of precisely ordering these candidates based on their true relevance to the query. At Contextual AI, our systems consistently outperform alternatives in part because of our unique ability to precisely rank information from steerable retrieval mechanisms.

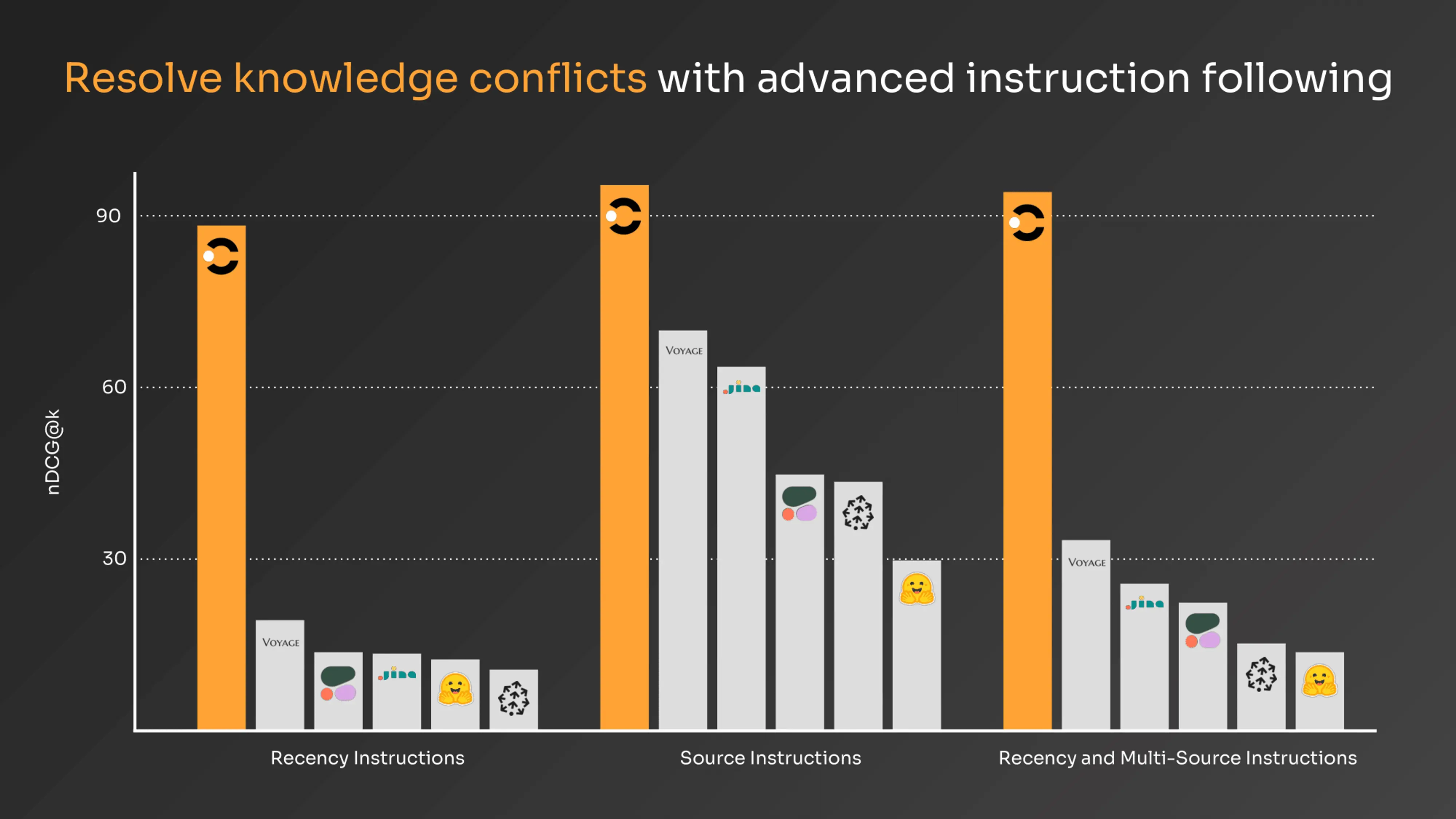

Our reranker’s novel instruction-following functionality enables you to dynamically control how documents are ranked through natural language instructions about documents’ recency, source, metadata, and more. This solves one of the most challenging problems in RAG systems: dealing with conflicting information from multiple sources.

Let’s look at some realistic examples that show how instruction following is a must-have for accurate reranking.

Example 1: The reranker’s ability to follow instructions enables it to rank the documents as desired, even when other documents are also relevant to the query.

Example 2: Other rerankers fail to follow instructions and consequently rank documents incorrectly. In this example, only Contextual’s reranker correctly reranks the provided documents because it prioritizes the most recent sales document’s info over other documents, per the instruction. The instructions are appended to the query when given to the rerankers. We found many more examples like this.

We synthetically generated the documents in this example. Rerankers that do not effectively follow instructions incorrectly rank the documents.
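For rerankers that have no dedicated instruction input, the comparison above appends the instruction to the query. A minimal sketch of that preprocessing step follows. The function name and the single-space separator are assumptions, since the post does not state the exact format used.

```python
def append_instruction(query: str, instruction: str = "") -> str:
    """Return the query with the instruction appended after a single space.

    Hypothetical helper: the exact concatenation format used in the
    comparison is not given, so a plain space separator is assumed.
    """
    query = query.strip()
    instruction = instruction.strip()
    if not instruction:
        # Nothing to append: the reranker sees the plain query.
        return query
    return f"{query} {instruction}"


combined = append_instruction(
    "What is the current enterprise pricing?",
    "Prioritize internal sales documents over market analysis reports.",
)
```

The combined string is then passed as the query to a reranker that accepts only a query and a list of documents.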

These examples offer only a glimpse of the types of instructions that our reranker can handle. We encourage you to write instructions that are specific to your use cases and knowledge bases.

State-of-the-art performance

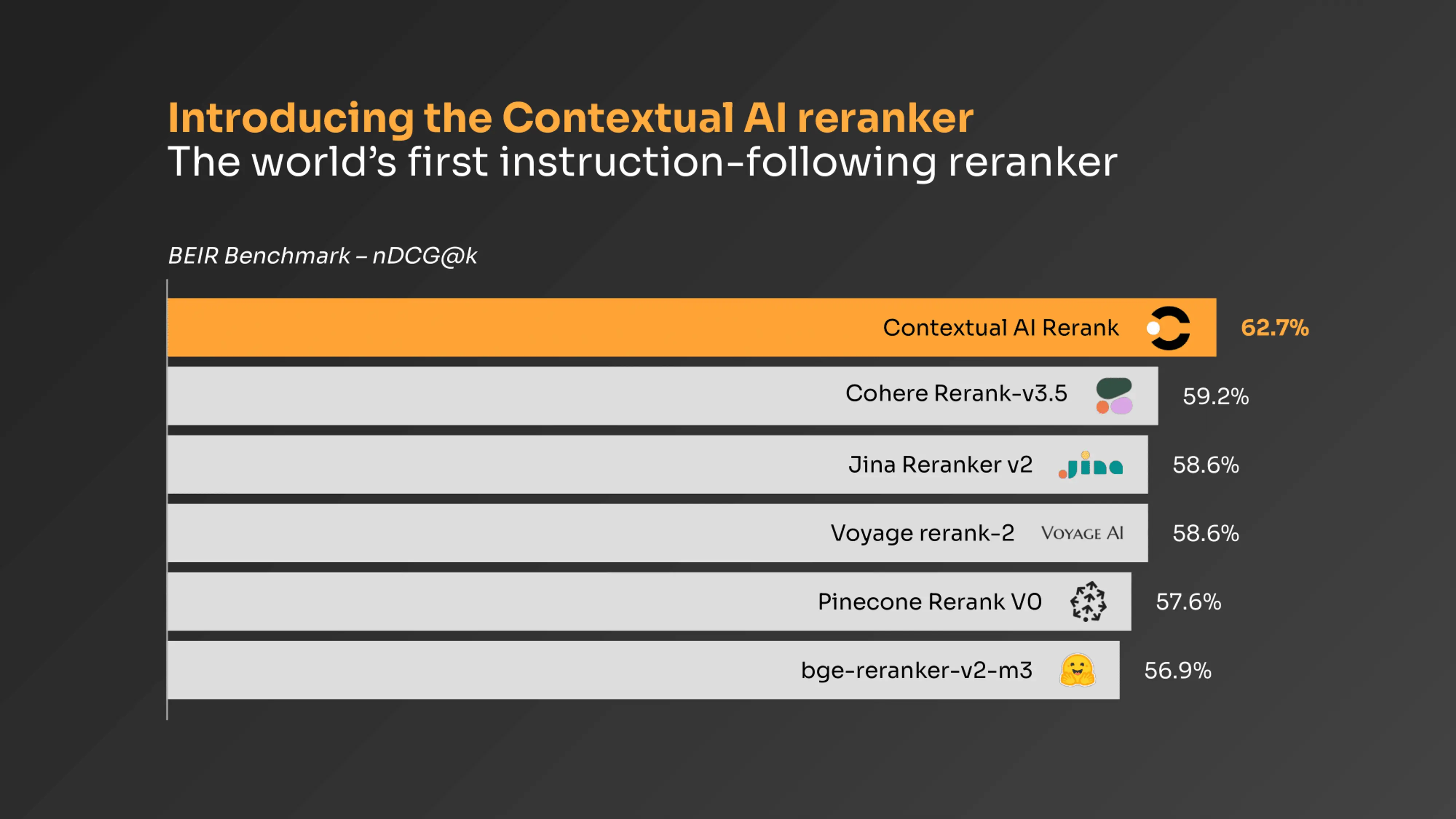

Our reranker is the most accurate and robust in the world with or without instructions, achieving state-of-the-art performance on the industry-standard BEIR benchmark, our internal customer datasets, and our novel instruction-following benchmark.

On BEIR, our reranker achieves state-of-the-art performance with large gains on high-value tasks. Some highlights in which we outperformed all others are:

- Multi-hop Reasoning: State-of-the-art performance on HotpotQA, demonstrating excellent capability in handling complex queries that require connecting information across multiple documents

- Financial Domain: State-of-the-art results on FiQA and our internal financial customer datasets (Fortune 500 bank), showing particular strength in financial document understanding

- Scientific Literature: State-of-the-art performance on SCIDOCS and SciFact, proving effectiveness in academic and research contexts

- Fact Verification: State-of-the-art results on FEVER and ClimateFEVER, highlighting reliable ranking for fact-checking applications

We used 14 BEIR datasets with k=8. We ranked 100 retrieved documents.
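The post does not name the metric behind k=8. nDCG@k is the usual headline metric on BEIR, so the sketch below assumes it and shows how such a score could be computed for one query. The function names are illustrative, and the ideal ranking is taken from the candidate list itself, which assumes every judged document for the query is among the reranked candidates.

```python
import math


def dcg_at_k(relevances, k):
    """Discounted cumulative gain over the top-k relevance labels (linear gain)."""
    return sum(rel / math.log2(rank + 2) for rank, rel in enumerate(relevances[:k]))


def ndcg_at_k(ranked_relevances, k):
    """nDCG@k for one query.

    ranked_relevances holds the relevance label of each candidate,
    in the order the reranker returned them.
    """
    ideal = sorted(ranked_relevances, reverse=True)  # best possible ordering
    ideal_dcg = dcg_at_k(ideal, k)
    if ideal_dcg == 0:
        # No relevant candidates for this query.
        return 0.0
    return dcg_at_k(ranked_relevances, k) / ideal_dcg


# Made-up labels: one relevant document, ranked second by the reranker.
score = ndcg_at_k([0, 1, 0, 0], k=8)
```

A benchmark score under this assumption would be the mean of the per-query values over each dataset.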

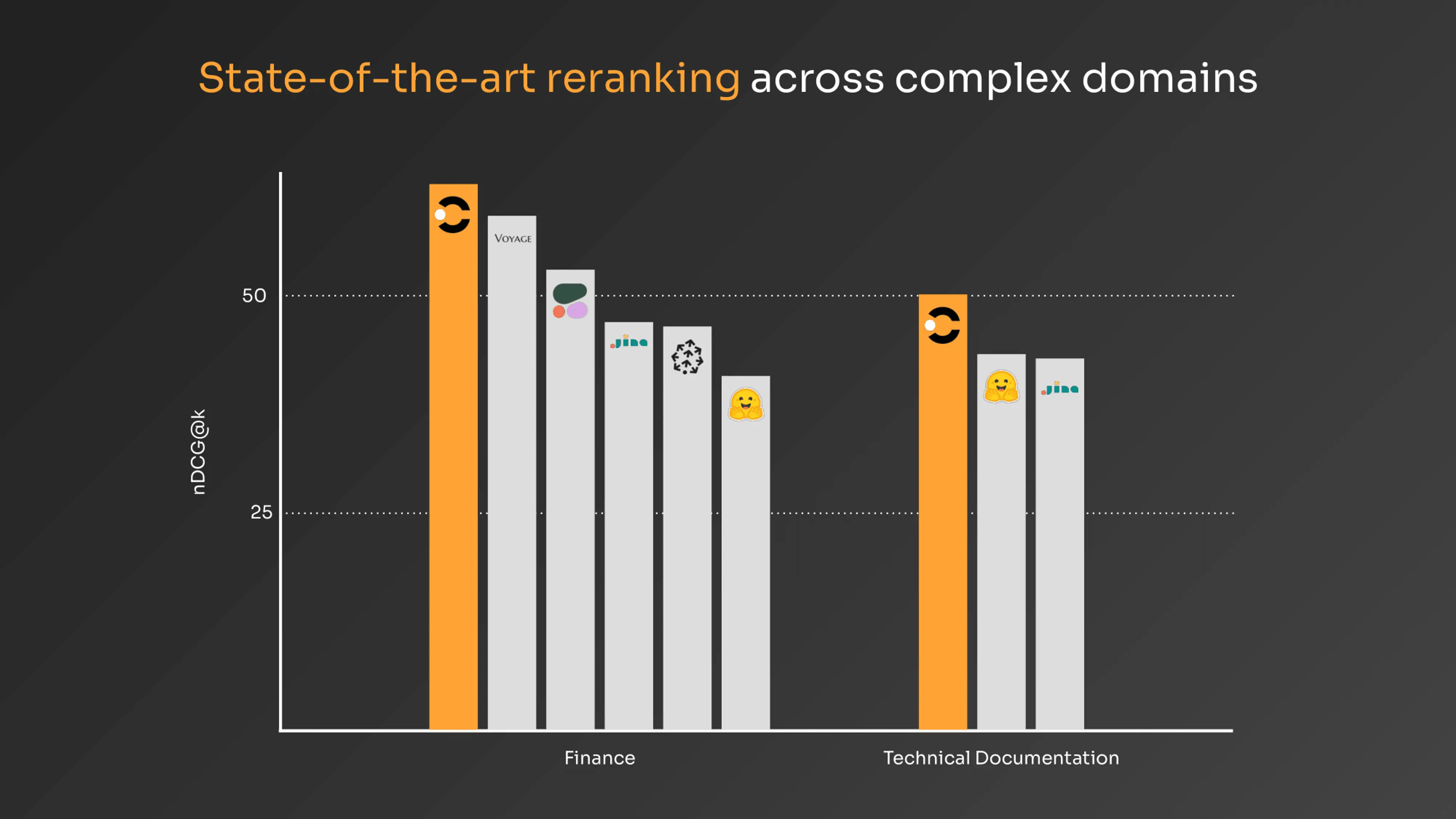

In practice, our reranker outperforms others on our internal customer datasets from Fortune 500 enterprises and more.

The Finance benchmark includes 2 datasets (930 total queries), and the Technical Documentation benchmark includes 4 datasets (1046 queries). We use k=8 for both.

Finally, we created datasets of examples that test a reranker’s ability to follow instructions in queries, and our reranker once again achieved leading performance by a huge margin.

Each of these benchmarks has 500 queries. We use k=1.

These improvements translate directly to more accurate and reliable RAG systems in production environments.

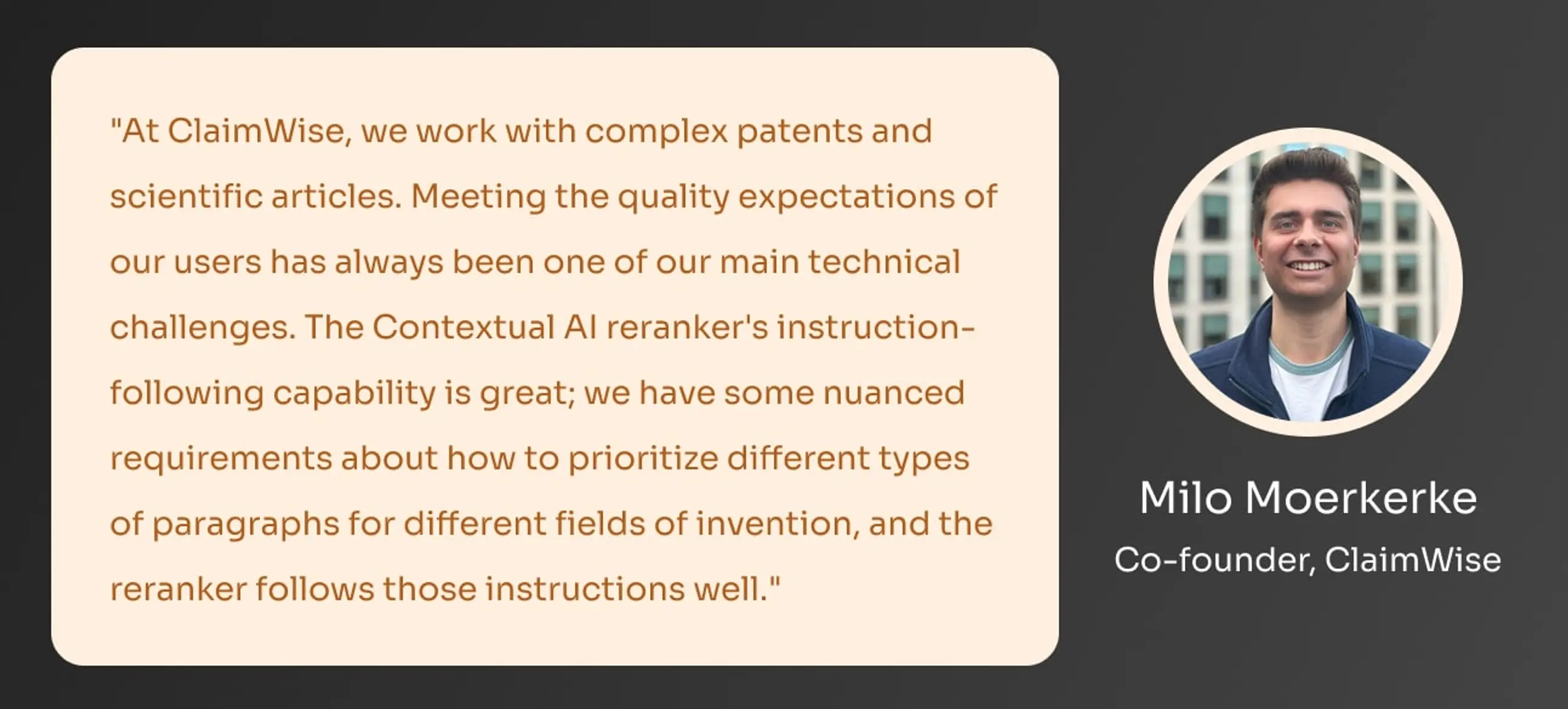

Customer spotlight: ClaimWise

ClaimWise is an AI solution designed to validate patent legitimacy. They assist patent professionals in developing stronger legal reasoning for patentability assessments, responding to office actions, handling opposition procedures, and determining enforceability. They originally used another leading reranker but switched to the Contextual AI reranker because of its superior performance.

Getting started with the reranker

Try the reranker for free today by creating a Contextual AI account, visiting the Getting Started tab, and using the /rerank standalone API. We provide credits for the first 50M tokens, and you can buy additional credits as your needs grow. To request custom rate limits and pricing, please contact us. If you have any feedback or need support, please email reranker-feedback@contextual.ai.

The reranker ranks documents primarily by their relevance to the query and secondarily by your custom instructions. We evaluated the model on instructions for recency, document type, source, and metadata, and it can generalize to other instructions as well. For instructions related to recency and timeframe, state the timeframe explicitly (for example, name the year instead of saying “this year”) because the reranker doesn’t know the current date.
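One way to apply the timeframe advice is to check an instruction for date-relative wording before sending it. The sketch below is a hypothetical pre-flight check, not part of the Contextual AI SDK. The payload field names (`query`, `documents`, `instruction`, `model`) and the model placeholder are assumptions, so confirm them against the /rerank API documentation.

```python
# Phrases that depend on the current date, which the reranker does not know.
RELATIVE_PHRASES = ("this year", "last year", "this quarter", "this month", "today")


def find_relative_phrases(instruction: str) -> list:
    """Return the date-relative phrases that appear in the instruction."""
    lowered = instruction.lower()
    return [phrase for phrase in RELATIVE_PHRASES if phrase in lowered]


def build_rerank_payload(query: str, documents: list, instruction: str, model: str) -> dict:
    """Assemble a request body; the field names are assumed, not confirmed."""
    relative = find_relative_phrases(instruction)
    if relative:
        raise ValueError(f"Replace relative timeframes with explicit dates: {relative}")
    return {
        "query": query,
        "documents": documents,
        "instruction": instruction,
        "model": model,
    }


# Made-up query and documents, for illustration only.
payload = build_rerank_payload(
    query="What is our enterprise pricing?",
    documents=["Pricing memo dated 2024-11-02.", "Pricing memo dated 2023-01-15."],
    instruction="Prioritize documents dated 2024 or later.",
    model="your-reranker-model-id",  # placeholder, not a real model name
)
```

An instruction such as "Prioritize documents from this year." would raise a `ValueError` here, prompting you to rewrite it with an explicit date.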

Documentation: /rerank API, Python SDK, LangChain package, and code examples for each.

Optimizing the reranker with the Contextual AI Platform

Founded by the inventors of RAG, Contextual AI offers a state-of-the-art platform for building and deploying specialized RAG agents. Each individual component of the platform achieves state-of-the-art performance, including document understanding, structured and unstructured retrieval (with the reranker), grounded generation, and evaluation. The platform achieves even greater end-to-end performance by jointly optimizing the components as a single, unified system.

Using the Contextual reranker will likely drive significant improvement in your existing RAG and agentic workflows, and using the Contextual AI Platform can provide even further gains. Our platform is generally available and works for a diversity of use cases and settings. Sign up to build a production-ready, specialized RAG agent today!

Related Articles

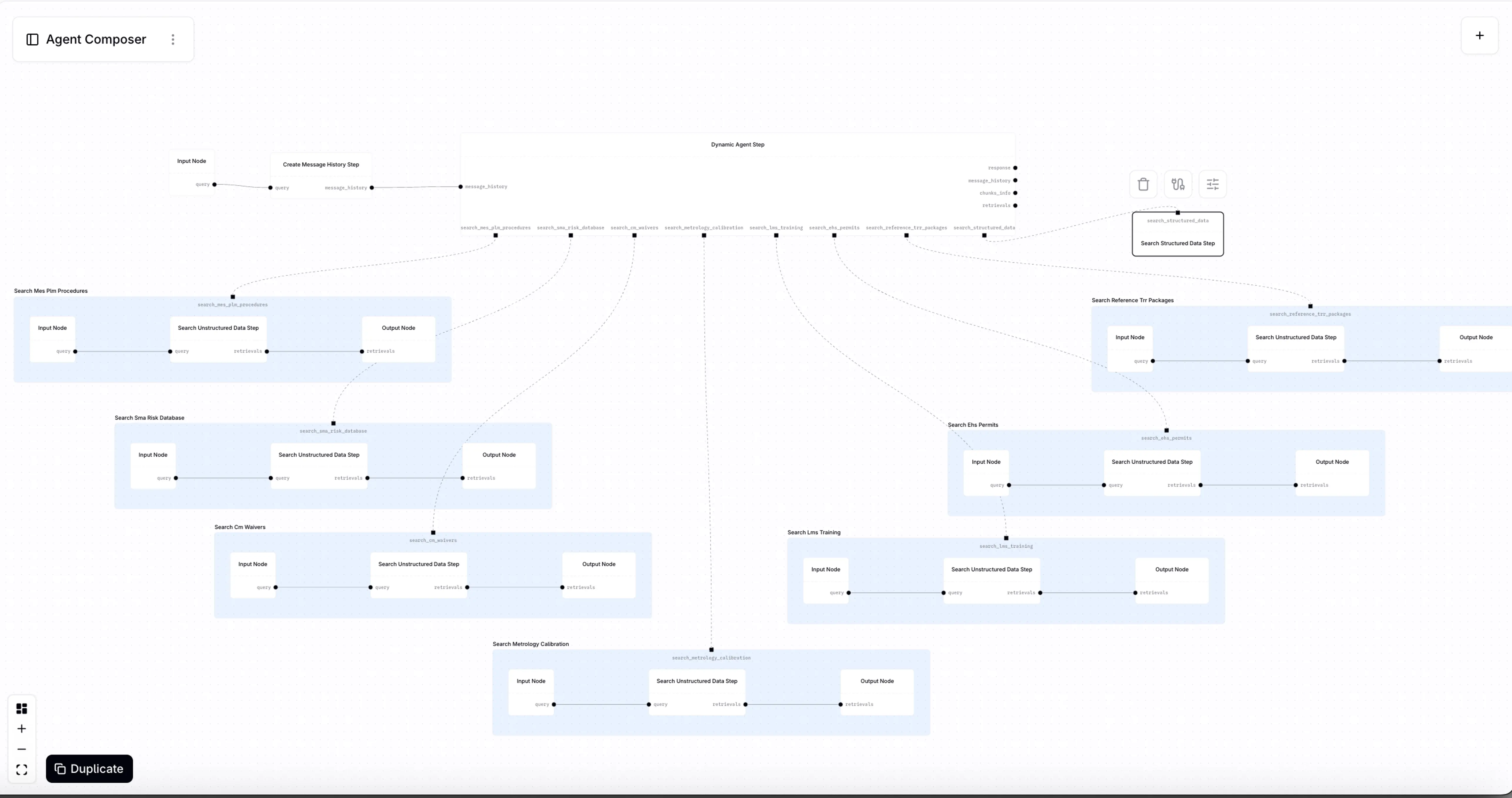

Introducing Agent Composer—AI for when it IS rocket science

Introducing Agent Composer: Expert AI for complex engineering. Cut hours of specialized, technical work down to minutes.

What is Context Engineering?