Dynamic MCP Server Selection: Using Contextual AI’s Reranker to Pick the Right Tools for Your Task

We came away from AI Engineer World’s Fair with an interesting takeaway from some of the organizers of the MCP track: MCP server creation has exploded so quickly that one of the emerging challenges in this space is selecting the right server for your task. There are over 5,000 servers on PulseMCP, with more added daily. Recent findings on context rot quantify what many of us have experienced with LLMs: the more tokens you add to the input, the worse performance gets. So how should your AI agent select the right tool from thousands of options and their accompanying descriptions? Enter the reranker.

We prototyped a solution that treats server selection as a Retrieval-Augmented Generation (RAG) problem, using Contextual AI’s reranker to automatically find the best tools for any query. In a typical RAG pipeline, a reranker takes an initial set of retrieved candidates (usually from semantic search) and reorders them by relevance to the query. Here, however, we use the reranker in a non-standard way: as a standalone ranking component for MCP server selection. Instead of only reordering a pre-filtered candidate set by semantic similarity, our approach leverages the reranker’s instruction-following capabilities to rank all available servers from scratch. This lets us incorporate specific requirements drawn from server metadata and functional descriptions, going beyond simple semantic matching to consider factors like capability alignment, parameter requirements, and contextual suitability for the given query.
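Because the reranker ranks servers from scratch, each server first has to become a single text document that carries its metadata as well as its description; otherwise criteria like “remote” or star count are invisible to the model. A minimal sketch, assuming a hypothetical `ServerRecord` schema with only the fields this post mentions (the real PulseMCP data and the notebook’s formatting may differ):

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ServerRecord:
    # Hypothetical schema mirroring metadata this post mentions
    # (name, description, stars, remote support); not PulseMCP's real format.
    name: str
    description: str
    stars: Optional[int]  # None when the directory lists no star count
    remote: bool


def to_document(server: ServerRecord) -> str:
    """Flatten one server's metadata into a text document the reranker can read."""
    stars = "N/A" if server.stars is None else f"{server.stars:,}"
    remote = "remote configuration available" if server.remote else "no remote configuration available"
    return (
        f"Name: {server.name}\n"
        f"Description: {server.description}\n"
        f"Stars: {stars}\n"
        f"Remote: {remote}"
    )


servers: List[ServerRecord] = [
    ServerRecord("Zapier", "Connects to 8000+ apps on Zapier.", None, True),
    ServerRecord("Email Server", "Sends and receives email via IMAP and SMTP.", 64, False),
]
documents = [to_document(s) for s in servers]
print(documents[0])
```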

The Problem: Too Many Choices

MCP is the missing link that lets AI models talk to your apps, databases, and tools without having to integrate them one by one: think of it as a USB-C port for AI. With thousands of MCP servers available, there is very likely one for your use case. But how do you find it using just a prompt to your LLM?

Say your AI needs to “find recent CRISPR research for treating sickle cell disease.” Should it use a biology database, an academic paper service, or a general web search tool? With thousands of MCP servers available, your agent has to identify which server (or sequence of servers) can handle this specific research query, then choose the most relevant options. The main challenge isn’t finding servers that mention “research”; it’s understanding the semantic relationship between what users want and what servers actually do.

Screenshot of the PulseMCP Server Directory on July 31, 2025

Server Selection as a RAG Problem

This server selection challenge follows the same pattern as Retrieval-Augmented Generation: you need to search through a large knowledge base (server descriptions), find relevant candidates, rank them by relevance, then give your AI agent the best options.

Traditional keyword matching falls short because server capabilities are described differently than user queries. A user asking for “academic sources” might need a server described as “scholarly database integration” or “peer-reviewed literature access.” Even when multiple servers could handle the same query, you need smart ranking to prioritize based on factors like data quality, update frequency, and specific domain expertise that the user desires.
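A deliberately naive keyword matcher makes the gap concrete. The sketch below scores a query against a description by the Jaccard overlap of their word sets (our own toy stand-in, not anything from the notebook): both descriptions above score zero for “academic sources” even though either server could serve the query.

```python
import re


def keyword_overlap(query: str, description: str) -> float:
    """Jaccard overlap between lowercase word sets: a crude keyword matcher."""
    q = set(re.findall(r"[a-z]+", query.lower()))
    d = set(re.findall(r"[a-z]+", description.lower()))
    if not q or not d:
        return 0.0
    return len(q & d) / len(q | d)


query = "academic sources"
for desc in ["scholarly database integration", "peer-reviewed literature access"]:
    # Both print 0.0: no shared words, despite a clear semantic match.
    print(desc, keyword_overlap(query, desc))
```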

Rather than building a full RAG system for server selection, we use one component of the pipeline: the reranker. A reranker is a model that takes an initial set of documents retrieved by a search system and reorders them to improve relevance, typically using more sophisticated semantic understanding than the original retrieval method. Contextual AI’s reranker can also follow natural-language instructions, which lets us specify the selection criteria more precisely.
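For reference, the standard two-stage pattern looks roughly like this. It is a generic sketch with hypothetical scorer functions, not any real reranker API. Setting `k_retrieve` to the full list length skips the shortlist and makes the reranker score every candidate, which is the rank-everything usage this post relies on.

```python
from typing import Callable, List, Tuple

# Hypothetical scorer signature: (query, document) -> relevance score.
# Neither stage below is a real library API; plug in your own models.
Scorer = Callable[[str, str], float]


def retrieve_then_rerank(
    query: str,
    docs: List[str],
    retrieve: Scorer,
    rerank: Scorer,
    k_retrieve: int,
    k_final: int,
) -> List[Tuple[str, float]]:
    """Two-stage ranking: shortlist with a cheap scorer, then reorder with the reranker."""
    shortlist = sorted(docs, key=lambda d: retrieve(query, d), reverse=True)[:k_retrieve]
    rescored = [(d, rerank(query, d)) for d in shortlist]
    return sorted(rescored, key=lambda pair: pair[1], reverse=True)[:k_final]


# Toy scorers for illustration only: word overlap for retrieval,
# and a "reranker" that happens to prefer shorter documents.
def overlap(q: str, d: str) -> float:
    return float(len(set(q.split()) & set(d.split())))


def prefer_short(q: str, d: str) -> float:
    return -float(len(d))


docs = ["email sms", "email", "calendar", "files"]
print(retrieve_then_rerank("send email", docs, overlap, prefer_short, k_retrieve=2, k_final=1))
```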

Our Solution: MCP Server Reranking with Contextual AI

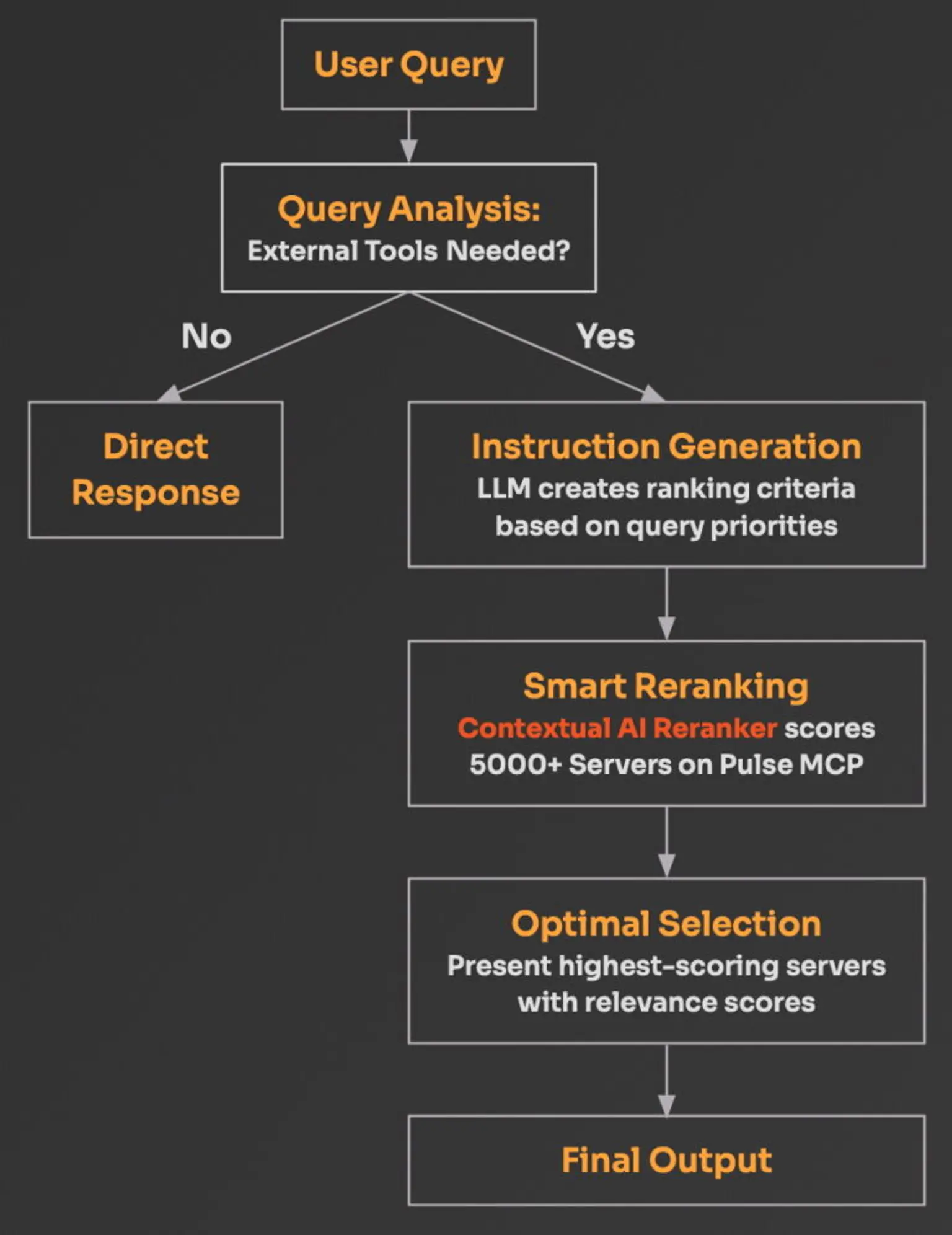

We built a workflow that automatically handles server selection:

- Query Analysis: Given a user query, an LLM first decides whether external tools are needed;

- Instruction Generation: If tools are required, the LLM automatically writes ranking criteria tailored to the query that capture its priorities;

- Smart Reranking: Contextual AI’s reranker scores all 5,000+ servers on PulseMCP against these criteria;

- Optimal Selection: The system presents the highest-scoring servers with relevance scores.
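The four steps can be wired together as follows. This is a sketch, not the notebook’s code: the two LLM calls and the reranker are passed in as plain callables with hypothetical signatures, and toy stand-ins are used so the control flow runs without API keys. In a real setup, `rerank` would wrap a call to Contextual AI’s reranker, with the generated instruction passed alongside the query.

```python
from typing import Callable, List, Optional, Sequence, Tuple

# Hypothetical stand-ins for the real components:
#   needs_tools(query) -> bool                     (step 1, an LLM call)
#   make_instruction(query) -> str                 (step 2, an LLM call)
#   rerank(query, instruction, docs) -> [scores]   (step 3, the reranker)


def select_servers(
    query: str,
    documents: Sequence[str],
    needs_tools: Callable[[str], bool],
    make_instruction: Callable[[str], str],
    rerank: Callable[[str, str, Sequence[str]], List[float]],
    top_k: int = 5,
) -> Optional[List[Tuple[int, float]]]:
    """Return (document index, score) for the top_k servers, or None if no tools are needed."""
    if not needs_tools(query):  # step 1: query analysis
        return None
    instruction = make_instruction(query)  # step 2: instruction generation
    scores = rerank(query, instruction, documents)  # step 3: score every server
    if len(scores) != len(documents):
        raise ValueError("reranker must return exactly one score per document")
    ranked = sorted(enumerate(scores), key=lambda pair: pair[1], reverse=True)
    return ranked[:top_k]  # step 4: highest-scoring servers with their scores


# Toy reranker so the control flow can run without any API keys.
def toy_rerank(query: str, instruction: str, docs: Sequence[str]) -> List[float]:
    # Fraction of instruction keywords that appear in each document.
    keywords = set(instruction.lower().split())
    return [sum(k in d.lower() for k in keywords) / len(keywords) for d in docs]


docs = ["email remote", "email", "calendar"]
result = select_servers(
    "send an email",
    docs,
    needs_tools=lambda q: "send" in q,
    make_instruction=lambda q: "email remote",
    rerank=toy_rerank,
    top_k=2,
)
print(result)
```

Keeping the components injectable means the same control flow can be exercised with fakes in tests and with real LLM and reranker clients in production.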

In this solution, one key innovation is using an LLM to generate ranking instructions rather than using generic matching rules. For example, for the CRISPR research query, the instructions might prioritize academic databases and scientific APIs over social media or file management tools. Check out the full notebook here.
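As an illustration of the instruction-generation step, a prompt along these lines could ask the LLM for ranking criteria. The wording, the `NO_TOOLS` sentinel, and the choice to fold the “are tools needed?” decision into the same call are all our own assumptions: one possible design, not the prompt used in the notebook.

```python
from typing import Optional

# Hypothetical prompt: one possible wording, not the prompt used in the notebook.
PROMPT_TEMPLATE = """You are helping an AI agent choose MCP servers.
User query: {query}

If the query needs no external tools, reply with exactly: NO_TOOLS
Otherwise, write one short paragraph of ranking instructions for a reranker.
Name the capabilities that matter, any hard requirements the user states
(for example remote deployment or user ratings), and what to deprioritize."""

NO_TOOLS = "NO_TOOLS"


def build_instruction_prompt(query: str) -> str:
    """Fill the template with the user's query."""
    return PROMPT_TEMPLATE.format(query=query.strip())


def parse_instruction(llm_reply: str) -> Optional[str]:
    """Return None if the LLM says no tools are needed, else the ranking instruction."""
    reply = llm_reply.strip()
    return None if reply == NO_TOOLS else reply


print(build_instruction_prompt("find recent CRISPR research for treating sickle cell disease"))
```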

Reranker vs LLM baseline

To test our approach, we set up a comparison between our reranker system and a straightforward baseline where GPT-4o-mini directly selects the top 5 most relevant servers from truncated descriptions* of all 5,000+ available MCP servers.

*Note: we truncated these descriptions to fit in the context window; this step may become unnecessary as context windows grow.
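The footnote’s truncation step can be as simple as giving every server an equal slice of the context budget. A minimal sketch, assuming characters as a rough proxy for tokens (the exact truncation scheme used for the baseline isn’t specified here):

```python
from typing import List


def truncate_descriptions(descriptions: List[str], budget_chars: int, min_chars: int = 40) -> List[str]:
    """Give every description the same share of a character budget.

    Characters are a rough stand-in for tokens; a real pipeline would count
    tokens with the model's tokenizer. Because each entry keeps at least
    min_chars, the total can exceed the budget when there are very many items.
    """
    if min_chars < 4:
        raise ValueError("min_chars must leave room for the '...' marker")
    if not descriptions:
        return []
    per_item = max(min_chars, budget_chars // len(descriptions))
    return [d if len(d) <= per_item else d[: per_item - 3] + "..." for d in descriptions]


short = truncate_descriptions(["a" * 100, "b" * 10], budget_chars=120)
print([len(d) for d in short])
```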

For simple queries like

help me manage GitHub repositories

both approaches perform similarly: they correctly identify GitHub-related servers since the mapping is obvious.

But complex queries reveal where our approach truly shines. We were looking for a well-rated remote MCP server for communicating externally for a multi-agent demo, and tried this query:

I want to send an email or a text or call someone via MCP, and I want the server to be remote and have high user rating

Our reranker workflow springs into action. First, the LLM recognizes this query needs external tools and generates specific ranking instructions:

Select MCP servers that offer capabilities for sending emails, texts, and making calls. Ensure the servers are remote and have high user ratings. Prioritize servers with reliable communication features and user feedback metrics

Then Contextual AI’s reranker evaluates all 5,000+ servers against these nuanced criteria. Its top five selections are:

1. Activepieces (Score: 0.9478, Stars: 16,047) - Dynamic server to which you can add apps (Google Calendar, Notion, etc) or advanced Activepieces Flows (Refund logic, a research and enrichment logic, etc). Remote: SSE transport with OAuth authentication, free tier available

2. Zapier (Score: 0.9135, Stars: N/A) - Generate a dynamic MCP server that connects to any of your favorite 8000+ apps on Zapier. Remote: SSE transport with OAuth authentication, free tier available

3. Vapi (Score: 0.8940, Stars: 24) - Integrates with Vapi's AI voice calling platform to manage voice assistants, phone numbers, and outbound calls with scheduling support through eight core tools for automating voice workflows and building conversational agents. Remote: Multiple transports available (streamable HTTP and SSE) with API key authentication, paid service

4. Pipedream (Score: 0.8557, Stars: 10,308) - Access hosted MCP servers or deploy your own for 2,500+ APIs like Slack, GitHub, Notion, Google Drive, and more, all with built-in auth and 10k tools. Remote: No remote configuration available

5. Email Server (Score: 0.8492, Stars: 64) - Integrates with email providers to enable sending and receiving emails, automating workflows and managing communications via IMAP and SMTP functionality. Remote: No remote configuration available

The top three results deliver exactly what we need (remote deployment capability), and the first option worked flawlessly in our demo. The baseline, by contrast, has no way to take metadata criteria like “remote” and “stars” into account, so it recommends MCP servers without considering the requirements users actually care about. Its top five picks were:

1. Email Server

2. Gmail

3. Twilio Messaging

4. Protonmail

5. Twilio SMS

The reranker’s top suggestions match your instructions more closely than the baseline’s results, and getting them is far faster than reading through all the documentation yourself.
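One way to make “exactly what we need” measurable is to check each recommendation against the query’s hard requirements. The sketch below encodes the reranker’s top five from above, with remote support as listed, and computes the fraction of the top k that are remote; the helper is our own illustration, not part of the notebook. The same check could be applied to the baseline’s picks once each is labeled for remote support.

```python
from typing import Callable, List, Tuple

# Reranker's top five from this post: (name, score, remote support as listed).
Result = Tuple[str, float, bool]
reranker_top5: List[Result] = [
    ("Activepieces", 0.9478, True),
    ("Zapier", 0.9135, True),
    ("Vapi", 0.8940, True),
    ("Pipedream", 0.8557, False),
    ("Email Server", 0.8492, False),
]


def fraction_meeting_requirement(results: List[Result], meets: Callable[[Result], bool], k: int) -> float:
    """Fraction of the top-k results that satisfy a hard requirement."""
    top = results[:k]
    return sum(1 for r in top if meets(r)) / len(top)


is_remote = lambda r: r[2]
print(fraction_meeting_requirement(reranker_top5, is_remote, 3))
print(fraction_meeting_requirement(reranker_top5, is_remote, 5))
```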

Check out the full notebook here.

Conclusion

With Contextual AI’s reranker acting as the interface between your LLM and the available MCP servers, your agent can automatically surface the most relevant tools while filtering out thousands of irrelevant options.

The approach scales naturally as the MCP ecosystem grows: more servers simply mean more candidates for the reranker to evaluate. Since we pull from a live directory that is updated every hour, your LLM always has access to the newest tools without manual configuration or outdated server lists.
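Keeping the candidate list fresh is mostly a caching question. A minimal sketch, assuming a hypothetical `fetch` callable that pulls server documents from the directory and a one-hour time-to-live to match its update cadence:

```python
import time
from typing import Callable, List, Optional


class DirectoryCache:
    """Re-fetch the server list when the cached copy is older than ttl_seconds.

    `fetch` is a hypothetical callable that downloads server documents from
    the directory; `clock` is injectable so the refresh logic can be tested.
    """

    def __init__(
        self,
        fetch: Callable[[], List[str]],
        ttl_seconds: float = 3600.0,
        clock: Callable[[], float] = time.monotonic,
    ):
        self._fetch = fetch
        self._ttl = ttl_seconds
        self._clock = clock
        self._docs: Optional[List[str]] = None
        self._fetched_at = 0.0

    def documents(self) -> List[str]:
        """Return cached documents, refreshing them first if they are stale."""
        now = self._clock()
        if self._docs is None or now - self._fetched_at >= self._ttl:
            self._docs = self._fetch()
            self._fetched_at = now
        return self._docs
```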

Get started

You can start using Contextual AI’s reranker (or other products) for free by signing up at https://contextual.ai/. The full notebook with the above work is here. And follow us on X or LinkedIn for exciting upcoming news about our reranker!

Related Articles

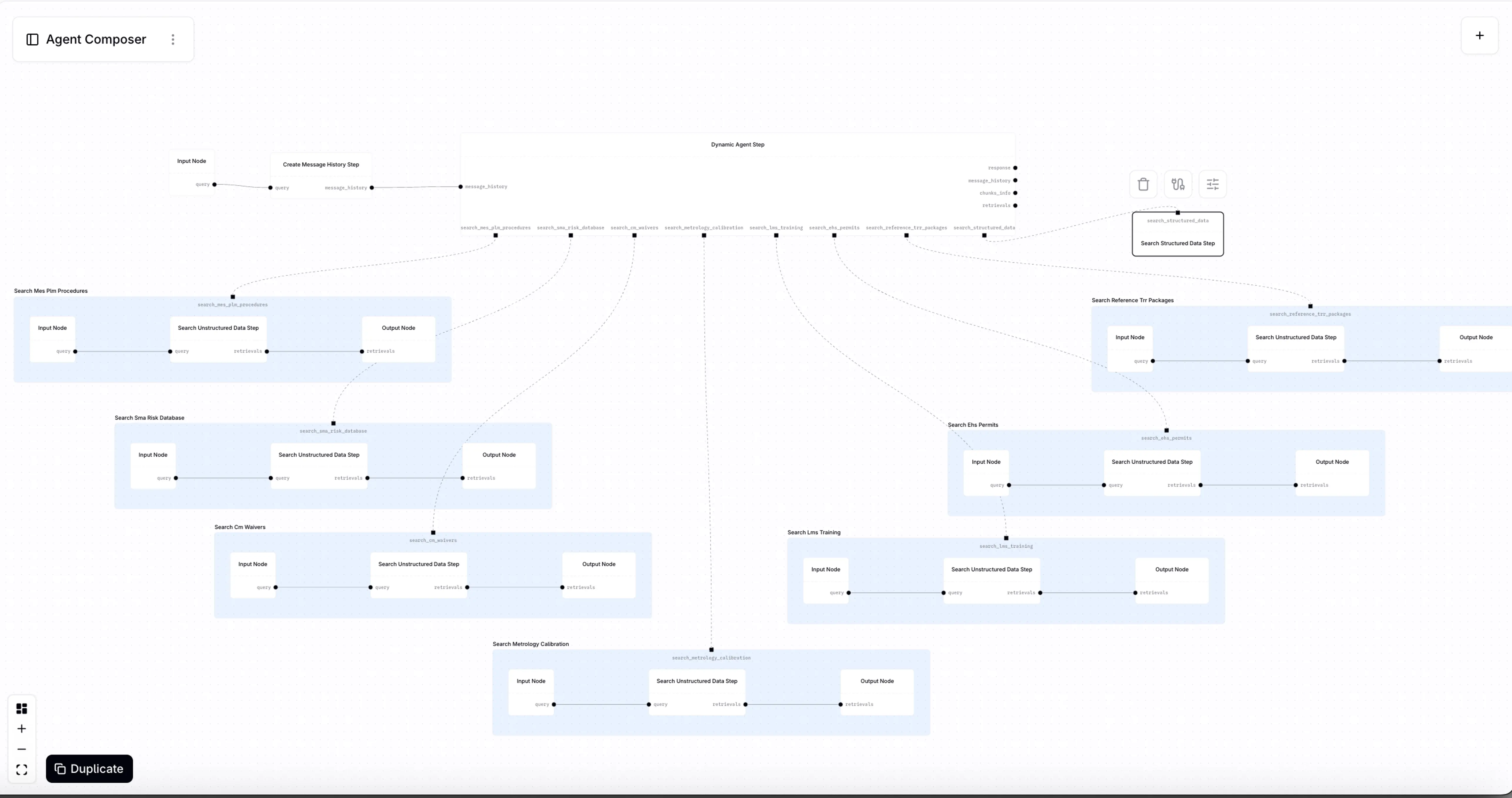

Introducing Agent Composer—AI for when it IS rocket science

Introducing Agent Composer: Expert AI for complex engineering. Cut hours of specialized, technical work down to minutes.

What is Context Engineering?