Retrieval-Augmented Generation (RAG) remains one of the most discussed approaches in enterprise AI. As AI systems become increasingly integrated into businesses, the need for accurate, reliable, and up-to-date information has never been more critical. Despite the advancement of large language models (LLMs) with ever-expanding context windows, RAG continues to be essential for organizations to leverage their data alongside the reasoning capabilities of modern AI.

This guide aims to clarify what RAG is, why it matters for enterprises, and how it fits into the broader AI ecosystem.

What is RAG?

RAG was first introduced in 2020 by researchers at Meta’s Fundamental AI Research (FAIR) lab, then known as Facebook AI Research, as a method to augment generative models with external knowledge. At its core, RAG combines two kinds of memory: the parametric memory of language models (knowledge encoded in the model’s weights during training) and non-parametric memory (knowledge stored externally that can be accessed on demand).

RAG extends a language model’s capabilities by retrieving relevant information from external data sources and injecting it into the model’s context window before generation. This approach allows the model to “reason” over information it was not originally trained on.

In simpler terms, RAG gives AI systems the ability to “look up” information they need rather than relying solely on what was learned during training. Just as humans consult reference materials when faced with unfamiliar questions, RAG provides AI with access to the specific knowledge needed for a given task.
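The retrieve-then-inject idea can be sketched in a few lines. This is a minimal illustration, not a production design: the sample documents, the word-count similarity (a stand-in for a learned embedding model), and the function names `retrieve` and `build_prompt` are all assumptions made for the example.

```python
import math
import re
from collections import Counter

# Hypothetical in-memory knowledge base; a real system would use a vector database.
DOCS = [
    "The refund window for hardware purchases is 30 days.",
    "Support tickets are answered within one business day.",
    "The 2024 travel policy caps hotel rates at 250 dollars per night.",
]


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two token-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query, docs, k=1):
    """Return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(docs, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_prompt(query, docs, k=1):
    """Inject the retrieved text into the prompt ahead of the question."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {query}"


prompt = build_prompt("What is the refund window for hardware?", DOCS)
print(prompt)
```

The resulting `prompt` string is what would be sent to the language model; the model call itself is left out because it depends on the provider.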

The AI Problems RAG Solves

RAG addresses several fundamental limitations of standalone LLMs and lets AI systems handle more domain-specific use cases:

- Proprietary Data Access: Companies possess vast amounts of internal knowledge—documents, databases, code repositories, and communication records—that no public LLM has been trained on. RAG provides a secure bridge between these proprietary information sources and powerful AI reasoning capabilities.

- Knowledge Cutoff Mitigation: All LLMs have a training cutoff date beyond which they have no knowledge. For enterprises dealing with rapidly changing information (market conditions, product specifications, regulations), this limitation is significant. RAG allows systems to access the most current information available.

- Hallucination Reduction: Despite advances in this area, LLMs still generate plausible-sounding but incorrect information, which can cause real business damage. Grounding responses in retrieved content reduces these hallucinations and makes AI systems more trustworthy for critical business applications.

- Attribution and Compliance: Tracing AI-generated outputs back to source documents is essential, particularly in regulated industries. RAG naturally facilitates attribution by explicitly retrieving and referencing specific documents, enabling citation and verification.

- Cost Efficiency: While expanding context windows might seem like an alternative solution (more on this later), RAG offers a more economical approach by only processing the most relevant information rather than loading enormous amounts of data into the model’s context.

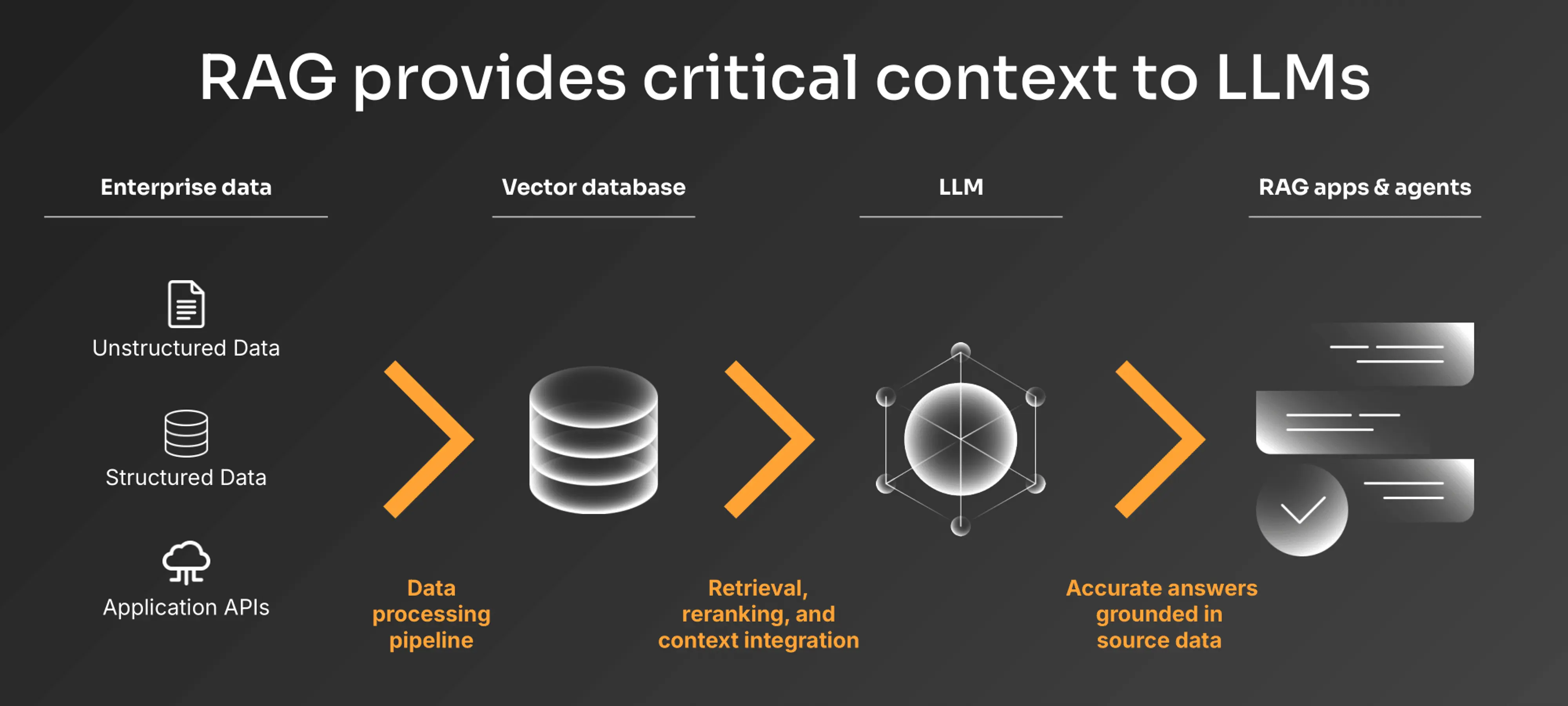

Core Components of a RAG System

A typical RAG implementation consists of several key components:

- Data Processing Pipeline: Before retrieval can happen, documents must be ingested, chunked into appropriate segments, and processed. This pipeline handles various data formats (e.g., PDFs, DOCX, HTML) and prepares them for embedding. Effective chunking strategies are critical—too small, and you lose context; too large, and precision suffers. Advanced pipelines incorporate metadata extraction, hierarchical chunking, and specialized processors for different content types (e.g., tables, code, figures).

- Vector Database: Documents are transformed into vector embeddings that capture their semantic meaning and stored in specialized databases optimized for similarity search. The choice of embedding model significantly impacts retrieval quality, with domain-specific models often outperforming general-purpose ones for enterprise use cases. These databases must balance query performance, storage efficiency, and update capabilities to handle evolving enterprise knowledge.

- Retrieval Mechanism: When a query arrives, the system determines which documents are most relevant using techniques ranging from simple similarity search to more sophisticated query expansion and hybrid retrieval methods. Effective retrieval often combines dense (semantic) and sparse (keyword) search methods to capture both conceptual similarity and specific terminology. Query reformulation techniques can transform user questions into more effective search queries by identifying key entities and intent.

- Reranking: After initial retrieval, a reranking step applies a more computationally intensive model to score and filter the candidate documents, improving precision. Modern rerankers are typically cross-encoders: they read the query and each candidate together and use cross-attention to judge relevance more thoroughly than first-stage retrieval, which typically scores queries and documents from separately computed representations. This step is crucial for reducing noise and ensuring only the most pertinent information reaches the LLM’s context window.

- Context Integration: Retrieved information is formatted into prompts that help the LLM understand how to use the external knowledge effectively. This involves careful prompt engineering to distinguish between retrieved content and instructions, establishing clear expectations for citation, and managing context window limitations. The system must prioritize and position the most relevant information where the model is most likely to utilize it.

- Response Generation: The LLM generates its response based on both its internal knowledge and the retrieved context, ideally synthesizing information coherently and accurately. Post-processing may include fact-checking against retrieved sources, adding citations, and reformatting to meet specific output requirements. Advanced systems implement feedback loops where generation quality informs future retrieval priorities.
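Three of the components above (chunking, hybrid retrieval, and context integration) can be sketched together. The sketch rests on several assumptions: chunks are fixed-size word windows with overlap, the dense and sparse result lists are supplied by hand rather than produced by real retrievers, and the two lists are merged with reciprocal rank fusion, which is one common fusion method and not one the text above prescribes. All function names and the sample text are hypothetical.

```python
def chunk_words(text, size=8, overlap=2):
    """Split text into windows of `size` words; neighbours share `overlap` words."""
    if overlap >= size:
        raise ValueError("overlap must be smaller than size")
    words = text.split()
    if not words:
        return []
    step = size - overlap
    # Stop once a window has reached the final word.
    return [" ".join(words[i:i + size])
            for i in range(0, max(len(words) - overlap, 1), step)]


def reciprocal_rank_fusion(rankings, k=60):
    """Fuse ranked lists of chunk ids; earlier ranks contribute larger scores."""
    scores = {}
    for ranking in rankings:
        for rank, chunk_id in enumerate(ranking, start=1):
            scores[chunk_id] = scores.get(chunk_id, 0.0) + 1.0 / (k + rank)
    # Sort by fused score, breaking ties by id for a stable order.
    return sorted(scores, key=lambda cid: (-scores[cid], cid))


def build_context(chunks, ranked_ids, top_n=2):
    """Number the top chunks so the model can cite them as [1], [2], ..."""
    return "\n".join(f"[{n}] {chunks[cid]}"
                     for n, cid in enumerate(ranked_ids[:top_n], start=1))


text = "Badge requests go to facilities. Laptop requests go to the IT service desk."
pieces = chunk_words(text, size=6, overlap=1)
chunks = {f"c{n}": piece for n, piece in enumerate(pieces, start=1)}

# Hand-written rankings standing in for a dense and a sparse retriever.
dense_ranking = ["c3", "c2", "c1"]
sparse_ranking = ["c2", "c1", "c3"]

fused = reciprocal_rank_fusion([dense_ranking, sparse_ranking])
context = build_context(chunks, fused)
print(context)
```

A reranker would sit between the fusion step and `build_context`, rescoring the fused candidates before the top few are placed in the prompt.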

Advanced RAG Techniques and Architectures

Beyond basic implementations, several advanced RAG approaches have emerged that significantly enhance performance for enterprise applications:

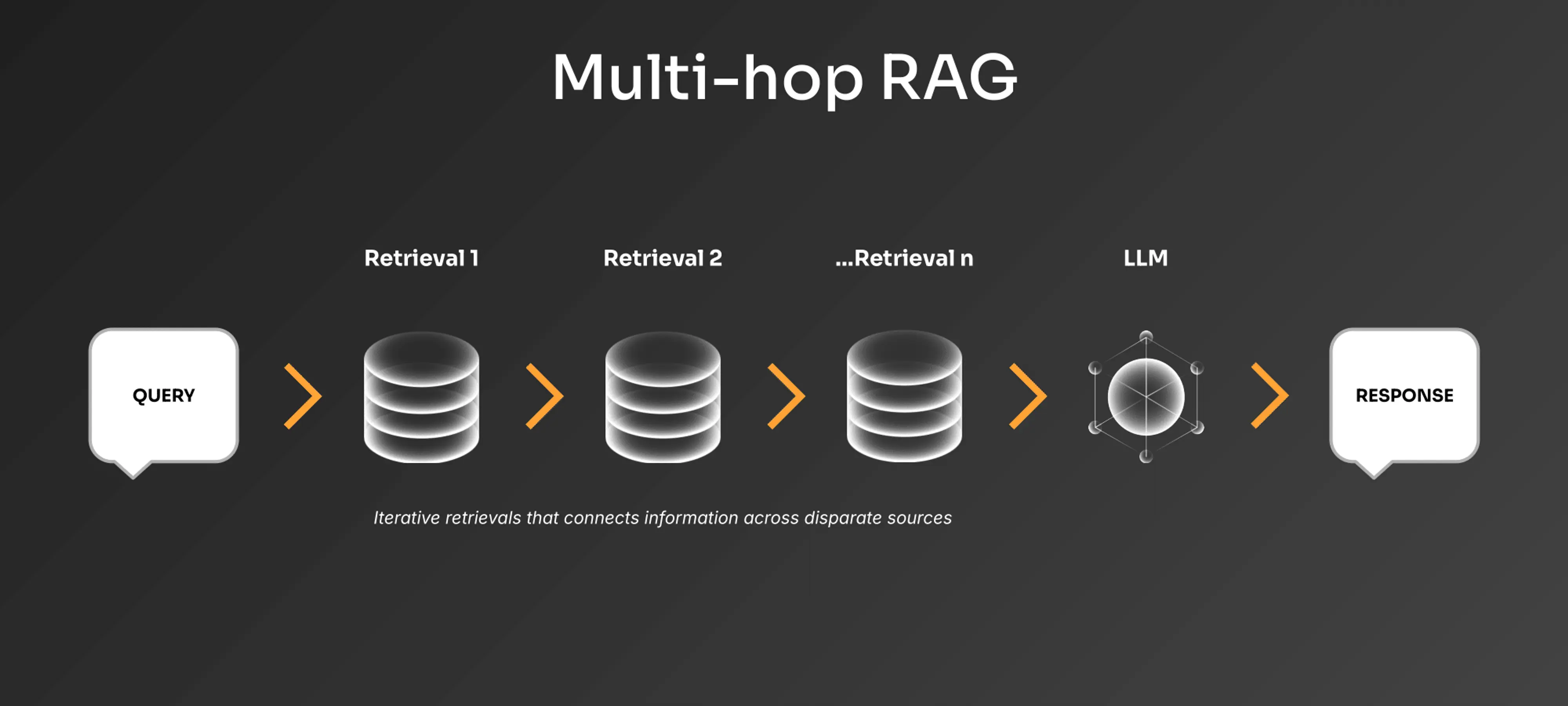

Multi-hop RAG enables systems to follow chains of connected information across multiple documents when a single retrieval pass is insufficient. Unlike basic RAG that retrieves information directly related to the query, multi-hop retrieval can answer questions that require connecting disparate pieces of information. For instance, to answer “Where did the CEO who announced our new sustainability initiative go to college?”, the system might first retrieve documents about the sustainability initiative to identify the CEO, then use that information to find biographical details in different documents.
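The two-hop flow in the CEO example can be sketched as follows. Everything here is illustrative: the corpus and the names in it are invented, word overlap stands in for a real retriever, and the regular expression in `extract_ceo` stands in for the step where a model would read the first hop's evidence and name the entity to look up next.

```python
import re

# Toy corpus with invented names; a real system would query an index.
DOCS = {
    "press": "CEO Dana Reyes announced the new sustainability initiative on Monday.",
    "bio": "Dana Reyes studied chemical engineering at Example State University.",
    "menu": "The cafeteria menu now lists vegetarian options every day.",
}


def tokens(text):
    """Lowercased set of words in the text."""
    return set(re.findall(r"[a-z]+", text.lower()))


def retrieve(query, exclude=()):
    """Return the id of the document sharing the most words with the query."""
    q = tokens(query)
    candidates = [doc_id for doc_id in DOCS if doc_id not in exclude]
    return max(candidates, key=lambda doc_id: len(q & tokens(DOCS[doc_id])))


def extract_ceo(text):
    """Stand-in for the model step that names the entity found in hop one."""
    match = re.search(r"CEO ([A-Z][a-z]+ [A-Z][a-z]+)", text)
    return match.group(1) if match else None


def multi_hop(question):
    """Hop one finds the announcement; hop two searches for the named person."""
    first = retrieve(question)
    name = extract_ceo(DOCS[first])
    if name is None:
        return [first]
    second = retrieve(f"{name} university studied", exclude=(first,))
    return [first, second]


hops = multi_hop(
    "Where did the CEO who announced our new sustainability initiative go to college?"
)
print(hops)
```

The key point is that the second query is built from what the first hop returned, so it cannot be issued until that evidence has been read.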

Self-reflective RAG systems can evaluate their own responses, determine when additional information is needed, and initiate further retrieval steps automatically. They recognize gaps in their knowledge and actively seek to fill them. The model might generate a tentative response, analyze its confidence, then perform targeted retrieval to strengthen weak points.
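The generate, assess, retrieve loop can be sketched as a small control function. This is only a sketch of the control flow: `retrieve`, `generate`, and `assess` are hypothetical callables that a real system would back with a retriever and model calls, and the toy versions below exist only so the example runs.

```python
def self_reflective_answer(question, retrieve, generate, assess,
                           max_rounds=3, threshold=0.8):
    """Draft an answer, score it, and retrieve more context while confidence is low.

    Returns the final draft and the number of retrieval rounds that were used.
    """
    context = []
    for round_no in range(max_rounds + 1):
        draft = generate(question, context)
        confidence, gap_query = assess(question, draft, context)
        if confidence >= threshold or round_no == max_rounds:
            return draft, round_no
        # Targeted retrieval aimed at the gap the assessment identified.
        context.extend(retrieve(gap_query))


# Toy stand-ins (assumptions for the demo, not a real retriever or model).
KB = {"vpn": "VPN access requests are approved by the IT security team."}


def toy_retrieve(query):
    return [text for key, text in KB.items() if key in query.lower()]


def toy_generate(question, context):
    return context[0] if context else "I am not sure."


def toy_assess(question, draft, context):
    # Fully confident only when the draft is backed by retrieved text.
    return (1.0, "") if draft in context else (0.0, question)


answer, rounds = self_reflective_answer(
    "Who approves VPN access?", toy_retrieve, toy_generate, toy_assess
)
print(answer, rounds)
```

Capping the loop with `max_rounds` keeps latency and cost bounded when the assessment never reaches the threshold.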

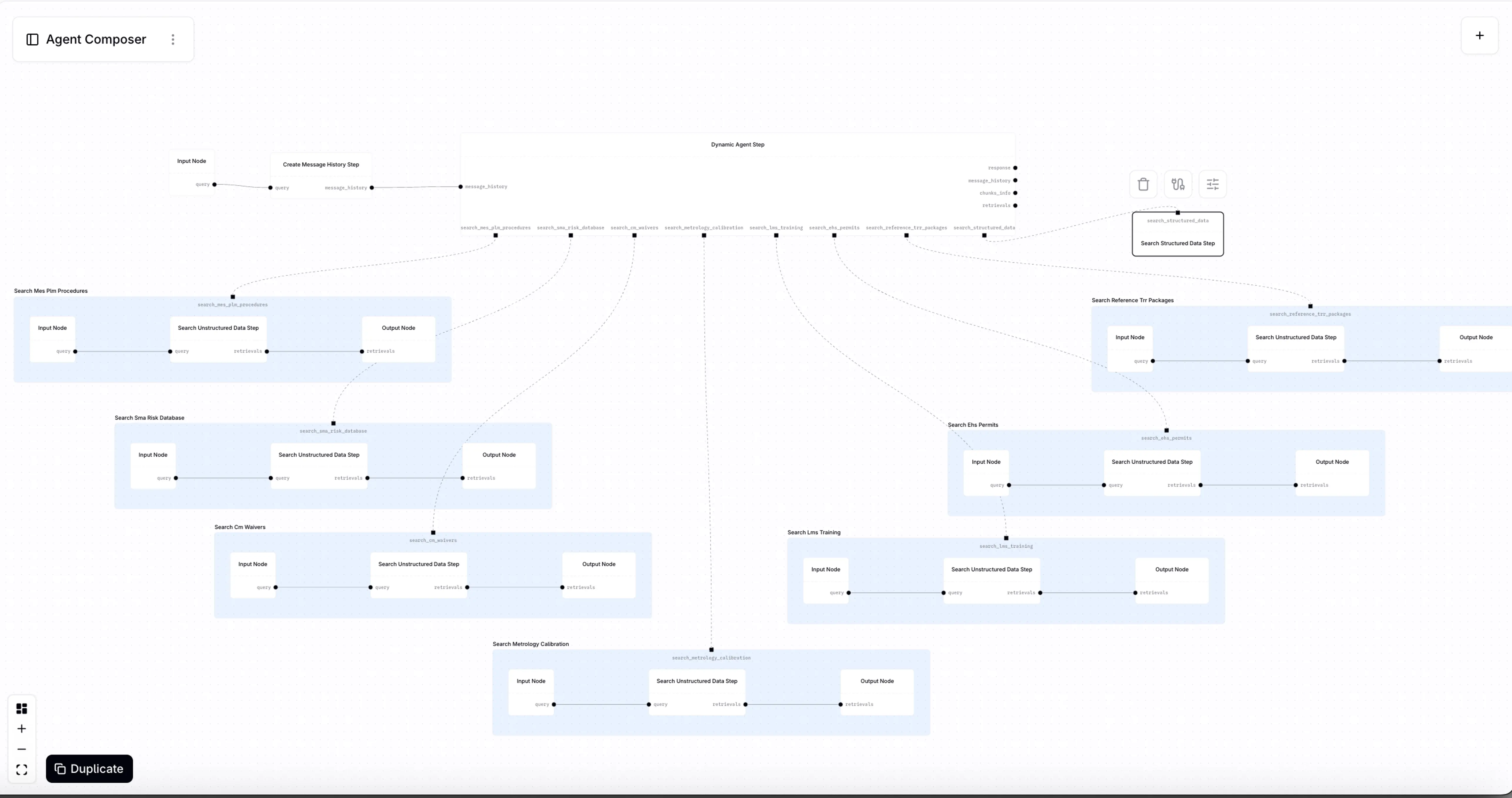

Agentic RAG systems function as autonomous decision-makers that strategically plan and execute complex information retrieval tasks. These systems can dynamically determine what to retrieve, reformulate queries based on initial results, and incorporate diverse tools beyond retrieval. Agentic RAG can independently orchestrate multiple retrieval steps and analysis methods, adapting its approach based on intermediate findings without relying on predefined workflows.

Multimodal RAG extends beyond text to incorporate images, audio, and video, allowing systems to retrieve and reason over diverse data types. An enterprise system might retrieve technical diagrams alongside textual documentation when answering product support questions. These systems require specialized embedding models for different content types and fusion mechanisms that align information across modalities.

Structured data RAG integrates databases, APIs, and other structured information sources, enabling more precise retrieval and reasoning over tabular data. Advanced implementations understand schema relationships and can perform operations like joins, aggregations, and filters implicitly based on natural language queries.
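A minimal sketch of the structured-data path, using Python's built-in `sqlite3` module. The schema, the sample rows, and the function `question_to_sql` are assumptions for illustration; in particular, `question_to_sql` returns a hand-written query where a real system would have a model generate the SQL from the question and the schema.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customers (id INTEGER PRIMARY KEY, region TEXT);
CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL);
INSERT INTO customers VALUES (1, 'EMEA'), (2, 'APAC');
INSERT INTO orders VALUES (1, 1, 120.0), (2, 1, 80.0), (3, 2, 50.0);
""")


def question_to_sql(question):
    """Stand-in for model-generated SQL; the query is fixed for this demo."""
    return (
        "SELECT c.region, SUM(o.amount) AS total "
        "FROM orders o JOIN customers c ON c.id = o.customer_id "
        "GROUP BY c.region ORDER BY c.region"
    )


def rows_as_context(connection, sql):
    """Run the query and return rows as dicts, ready to format into a prompt."""
    cursor = connection.execute(sql)
    columns = [col[0] for col in cursor.description]
    return [dict(zip(columns, row)) for row in cursor.fetchall()]


rows = rows_as_context(conn, question_to_sql("What is the total order value by region?"))
print(rows)
```

The join and aggregation happen in the database, so the model receives a small, exact result set instead of raw tables. A production system would also validate generated SQL and run it with read-only permissions.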

The choice of architecture depends significantly on the specific use case, with different approaches optimized for response accuracy, latency, cost, or compliance requirements.

Common Misconceptions About RAG

Several persistent misconceptions about RAG deserve correction:

“RAG is just search.” While retrieval is a component of RAG, the approach goes far beyond traditional search by intelligently integrating retrieved information into the generative process. Traditional search returns documents for humans to read; RAG feeds information directly to models for reasoning and synthesis. This integration enables emergent capabilities like cross-document reasoning and source-grounded inference that neither search nor standalone LLMs can achieve independently.

“RAG is obsolete with larger context windows.” Even models with 10M token context windows face scalability issues when dealing with enterprise knowledge bases containing terabytes of data. A 10M token context can hold approximately 7,500 pages of text (assuming about 0.75 words per token and 1,000 words per page), while enterprise knowledge bases routinely contain millions of documents. Additionally, research shows that both the efficiency of attention and reasoning performance degrade as context size increases. Models exhibit “context cliffs” where retrieval accuracy drops sharply beyond certain depths.
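The 7,500-page figure can be reproduced with back-of-the-envelope arithmetic. The ratios below are assumptions, not measurements: about 0.75 words per token is a common rule of thumb for English text, 1,000 words per page describes a dense page, and the corpus size is a hypothetical example of "millions of documents".

```python
CONTEXT_TOKENS = 10_000_000
WORDS_PER_TOKEN = 0.75        # rule-of-thumb assumption for English text
WORDS_PER_PAGE = 1_000        # dense page; assumption

pages_in_context = CONTEXT_TOKENS * WORDS_PER_TOKEN / WORDS_PER_PAGE

CORPUS_DOCUMENTS = 1_000_000  # hypothetical knowledge base size
PAGES_PER_DOCUMENT = 10       # hypothetical average length
corpus_pages = CORPUS_DOCUMENTS * PAGES_PER_DOCUMENT

share = pages_in_context / corpus_pages
print(f"{pages_in_context:,.0f} pages fit in context, {share:.3%} of the corpus")
```

Under these assumptions the context window covers well under one percent of the corpus, so some selection step is needed regardless of window size. With a lighter 500 words per page the same window would hold about 15,000 pages, which does not change the conclusion.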

“RAG competes with fine-tuning.” In reality, these approaches complement each other and address different limitations. Fine-tuning improves how models process and reason with information, while RAG expands what information they can access. Fine-tuning a model on domain-specific data can make it more adept at utilizing retrieved information from that domain. Similarly, RAG can compensate for gaps in a fine-tuned model’s knowledge when information changes or expands beyond the fine-tuning dataset.

“RAG is only for text data.” Modern RAG systems can incorporate multimodal data, including images, audio, and structured database records. Specialized embedding models can transform non-textual data into vector representations that can be retrieved alongside or instead of text. The same fundamental RAG architecture applies across data types, with modality-specific adaptations for chunking, embedding, and context integration.

Conclusion

RAG isn’t a temporary fix or a competitor to other approaches such as fine-tuning or longer context windows. It’s a foundational architectural pattern that addresses intrinsic limitations of language models for enterprise use cases. As AI systems become more deeply integrated into business operations, the ability to reliably access, reason over, and cite specific information from proprietary data sources will remain essential.

At Contextual AI, we’re focused on helping AI teams build production-grade RAG systems. Built by the original researchers who developed RAG at Meta FAIR, our platform tightly integrates and orchestrates state-of-the-art RAG components as a unified system, removing the need to cobble together disparate models that yield suboptimal results. For teams requiring more customization and flexibility, the platform also provides powerful primitives—including agentic document parsing, instruction-following reranking, grounded generation, and comprehensive LLM evaluation—to meet any set of enterprise requirements.

Get started with the platform for free and see how easy it is to contextualize AI applications and agents with your data!
