We’re excited to share several new platform features that simplify data ingestion, expand metadata support, and improve both agent development and the end-user experience.

Securely scale document ingestion with connectors

Developing specialized agents requires connecting large amounts of enterprise data from multiple sources. This introduces several challenges including:

- Engineering overhead: Building and maintaining API integrations with many data sources is challenging.

- Security gaps: Many solutions fail to retain the permissions of ingested documents, and often demand broad, unnecessary access to all documents.

- Scale limitations: Many solutions restrict document type, size, and quantity, which makes ingestion harder.

- Data staleness: Infrequent and manual data syncs prevent datastores and agents from being completely up to date.

Introducing a new way to ingest data: prebuilt connectors

Ingesting data from third-party sources just got easier. You can now sync data directly from external systems such as Google Drive and Microsoft SharePoint. With just a few clicks, you can connect, authenticate, and select the folders to ingest into a datastore that respects existing permissions.

We’re launching Connectors with Google Drive and SharePoint today, with OneDrive, Box, Dropbox, Confluence, and Salesforce coming soon!

How it works:

Start by creating a datastore, choosing from a gallery of connectors, and authenticating with your credentials.

Our connectors provide you with full control over the data you want to ingest. You can choose to ingest either your entire drive or select specific folders.

After setting up a connector, you will be able to monitor the syncing progress. Connectors support auto-syncing, and you can also manually trigger syncs to pull in changes faster.

How connectors help with data ingestion security, scale and freshness

Connectors give customers another way to bring in data, alongside direct uploads and the API.

Connectors provide additional benefits including:

- Inherited permissions: Enforce existing document access controls at the user and group level at query time

- Granular document selection: Ingest only what you need by choosing what’s synced at the folder level

- Always-fresh data: Get data updates every 3 hours with regular autosyncs or trigger an instant refresh on demand

- Operate at scale: No limits to total datastore size, individual file size, or file count
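
To make the idea of inherited permissions concrete, here is a minimal sketch of a query-time access check. It is not the platform's implementation: the `Document` class, its `allowed_users` and `allowed_groups` fields, and the `visible_documents` helper are hypothetical names chosen for illustration, assuming each ingested document keeps the user and group access list from its source system.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Document:
    """Hypothetical ingested document carrying the ACL copied from its source."""
    doc_id: str
    allowed_users: frozenset = field(default_factory=frozenset)
    allowed_groups: frozenset = field(default_factory=frozenset)


def visible_documents(docs, user, user_groups):
    """Return only the documents this user may see, checked at query time."""
    groups = set(user_groups)
    return [
        d for d in docs
        if user in d.allowed_users or groups & d.allowed_groups
    ]


docs = [
    Document("roadmap", allowed_users=frozenset({"ana"})),
    Document("handbook", allowed_groups=frozenset({"staff"})),
    Document("payroll", allowed_groups=frozenset({"finance"})),
]

# "ana" is named on the roadmap and belongs to the "staff" group.
result = visible_documents(docs, user="ana", user_groups=["staff"])
print([d.doc_id for d in result])  # ['roadmap', 'handbook']
```

Checking at query time rather than at ingestion means that a change in group membership takes effect on the next query, without re-ingesting the documents.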

Give connectors a try by spinning up an agent and ingesting documents in minutes.

Custom metadata is now available

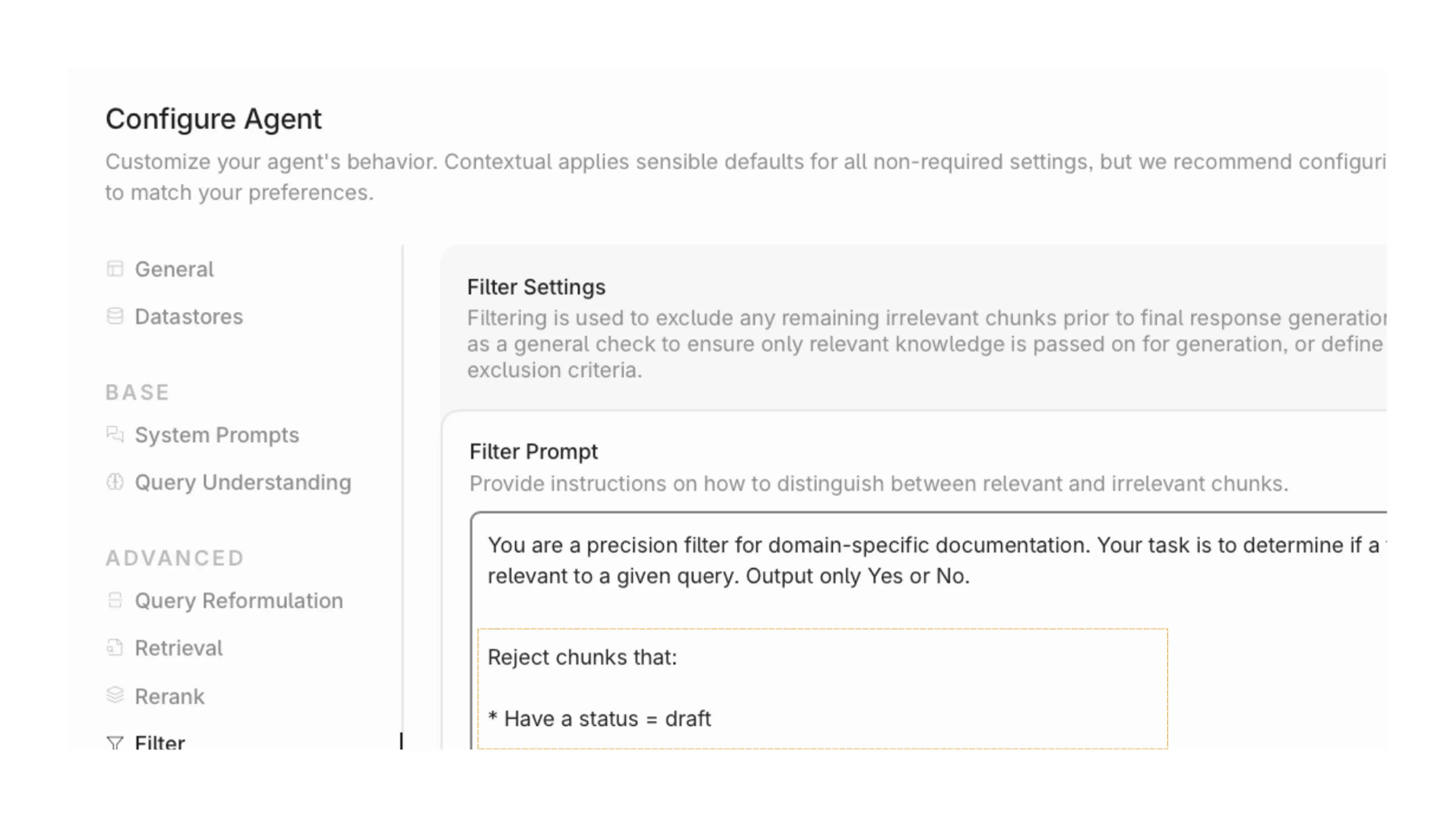

Developers can now add up to 50 custom metadata fields at ingestion using our API, enabling more powerful customization of agent configurations, retrieval instructions and generated content.

- Deterministic filtering: Easily search large volumes of documents with specific metadata attributes

- Quality outputs: Add rich details to generated responses, such as document categories or important attributes like links to source documents

- More relevant retrieval: Use metadata attributes to add more specificity to reranking prompts

Figure 1: Metadata being referenced for more specific filtering.

This metadata expansion enables greater customization of agents, documents and the query path based on use-case specific needs.
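
As a rough illustration of deterministic filtering, the sketch below attaches custom metadata to documents and then selects them by exact attribute match. It does not call the Contextual AI API; `validate_metadata`, `filter_by_metadata`, and the document dictionaries are hypothetical, and the only detail taken from this announcement is the limit of 50 custom fields.

```python
MAX_CUSTOM_FIELDS = 50  # limit stated in this announcement


def validate_metadata(metadata):
    """Reject metadata with more custom fields than the stated limit."""
    if len(metadata) > MAX_CUSTOM_FIELDS:
        raise ValueError(
            f"{len(metadata)} custom fields exceeds the limit of {MAX_CUSTOM_FIELDS}"
        )
    return dict(metadata)


def filter_by_metadata(documents, **required):
    """Keep documents whose metadata matches every required key/value exactly."""
    return [
        doc for doc in documents
        if all(doc["metadata"].get(k) == v for k, v in required.items())
    ]


# Hypothetical documents; the field names are illustrative only.
documents = [
    {"name": "q1-report.pdf",
     "metadata": validate_metadata({"category": "finance", "year": 2024})},
    {"name": "onboarding.pdf",
     "metadata": validate_metadata({"category": "hr", "year": 2024})},
    {"name": "q1-2023.pdf",
     "metadata": validate_metadata({"category": "finance", "year": 2023})},
]

matches = filter_by_metadata(documents, category="finance", year=2024)
print([doc["name"] for doc in matches])  # ['q1-report.pdf']
```

Because the match is exact rather than similarity-based, the same filter always returns the same set of documents, which is what makes it deterministic.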

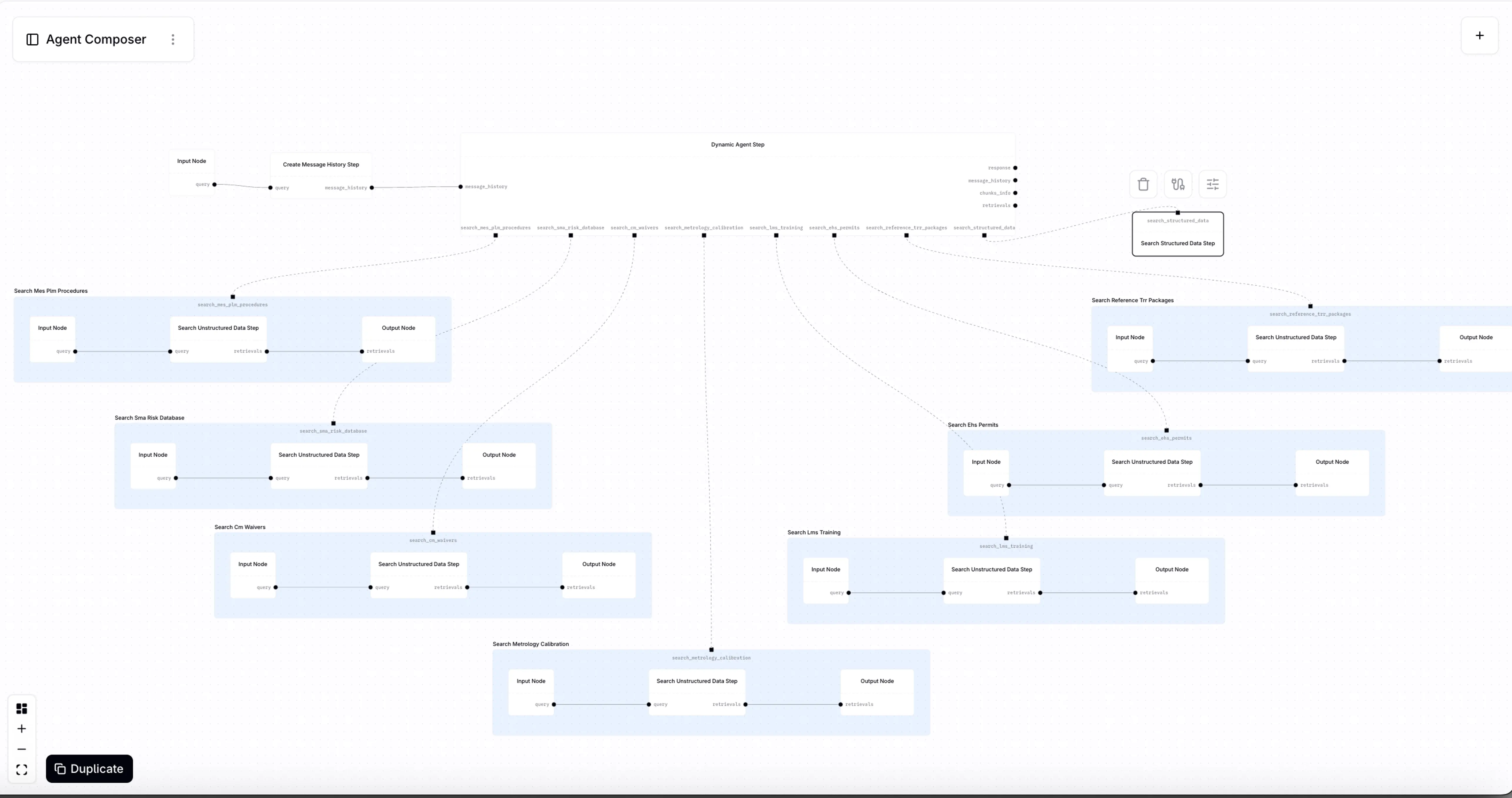

Improved agent development experience

As the platform has evolved, we have added a number of new features that give developers more control over agent development and end-user experiences.

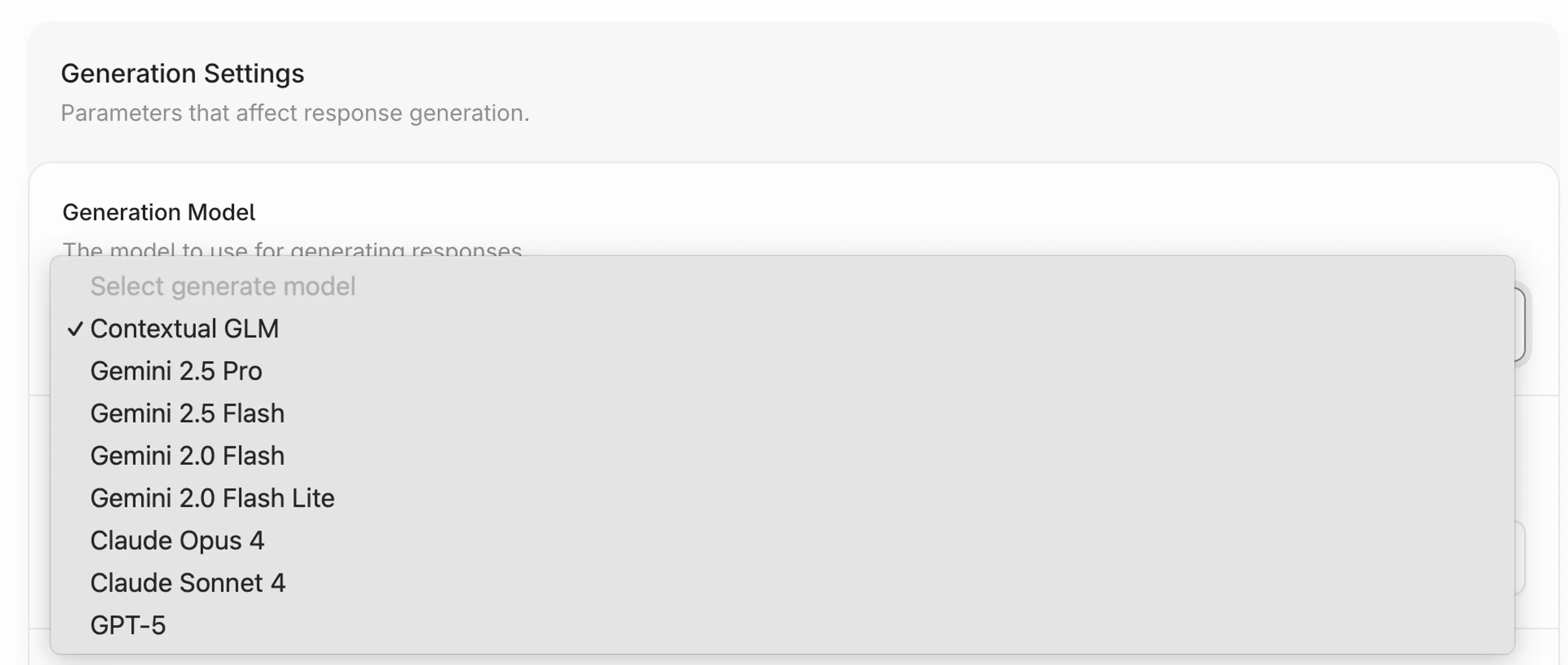

Third-party LLM selection: Contextual AI now supports third-party LLMs, such as GPT-5, Claude Opus 4, and Gemini 2.5 Pro. Select the LLM best suited to your specific use case while still leveraging the powerful capabilities of our unified context layer, all without the administrative burden of creating and maintaining multiple accounts.

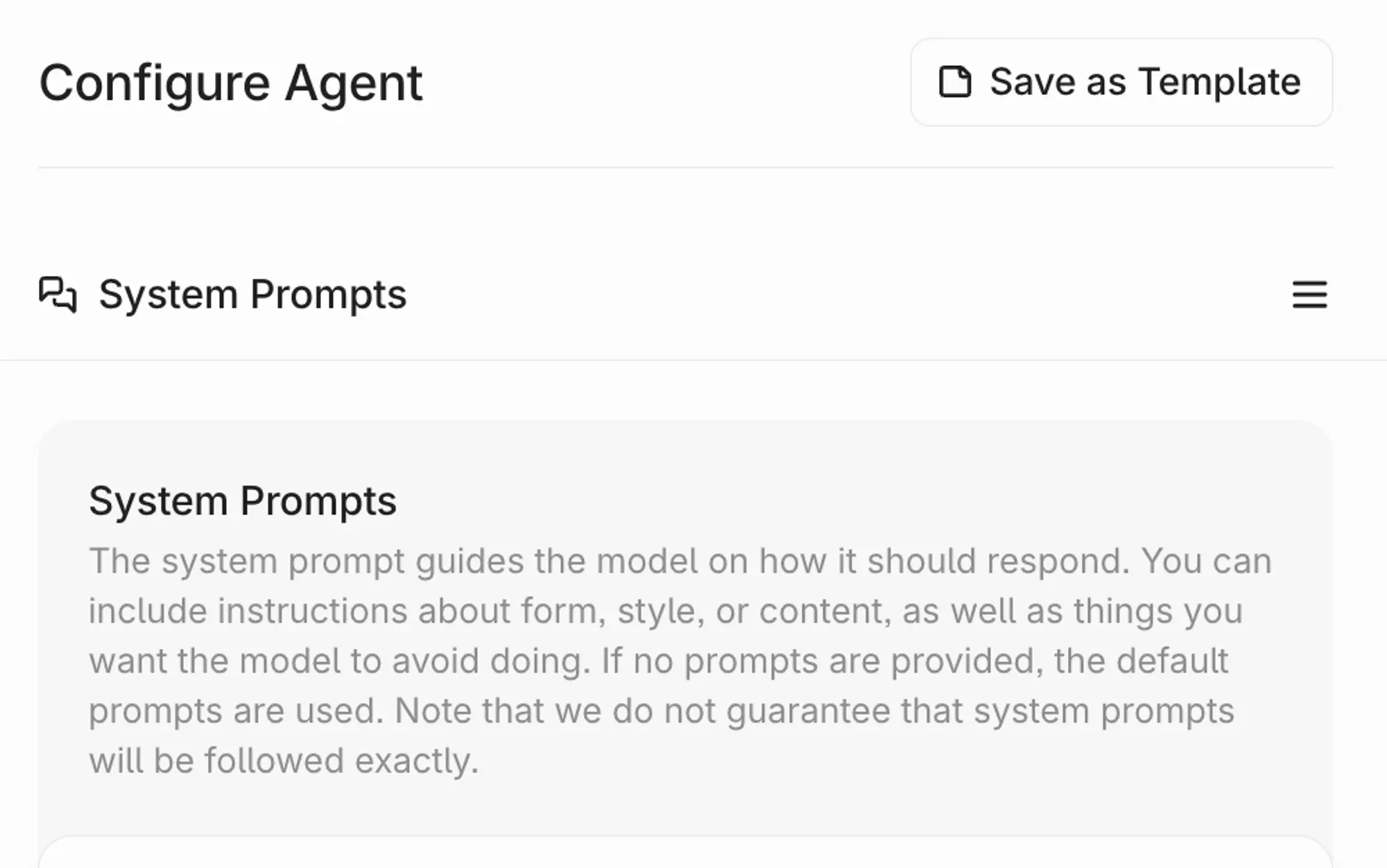

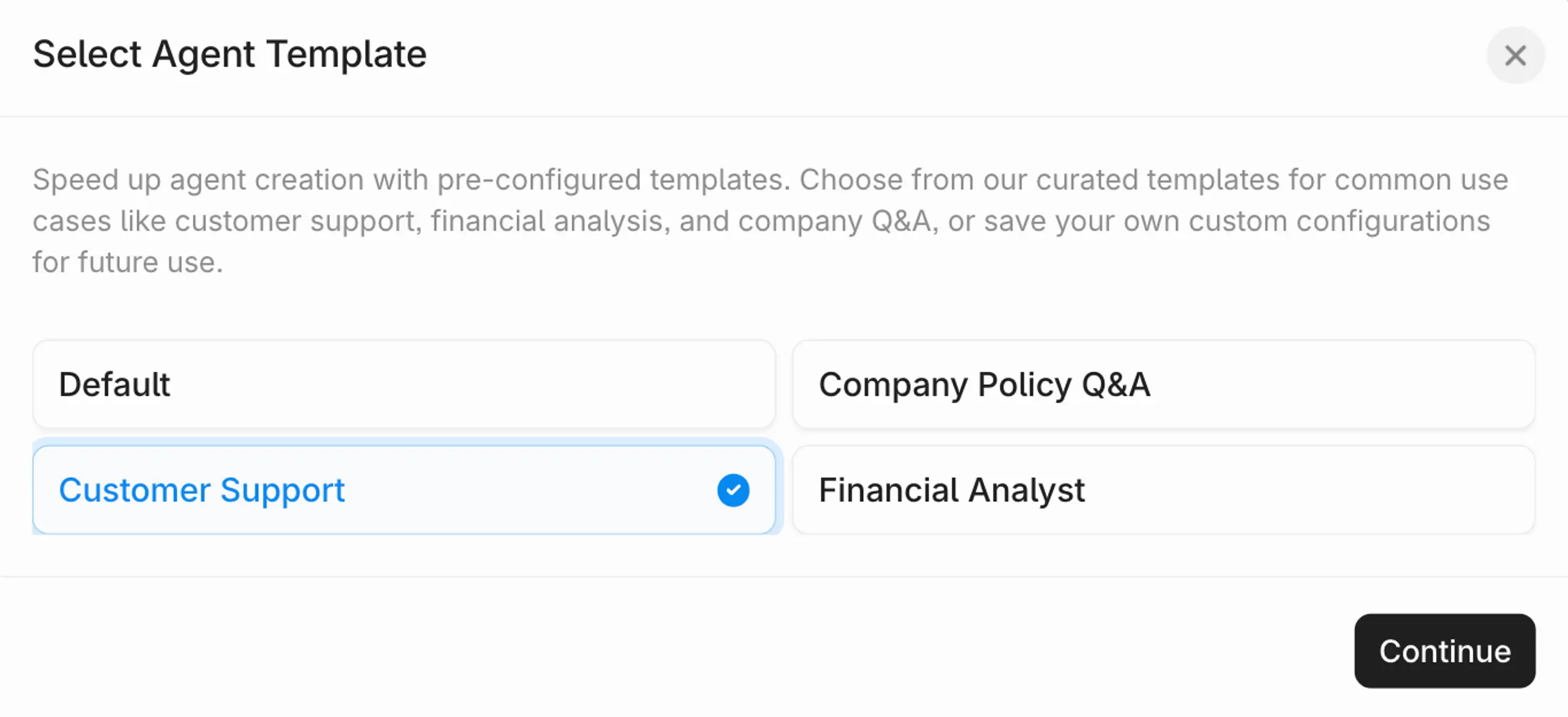

Agent templates: Create and customize agents more quickly using reusable templates. With templates you can save configurations for:

- System prompts

- Retrieval settings (number of retrieved chunks, lexical and semantic retrieval weights)

- Reranker and filter prompts

- Query response settings (LLM selection, max tokens, temperature, etc.)

- Suggested queries

The platform comes pre-loaded with templates for three common use cases, which use tested configurations to accelerate new agent development.

Users can save their own configurations directly in the UI or through the API.
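
One way to picture a template is as a plain, serializable bundle of the settings listed above. The sketch below is an assumption-laden illustration, not the platform's schema: the `AgentTemplate` class, its field names, and every default value are hypothetical, and saving to JSON stands in for whatever the UI or API does internally.

```python
import json
from dataclasses import dataclass, field, asdict, replace


@dataclass
class AgentTemplate:
    """Hypothetical template; field names and defaults are illustrative only."""
    system_prompt: str
    num_retrieved_chunks: int = 10
    lexical_weight: float = 0.3
    semantic_weight: float = 0.7
    reranker_prompt: str = ""
    filter_prompt: str = ""
    llm: str = ""
    max_tokens: int = 1024
    temperature: float = 0.0
    suggested_queries: list = field(default_factory=list)

    def to_json(self):
        """Serialize the template so it can be stored and reused."""
        return json.dumps(asdict(self), indent=2)

    @classmethod
    def from_json(cls, text):
        """Rebuild a template from its saved JSON form."""
        return cls(**json.loads(text))


base = AgentTemplate(
    system_prompt="Answer using only the retrieved documents.",
    suggested_queries=["What changed in the latest policy update?"],
)

# Round-trip through JSON, then derive a variant without mutating the base.
restored = AgentTemplate.from_json(base.to_json())
variant = replace(restored, temperature=0.2, num_retrieved_chunks=20)

print(restored == base)     # True
print(variant.temperature)  # 0.2
```

Deriving a variant with `replace` leaves the saved template untouched, which mirrors the idea of starting each new agent from a tested configuration and adjusting only what differs.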

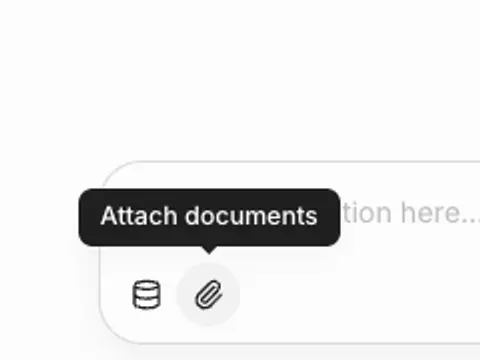

Ad-hoc document uploads: Users can now add any document to an attached datastore with the upload button, without leaving the chat interface.

Message history: The agent user experience is now personalized and enriched with a saved history of prior conversations.

Conclusion

Ready to try these new features? Log in to your Contextual AI account and start a free trial to quickly ingest data, customize queries and reranking with metadata, and build agents faster from saved templates.
