Why Diagnostic Metrics Matter for Agent Evaluations at Scale

If you have ever tried to set up AI agent evaluations at scale, you have probably seen how quickly LLM-as-a-Judge becomes insufficient once you grow beyond roughly 100 initial test cases.

At Contextual AI, our frequent encounters with these limitations motivated us to build a diagnostic evaluation metric on top of LMUnit, one that takes a scalable, granular unit-testing approach at the output level.

Challenges of evaluating AI Agents at Scale

While great for initial evaluation, LLM-as-a-Judge setups become expensive and hard to manage, and they lose descriptive value as you scale.

- Binary scoring creates false negatives and hides nuance: Binary 0/1 scoring works for simple checks but collapses under real-world complexity: a 0 can mean either a 95% correct or a 5% correct answer, making it impossible to see when a system is “almost there,” to compare model versions, or to prioritize failure modes. Gold answers are treated as the only correct answer, even though they often include non-essential details, forcing teams to manually separate must-have from nice-to-have information and causing valid alternative answers to be marked wrong.

- Manual maintenance becomes a full-time job: As models, domains, and requirements evolve, teams must continually rewrite LLM-as-a-Judge prompts to counter model drift and new biases (e.g., positional or length preferences), while prompt frameworks, gold answers, and annotation guidelines all drift with changing business requirements. This manual upkeep is magnified as small eval suites grow into thousands of test cases across domains like finance, hardware, and medical, with annotators relying on complex, evolving guidelines that are costly to maintain and keep consistent.

- No diagnostic insight into failures: Even when the judge returns a score and rationale, pass/fail-style feedback rarely explains whether a response missed key facts, hallucinated, was incomplete, or violated formatting/domain constraints - creating an interpretation gap where teams must do manual forensics on individual failures. Without a structured, quantitative breakdown of where and how badly a response failed, it’s hard to prioritize which issues matter most, slowing iteration and making it difficult to turn evaluation results into systematic model improvements.

Our diagnostic metric solution: WeightedLMUnitScore

To address these challenges of diagnosing quality issues at scale, we built the WeightedLMUnitScore metric. It aggregates LMUnit’s unit-level scores into one metric that is continuous, explainable, and configurable for every use case.

How unit tests provide quick error visibility

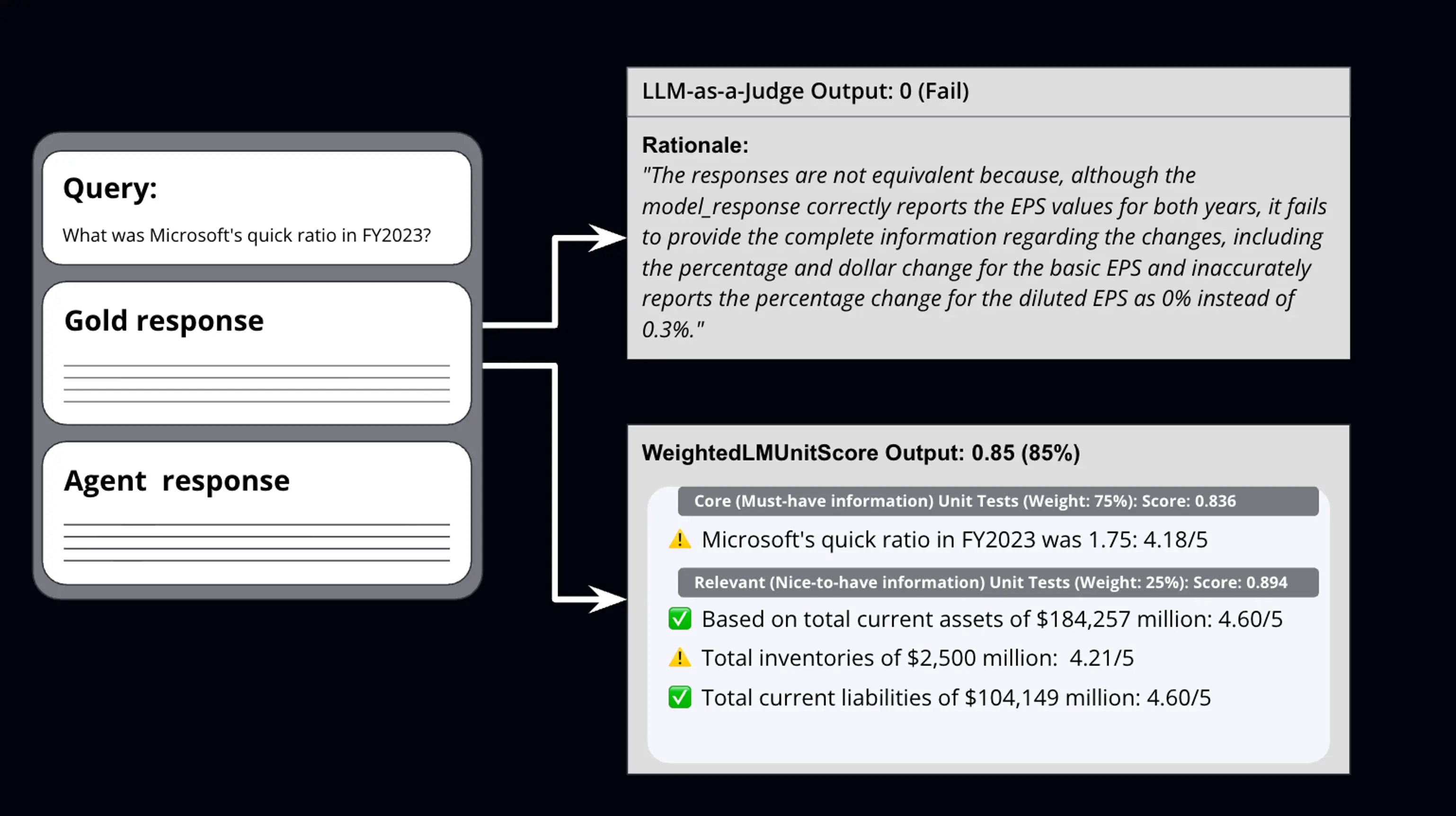

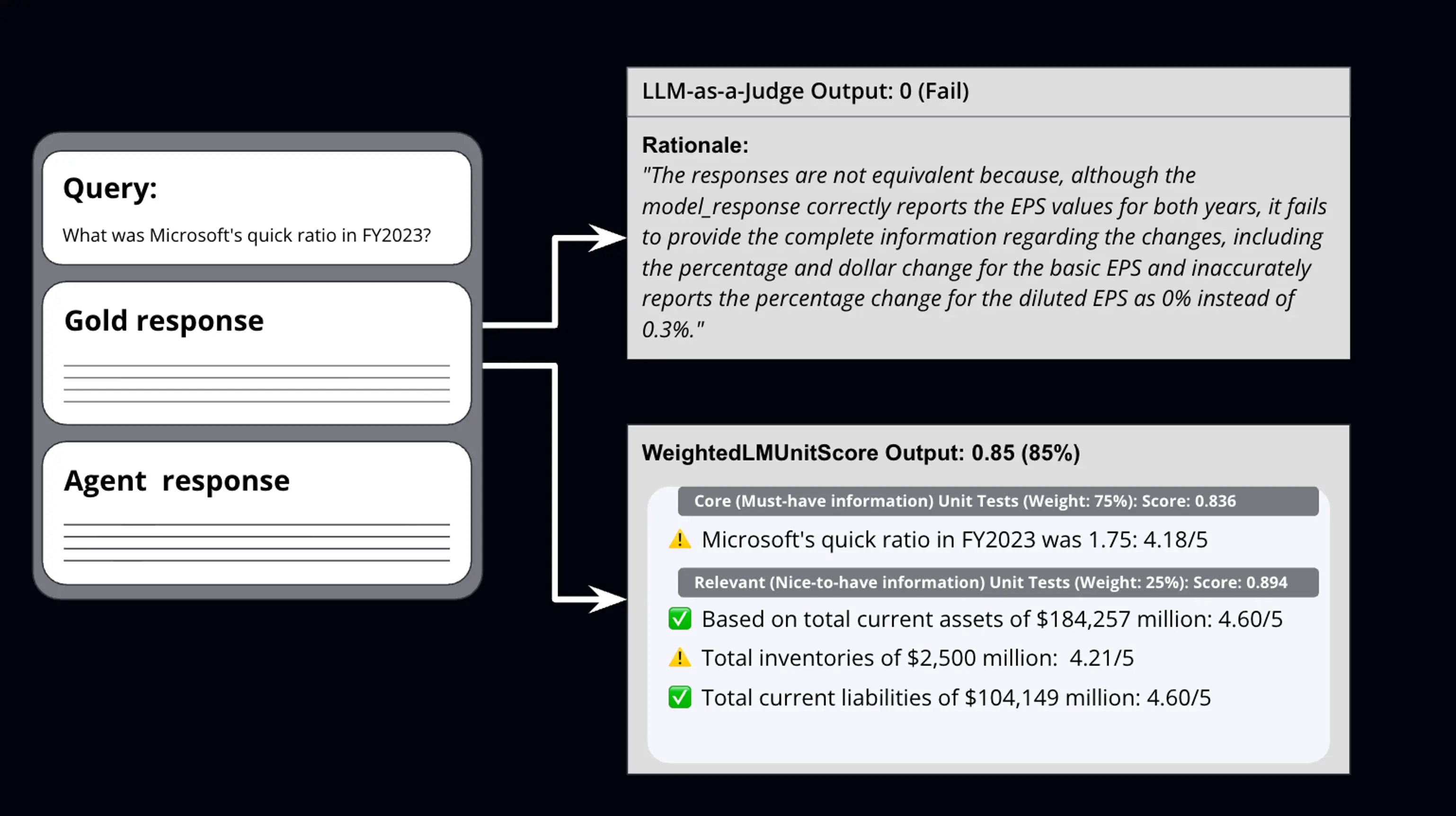

While LLM-as-a-Judge requires developers to manually sift through feedback, a unit-test-based approach makes it easy to see which aspects of the gold answer a response covered and exactly which ones it missed. As the accompanying images show, a unit-testing approach breaks the gold answer down into unit tests and tells you at a glance what was right and what was wrong, whereas LLM-as-a-Judge requires reading a long rationale to find the signal.

The unit test scoring is powered by LMUnit, our state-of-the-art language model optimized for scoring unit tests, which functions as our reward model internally. LMUnit supports two types of tests: those based on gold answers and those based on general criteria. This blog focuses specifically on tests that compare responses against known gold answers. To learn more about creating general criteria-based tests (e.g., for formatting or safety), check out the notebook linked at the end of this article.

Weighing scores instead of constantly updating criteria

WeightedLMUnitScore assesses how well AI-generated responses match high-quality reference answers by breaking gold answers down into testable components and assigning weighted importance to different pieces of information. It takes the prompt, the gold answer, and the agent response as input. The score works in three stages: decomposing the gold answer into unit tests, scoring each individual unit test, and finally combining the scores into a weighted average according to your specific requirements.

WeightedLMUnitScore separates “must-have” from “nice-to-have” information in the gold answer:

- Core Information Unit Tests: must-have information, meaning the essential facts, concepts, or data required to adequately answer the query. Missing most or all of these means the response fundamentally fails to address the question.

- Relevant Information Unit Tests: nice-to-have details, meaning additional context, examples, or supplementary information that enhances quality but is not strictly necessary for a correct answer.

After the Decomposition Stage comes the Evaluation Stage, in which LMUnit evaluates the agent response against each unit test and assigns each one a score from 1 to 5. The final WeightedLMUnitScore is then computed as a weighted combination:

Final Score = 0.75 × [Sum of Core Unit Scores / (5 × Number of Core Unit Tests)] + 0.25 × [Sum of Relevant Unit Scores / (5 × Number of Relevant Unit Tests)]

The default 75/25 weighting of core to relevant information, which we also used for our eval set, reflects the higher importance of core information while still rewarding comprehensive responses. We treat an answer as correct if its WeightedLMUnitScore is at least a chosen threshold; in our experiments, 0.7 worked best.
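The formula and threshold above can be sketched in a few lines of Python. This is a minimal illustration rather than the released implementation: the function names `normalized_mean`, `weighted_lmunit_score`, and `is_correct` are hypothetical, the unit scores are made up, and rejecting an empty group of unit tests is an assumption, since the article does not say how that case is handled.

```python
from typing import Sequence

MAX_UNIT_SCORE = 5  # LMUnit scores each unit test on a 1-5 scale


def normalized_mean(scores: Sequence[float]) -> float:
    """Sum of unit scores divided by (5 * number of unit tests)."""
    return sum(scores) / (MAX_UNIT_SCORE * len(scores))


def weighted_lmunit_score(
    core_scores: Sequence[float],
    relevant_scores: Sequence[float],
    core_weight: float = 0.75,
) -> float:
    """Combine core and relevant unit-test scores into a single value."""
    if not core_scores or not relevant_scores:
        # Assumption: the article does not specify how an empty group is handled.
        raise ValueError("both groups need at least one unit test")
    if not 0.0 <= core_weight <= 1.0:
        raise ValueError("core_weight must be between 0 and 1")
    return (
        core_weight * normalized_mean(core_scores)
        + (1.0 - core_weight) * normalized_mean(relevant_scores)
    )


def is_correct(score: float, threshold: float = 0.7) -> bool:
    """Treat a response as correct when its score reaches the threshold."""
    return score >= threshold


# Hypothetical scores for one response: three core tests, two relevant tests.
score = weighted_lmunit_score([5, 4, 5], [3, 2])
print(round(score, 3), is_correct(score))  # 0.825 True
```

Here the core tests contribute 0.75 × 14/15 = 0.70 and the relevant tests 0.25 × 5/10 = 0.125. Because LMUnit's scale starts at 1, the formula as written bottoms out at 0.2 when every unit test receives the lowest score.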

Continuous scoring eliminates false negatives:

WeightedLMUnitScore replaces binary, all-or-nothing penalties with continuous scoring on a 0–1 scale, built up from unit-test-level scores, so that “almost right” is easy to differentiate from “completely wrong.” A continuous scale also makes it much easier to measure actual performance and detect granular improvements.

- Richer, continuous scoring instead of binary gatekeeping: LMUnit scores each unit test on a 1–5 scale (not 0/1), so “95% correct” answers can get 4.75 instead of 0, and WeightedLMUnitScore aggregates these into a 0–1 metric (e.g., a financial agent with one small calculation error might score 0.85), clearly distinguishing “high-quality with a minor flaw” from “unusable.”

- Built-in signal for incremental improvement: Because scores are continuous, teams can track progress over time (e.g., 0.68 → 0.82 → 0.96 on an eval suite) and see whether changes are actually moving the model in the right direction and by how much, directly replacing binary pass/fail with a granular, diagnostic signal of gradual improvement.

Parameter tuning replaces prompt maintenance:

- Tune evaluation to your domain, not one-size-fits-all: WeightedLMUnitScore lets you configure how much core accuracy vs. relevant extras matter (e.g., 90/10 for medical systems, 60/40 for creative assistants), so the same framework works across very different use cases.

- Set clear, adjustable pass/fail standards: Instead of constantly rewriting judge prompts, you set thresholds that match your bar (e.g., 0.9 for compliance, 0.7 for conversational), then simply tweak weights and thresholds as requirements evolve.

- Scale without drift through structured, deterministic scoring: Gold answers are treated as configurable criteria rather than static truth, so you can reweight as needs change; each eval decomposes into independent, parallel unit tests that scale linearly, and deterministic scoring removes LLM scoring drift by ensuring the same response always gets the same score.
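As a rough sketch of what tuning parameters instead of prompts looks like, the snippet below applies two configurations to the same unit-test results. The `EvalConfig` class and the `combine` helper are hypothetical names, and pairing the 90/10 weighting with a 0.9 threshold (and 60/40 with 0.7) is an assumption made for illustration; the text gives those ratios and thresholds only as separate examples.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EvalConfig:
    core_weight: float  # share given to core (must-have) unit tests
    threshold: float    # minimum score counted as a pass


# Illustrative settings only; the weight/threshold pairings are assumptions.
CONFIGS = {
    "medical": EvalConfig(core_weight=0.90, threshold=0.9),
    "creative": EvalConfig(core_weight=0.60, threshold=0.7),
}


def combine(core_avg: float, relevant_avg: float, cfg: EvalConfig) -> float:
    """Weighted combination of already-normalized (0-1) group averages."""
    return cfg.core_weight * core_avg + (1.0 - cfg.core_weight) * relevant_avg


# Same unit-test results, evaluated under both configurations.
core_avg, relevant_avg = 0.8, 1.0
results = {name: combine(core_avg, relevant_avg, cfg) for name, cfg in CONFIGS.items()}

for name, value in results.items():
    passed = value >= CONFIGS[name].threshold
    print(f"{name}: score={value:.2f} pass={passed}")
```

With these made-up numbers the response scores 0.82 under the stricter configuration and fails its 0.9 bar, while scoring 0.88 under the looser one and passing, without any change to the unit tests or to a judge prompt.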

Multi-Criteria breakdown enables diagnosis

WeightedLMUnitScore doesn't just score responses; it shows you exactly where they succeed or fail, turning evaluation into a diagnostic tool.

- Separate scores per dimension: Get individual scores for core accuracy, relevance, compliance, and formatting, so you instantly see that a response might pass factual accuracy (4/5) but fail relevance (1/5).

- Identify specific failure patterns: A 0.72 score reveals exactly which unit tests failed (missing regulatory requirements, outdated statistics, or formatting violations), so you detect patterns like "frequent incompleteness on edge cases" instead of guessing from scattered examples.

- Prioritize fixes by impact: Each failed unit test provides a direct improvement path, and weight-based prioritization lets teams focus on fixes with the highest score impact.

- Reduce per-example diagnostic time: Where LLM-as-a-Judge setups often required 2–4 minutes of manual review per failed example, the structured unit-test breakdown now surfaces root causes in well under 1 minute, freeing teams to spend more time on fixes instead of forensics.
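One way to turn per-test results into the failure patterns and priorities described above is to aggregate failed unit tests across the eval suite. The sketch below is an assumption-heavy illustration: the `UnitResult` fields, the category tags, the "below 3 counts as failed" cutoff, and the impact proxy (points lost, scaled by the group's weight) are all hypothetical and not part of LMUnit or the released notebook.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class UnitResult:
    example_id: str
    category: str  # hypothetical failure tag attached to the unit test
    group: str     # "core" or "relevant"
    score: int     # LMUnit score, 1-5


FAIL_BELOW = 3  # Assumption: scores under 3 count as a failed unit test.
GROUP_WEIGHT = {"core": 0.75, "relevant": 0.25}

results = [
    UnitResult("ex1", "missing_regulation", "core", 1),
    UnitResult("ex1", "formatting", "relevant", 2),
    UnitResult("ex2", "missing_regulation", "core", 2),
    UnitResult("ex2", "outdated_statistic", "relevant", 1),
    UnitResult("ex3", "formatting", "relevant", 5),
]

failure_counts = Counter()
weighted_impact = Counter()
for r in results:
    if r.score < FAIL_BELOW:
        failure_counts[r.category] += 1
        # Rough proxy: fraction of points lost, scaled by the group's weight.
        weighted_impact[r.category] += GROUP_WEIGHT[r.group] * (5 - r.score) / 5

# Categories sorted from highest to lowest weighted impact.
for category, impact in weighted_impact.most_common():
    print(f"{category}: {failure_counts[category]} failures, impact {impact:.2f}")
```

On this toy data, `missing_regulation` comes out on top because it fails twice on core tests, which is the kind of ranking a team could use to decide what to fix first.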

How we validated this approach

Before recommending WeightedLMUnitScore, we needed to prove that its evaluation quality was at least as good as a strong LLM-as-a-Judge system. In other words: if you swapped in WeightedLMUnitScore, would you keep the same level of agreement with human judgments while also gaining the new diagnostic benefits?

Experiment Design

To ensure that WeightedLMUnitScore introduced no regressions, we ran a controlled comparison on a fixed evaluation set of 400 test cases, scoring each example with:

- A binary LLM-as-a-Judge setup

- The continuous WeightedLMUnitScore system

For WeightedLMUnitScore, we used default settings: a 75/25 split between core and relevant information. We then converted the continuous scores to binary labels using a threshold (≥ 0.7) so we could compare directly to human 0/1 labels. Human annotators were blind to automated scores to avoid bias.

The eval set was not tied to a single domain; it was built to test how well automated metrics match humans across different types of tasks, spanning finance, hardware, and compliance. Each of the 400 examples paired a triplet with a human label and included:

- the prompt

- the gold_answer

- the agent_response

- a human 0/1 label (0 = bad, 1 = good), based on query fulfillment, grounding, and formatting.

Setting the baseline

Our baseline was a carefully engineered LLM-as-a-Judge prompt, built with a curriculum-inspired framework, that:

- used step-by-step reasoning,

- enforced semantic equivalence between gold and agent responses,

- included explicit negation checks, and

- showed high agreement with human labels.

This baseline represents our best optimized LLM-as-a-Judge setup, but one that needs constant prompt updates as requirements evolve. WeightedLMUnitScore was run on the same set using only its default weight configuration.

Results

WeightedLMUnitScore matched, and slightly exceeded, the binary judge on agreement with human labels, meaning that we could use WeightedLMUnitScore without fear of regressions. At a 0.7 threshold for converting continuous scores to binary:

- LLM-as-a-Judge agreement with humans: 86.5% (346 / 400)

- WeightedLMUnitScore agreement with humans: 87.0% (348 / 400)

- Improvement: +0.5 percentage points
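The agreement figures above come from thresholding the continuous score and comparing the result to the human 0/1 label. A minimal sketch of that computation follows; the `agreement_rate` function is a hypothetical helper, and the five scores and labels are toy data, not drawn from the 400-example eval set.

```python
from typing import Sequence


def agreement_rate(
    scores: Sequence[float],
    human_labels: Sequence[int],
    threshold: float = 0.7,
) -> float:
    """Fraction of examples where the thresholded score equals the human label."""
    if len(scores) != len(human_labels):
        raise ValueError("scores and labels must have the same length")
    if not scores:
        raise ValueError("need at least one example")
    predicted = [1 if s >= threshold else 0 for s in scores]
    matches = sum(p == h for p, h in zip(predicted, human_labels))
    return matches / len(scores)


# Toy data for illustration only.
scores = [0.92, 0.71, 0.65, 0.40, 0.88]
human = [1, 1, 1, 0, 1]
print(agreement_rate(scores, human))  # 0.8
```

The third example (0.65 against a human label of 1) is the only disagreement here, giving 4 of 5 matches. Sweeping `threshold` over a range of values is one simple way to check which cutoff agrees best with human labels on your own data.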

So in practice, WeightedLMUnitScore performed as well as our best LLM-as-a-Judge prompt in terms of accuracy, while requiring far less custom engineering and manual failure analysis. Beyond raw agreement, WeightedLMUnitScore told us exactly which unit tests failed for each response, providing nuanced, actionable insights into what needed fixing – something the binary baseline could not offer.

Conclusion

Traditional LLM-as-a-Judge evaluation forces teams to choose between binary pass/fail scoring that misses nuance and complex judge prompts that require constant maintenance as requirements evolve. WeightedLMUnitScore solves both: continuous scoring captures incremental improvements while configurable weights and thresholds adapt to different domains without prompt rewrites, and multi-criteria breakdowns show exactly which unit tests failed for actionable diagnostics.

We've released a public notebook that walks through implementing this approach on your own eval sets. It shows how to decompose gold answers into unit tests and plug the LMUnit model from the Contextual AI API directly into your existing evaluation pipeline. We also provide our LLM-as-a-Judge prompt in the notebook. You can read more about LMUnit here.

Acknowledgements:

We would like to thank William Berrios for his contributions to the implementation of WeightedLMUnitScore and help in designing the experiments used to benchmark its performance.

Related Articles

Making Search Agents Faster and Smarter

Optimize your search agents for speed and accuracy. Our two-axis approach—reranker improvements and RL-trained planners—reaches 60.7% accuracy.

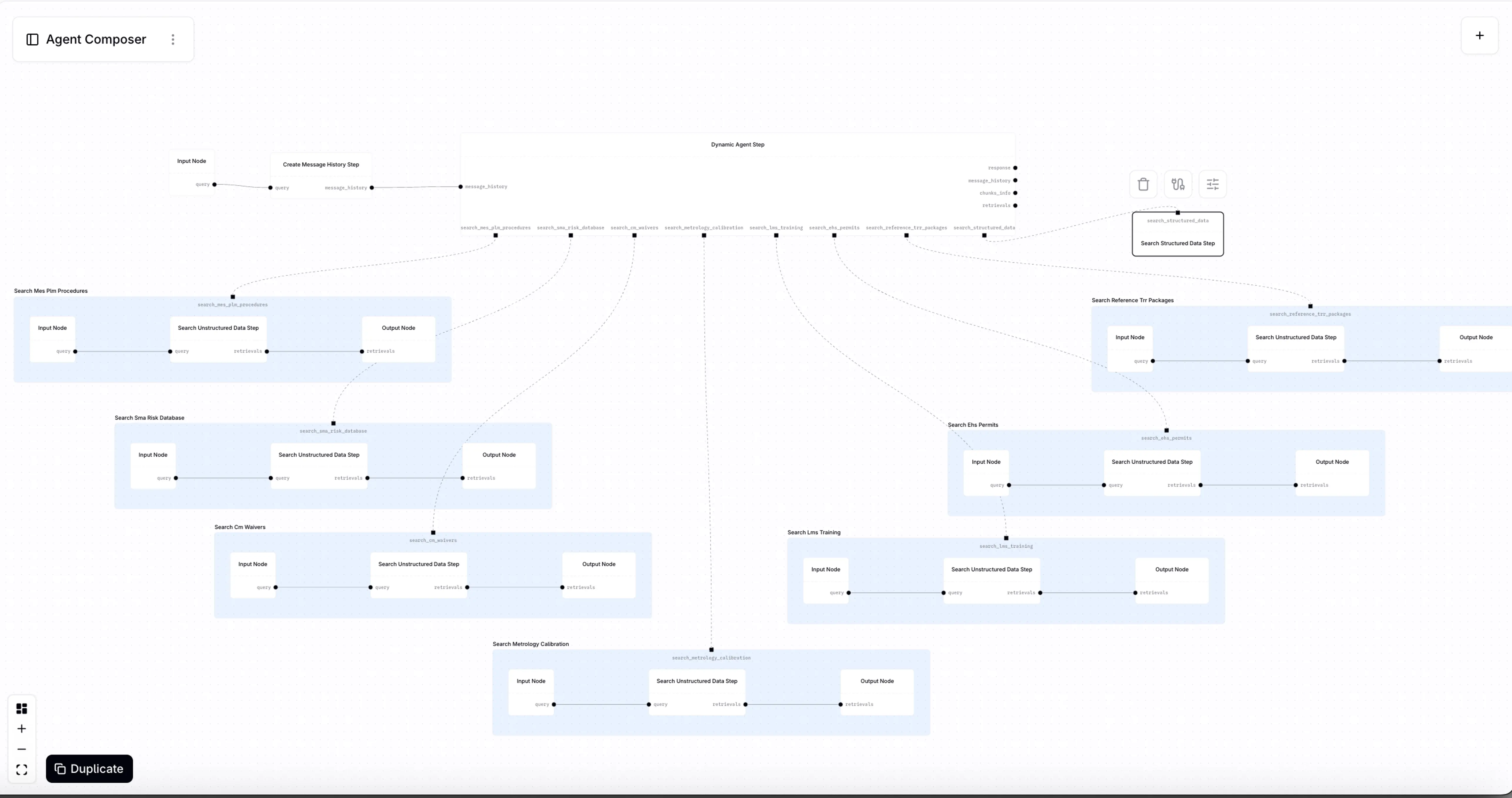

Introducing Agent Composer—AI for when it IS rocket science

Introducing Agent Composer: Expert AI for complex engineering. Cut hours of specialized, technical work down to minutes.