Announcing the General Availability of the Contextual AI Platform

Today, we’re excited to announce the general availability of the Contextual AI Platform, our state-of-the-art solution for building specialized RAG agents that can complete highly complex knowledge tasks and improve the efficiency of human experts. This release represents an important milestone in our efforts to help organizations move their highest value AI projects past the initial demo phase and into production.

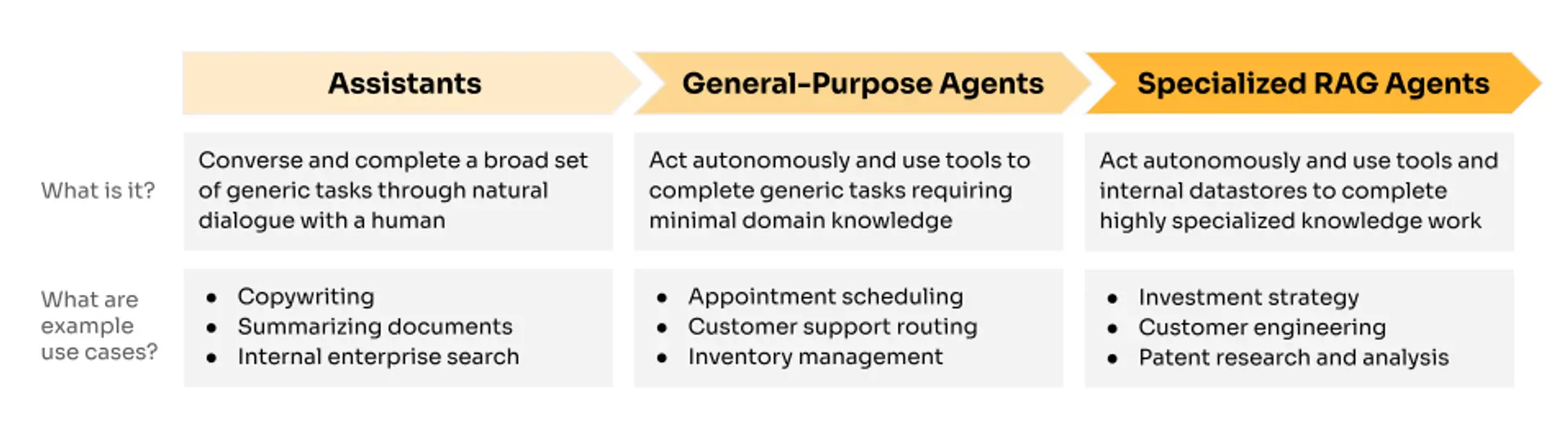

The Contextual AI Platform enables AI teams to rapidly launch specialized RAG agents that are capable of active retrieval and test-time reasoning over massive volumes of structured and unstructured enterprise data. These agents deliver exceptional accuracy in technical, complex, and knowledge-intensive workloads, such as investment analysis, engineering design support, and technical research—areas where general-purpose agents have largely fallen short.

The case for specialization

AI agents will take center stage in 2025. But while everyone will benefit from general-purpose AI agents, subject matter experts need domain-specialized RAG agents optimized for their industry-specific workflows and unique professional requirements.

The specialized tasks these experts perform are the most valuable work in any enterprise. Yet over the last two years, we’ve seen customers find it exceedingly difficult to develop production-grade AI systems, particularly for complex use cases. Building each component of the system presents significant technical challenges, from document extraction to multihop and multimodal retrieval to grounded generation. Errors compound through the system, leading to poor overall accuracy that erodes user confidence and adoption. Moreover, the complexity of securing, scaling, and maintaining AI systems frequently keeps projects from reaching production.

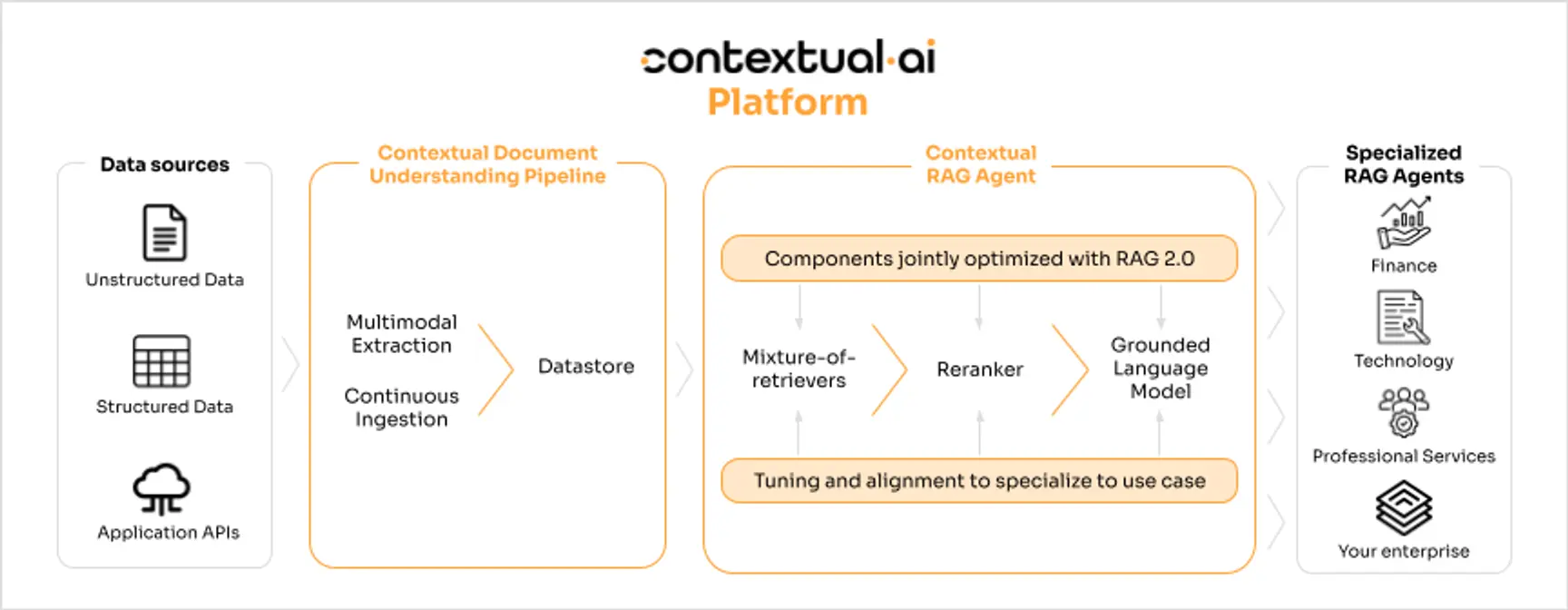

To address these challenges, the Contextual AI Platform is optimized end-to-end so that teams can easily build specialized RAG agents that achieve exceptionally high accuracy on knowledge-intensive tasks.

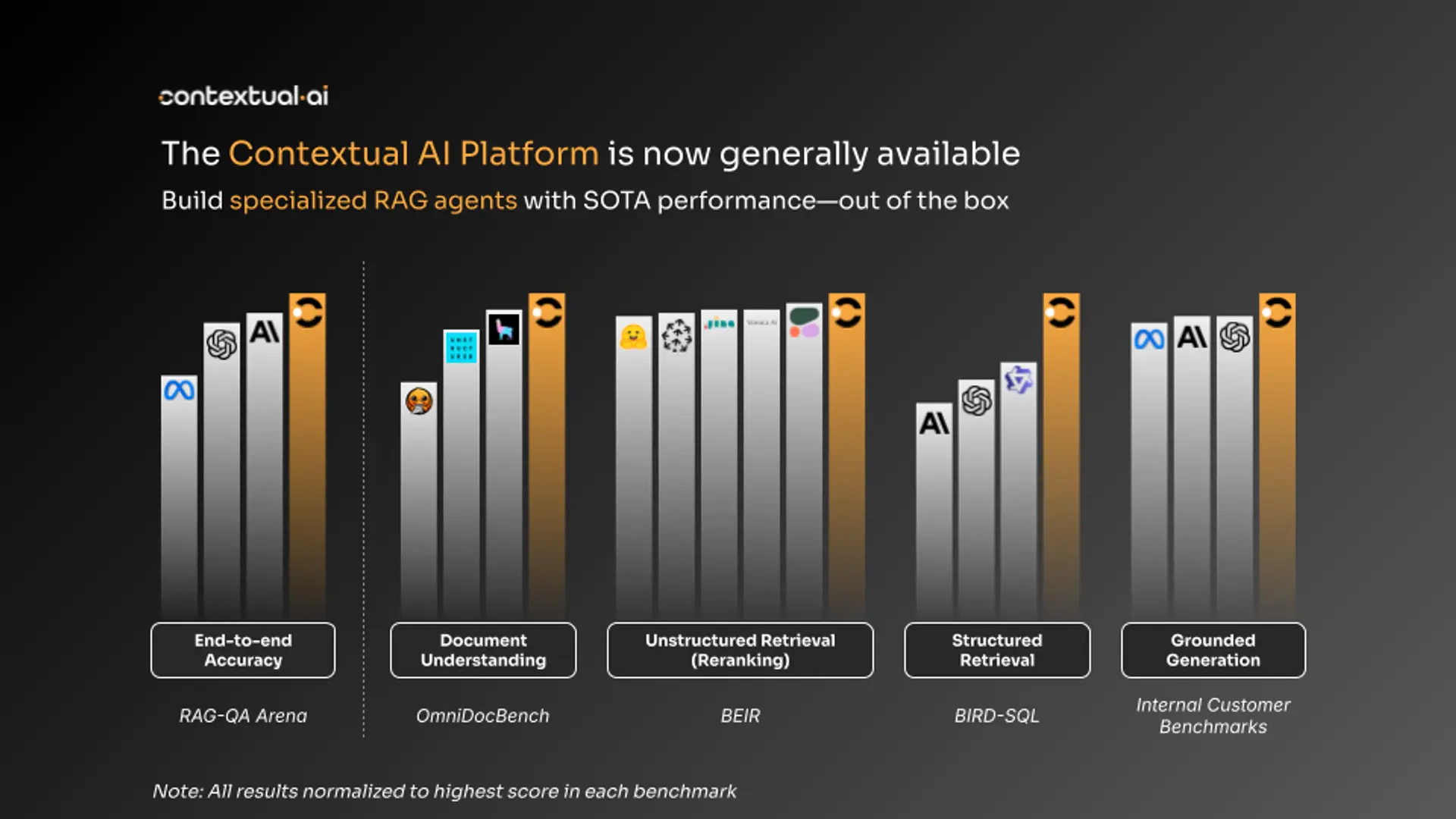

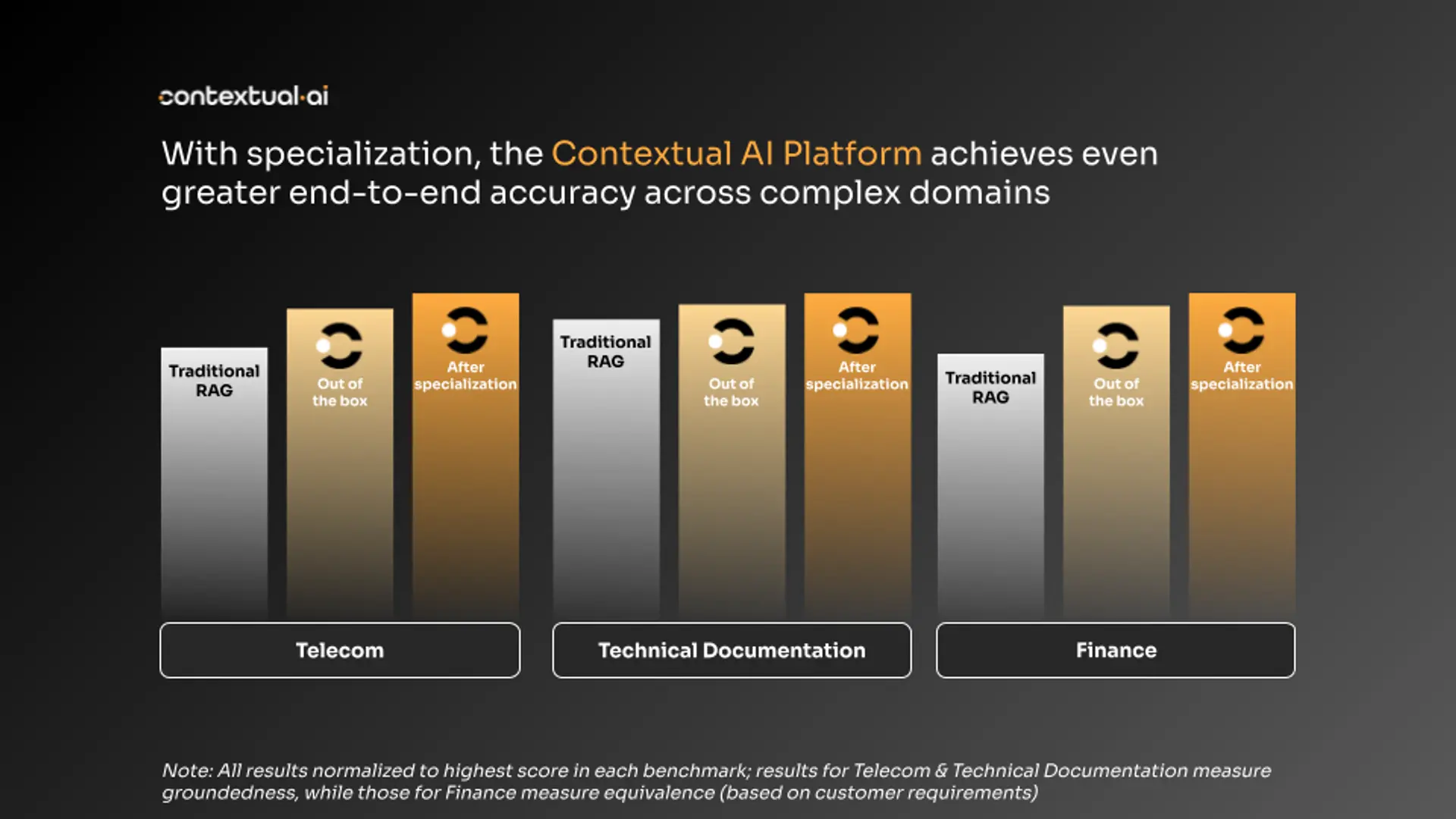

Our RAG 2.0 technology provides the foundation for building specialized RAG agents. Unlike traditional RAG systems that cobble together a collection of disjointed foundation models, RAG 2.0 jointly optimizes and tightly integrates our state-of-the-art components as a single, unified system. This approach results in unmatched accuracy, fewer hallucinations, and improved groundedness for complex tasks, out of the box. Our latest benchmarks show that our platform outperforms competing RAG systems built using leading models, including Claude 3.5 Sonnet and GPT-4o, in end-to-end accuracy, while also showing that every individual component of the platform provides unparalleled performance.

Building upon our RAG 2.0 architecture, the platform provides extensive tuning, alignment, and evaluation tools to specialize each of its components to rapidly achieve production-grade performance for specific use cases. In several real-world workloads, the Contextual AI team has partnered with customers to deliver over 15 percent greater accuracy than competing RAG systems. These results give customers the confidence to pursue mission-critical use cases where accuracy at scale is paramount.

Advanced capabilities to build specialized RAG agents for any use case

The Contextual AI Platform provides a comprehensive suite of features designed to help enterprises address any use case. Powerful platform APIs support AI teams through the entire agent development lifecycle, including data ingestion, tool integration, tuning, alignment, and evaluation.

Keep all your enterprise data at your fingertips

The Contextual AI Platform can extract insights from all enterprise data, regardless of format. Our document extraction pipeline excels at processing and understanding multimodal unstructured data sources, such as technical documentation, financial reports, and research papers. The platform also supports structured data sources, such as data warehouses, databases, and spreadsheets that store business-critical information, and it provides pre-built API integrations for popular SaaS applications like Slack, Google Drive, SharePoint, and GitHub.

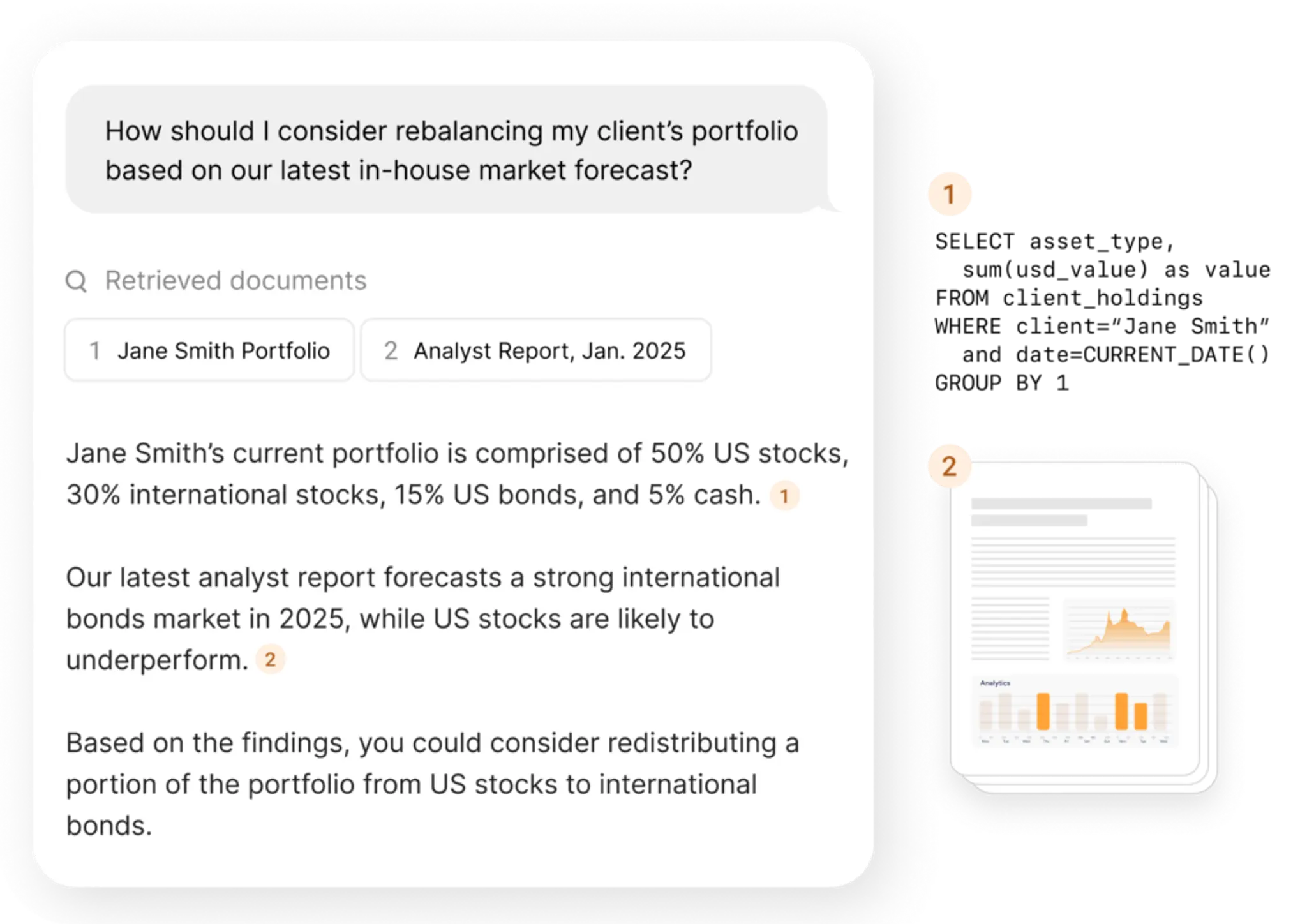

Access the most relevant data with multimodal and multihop retrieval

With our unique mixture-of-retrievers approach and state-of-the-art reranker, our agents can precisely retrieve and reason over multiple modalities, such as text, images, charts, figures, tables, and code. Our agents are also capable of test-time reasoning, choosing to search for additional data on an iterative basis to improve and sharpen responses. Together, multimodal and multihop retrieval ensure the agents are always contextualized with the most relevant knowledge needed to complete complex tasks.
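To make the idea of iterative, multihop retrieval concrete, here is a minimal Python sketch. It is not Contextual AI's implementation or API: it assumes a toy bag-of-words scorer in place of the platform's mixture of retrievers and reranker, and the function names (`retrieve`, `multihop_retrieve`) and the sample corpus are hypothetical. Each hop appends the newly retrieved text to the query, so the search can follow an entity (here, a DSP core) that the original question never mentions.

```python
from __future__ import annotations

import re

# Hypothetical stopword list so that filler words do not count as evidence.
STOPWORDS = {"a", "an", "the", "to", "of", "in", "which", "is"}


def tokenize(text: str) -> set[str]:
    """Lowercase bag of content words (a toy stand-in for a real retriever)."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}


def retrieve(query: str, corpus: dict[str, str], k: int = 1,
             exclude: set[str] | None = None) -> list[str]:
    """Return up to k doc IDs ranked by word overlap with the query, skipping excluded IDs."""
    exclude = exclude or set()
    q = tokenize(query)
    scored = sorted(
        ((len(q & tokenize(text)), doc_id)
         for doc_id, text in corpus.items() if doc_id not in exclude),
        key=lambda pair: (-pair[0], pair[1]),
    )
    return [doc_id for score, doc_id in scored[:k] if score > 0]


def multihop_retrieve(question: str, corpus: dict[str, str],
                      max_hops: int = 3, k: int = 1) -> list[str]:
    """Iteratively retrieve, expanding the query with newly found evidence on each hop."""
    context: list[str] = []
    query = question
    for _ in range(max_hops):
        hits = retrieve(query, corpus, k=k, exclude=set(context))
        if not hits:  # nothing new is relevant, so stop searching
            break
        context.extend(hits)
        # Let the next hop follow entities mentioned in the new evidence.
        query = question + " " + " ".join(corpus[d] for d in hits)
    return context


corpus = {
    "doc_a": "The X100 modem uses the Falcon DSP core.",
    "doc_b": "Falcon DSP core errata: disable turbo mode above 85C.",
    "doc_c": "Quarterly revenue grew in the handset segment.",
}
question = "Which errata apply to the X100 modem?"
print(multihop_retrieve(question, corpus))  # ['doc_a', 'doc_b']
```

A single-hop search for the same question returns only `doc_a`; the errata document becomes reachable only after the first hop surfaces the name of the DSP core.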

Always have the freshest information

Our data ingestion pipelines are configurable to automatically ingest new data into the platform on an ongoing basis. This maximizes data freshness and guarantees agents are grounded in the most up-to-date enterprise knowledge.
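One common way to keep an index fresh without reprocessing everything is to fingerprint each source document and re-ingest only what changed. The sketch below assumes a scheduled job that sees the full set of source documents on each run; the `sync` function and the hash-based change detection are illustrative assumptions, not a description of how the platform's connectors work.

```python
from __future__ import annotations

import hashlib


def content_hash(text: str) -> str:
    """Fingerprint a document's content so unchanged documents can be skipped."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def sync(source: dict[str, str], index_state: dict[str, str]) -> tuple[list[str], list[str]]:
    """Bring index_state (doc ID -> content hash) in line with source (doc ID -> text).

    Returns (upserted, deleted) doc IDs. In a real pipeline each upsert would
    trigger re-extraction, re-chunking and re-indexing; here we only record hashes.
    """
    upserted = sorted(doc_id for doc_id, text in source.items()
                      if index_state.get(doc_id) != content_hash(text))
    deleted = sorted(set(index_state) - set(source))
    for doc_id in upserted:
        index_state[doc_id] = content_hash(source[doc_id])
    for doc_id in deleted:
        del index_state[doc_id]
    return upserted, deleted


# Simulate three scheduled runs against a changing document source.
state: dict[str, str] = {}
print(sync({"a.pdf": "v1", "b.pdf": "v1"}, state))                 # (['a.pdf', 'b.pdf'], [])
print(sync({"a.pdf": "v1", "b.pdf": "v2", "c.pdf": "v1"}, state))  # (['b.pdf', 'c.pdf'], [])
print(sync({"b.pdf": "v2", "c.pdf": "v1"}, state))                 # ([], ['a.pdf'])
```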

Generate highly grounded responses

Built with Meta’s Llama 3.3, the platform’s grounded language models are uniquely engineered such that generated responses are strongly grounded in the retrieved data, ensuring high accuracy and protection against the hallucinations common in consumer-focused frontier models. Additionally, the platform automatically flags statements with low groundedness, further protecting end-users from potential hallucinations.
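The platform's groundedness checks rely on its own models, which are not described here. As a rough illustration of flagging low-groundedness statements, the sketch below scores each sentence by the fraction of its content words found in the retrieved passages and flags sentences under a hypothetical threshold of 0.6. Lexical overlap is a deliberately crude stand-in; a production system would use a trained grounding or entailment model instead.

```python
from __future__ import annotations

import re

# Hypothetical stopword list; function words carry no evidence either way.
STOPWORDS = {"a", "an", "the", "it", "also", "with", "of", "and", "to", "is", "in"}


def content_words(text: str) -> set[str]:
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}


def groundedness(sentence: str, passages: list[str]) -> float:
    """Fraction of a sentence's content words that appear in the retrieved passages."""
    words = content_words(sentence)
    if not words:
        return 1.0  # nothing to verify
    support = set().union(*(content_words(p) for p in passages))
    return len(words & support) / len(words)


def flag_low_groundedness(response: str, passages: list[str],
                          threshold: float = 0.6) -> list[str]:
    """Split a response into sentences and return those scoring below the threshold."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", response.strip()) if s]
    return [s for s in sentences if groundedness(s, passages) < threshold]


passages = ["The X100 modem supports carrier aggregation across five bands."]
response = "The X100 modem supports carrier aggregation. It also ships with a built-in GPU."
print(flag_low_groundedness(response, passages))  # ['It also ships with a built-in GPU.']
```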

Train agents to behave the way you want

Our tuning and alignment tools enable AI teams to specialize agents for any domain-specific use case. To optimize accuracy, the platform’s retrieval and generation components can be jointly tuned by providing sample queries, gold-standard responses, and supporting evidence for each response. Other parts of the platform—like document extraction techniques, weighting of lexical and semantic search results, and more—can be extensively customized to best address individual workloads. To measure the impact of each tuning job, built-in evaluation tools assess generated responses for equivalence and groundedness.
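Weighting lexical and semantic search results is one of the customization knobs mentioned above. A common approach, assumed here rather than taken from the platform, is to min-max normalize each score list and blend them with a weight `alpha`. The function names, scores, and document names are made up for illustration.

```python
from __future__ import annotations


def min_max(scores: dict[str, float]) -> dict[str, float]:
    """Rescale scores to [0, 1] so lexical and semantic scales are comparable."""
    if not scores:
        return {}
    lo, hi = min(scores.values()), max(scores.values())
    if hi == lo:
        return {doc: 1.0 for doc in scores}
    return {doc: (s - lo) / (hi - lo) for doc, s in scores.items()}


def hybrid_rank(lexical: dict[str, float], semantic: dict[str, float],
                alpha: float = 0.5) -> list[str]:
    """Rank documents by alpha * semantic + (1 - alpha) * lexical, both min-max normalized."""
    lex, sem = min_max(lexical), min_max(semantic)
    fused = {doc: alpha * sem.get(doc, 0.0) + (1 - alpha) * lex.get(doc, 0.0)
             for doc in set(lex) | set(sem)}
    # Ties are broken alphabetically so the ranking is deterministic.
    return sorted(fused, key=lambda doc: (-fused[doc], doc))


# Illustrative scores, e.g. BM25 for lexical and cosine similarity for semantic.
lexical = {"spec_sheet": 12.0, "forum_post": 3.0, "design_guide": 7.5}
semantic = {"spec_sheet": 0.61, "forum_post": 0.70, "design_guide": 0.88}
print(hybrid_rank(lexical, semantic, alpha=0.2))  # ['spec_sheet', 'design_guide', 'forum_post']
print(hybrid_rank(lexical, semantic, alpha=0.8))  # ['design_guide', 'forum_post', 'spec_sheet']
```

With `alpha=0.2` the strongest keyword match (`spec_sheet`) ranks first; with `alpha=0.8` the strongest semantic match (`design_guide`) moves to the top.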

Enterprise-grade features to move quickly and confidently into production

Moving AI systems into production requires meeting stringent enterprise requirements around security, audit trails, high availability, scalability, and compliance. Contextual AI addresses these critical prerequisites through its enterprise-grade features, flexible deployment options, and hands-on partnership to help organizations quickly operationalize their specialized RAG agents.

Rest easy with enterprise-grade security and governance

Contextual AI is SOC 2 certified and offers a comprehensive suite of enterprise-grade security features to reach production safely and confidently. Role-based access controls ensure query responses are only grounded in data that is accessible to the user, while in-transit and at-rest encryption guarantees the protection of sensitive data. Additionally, enterprises can implement query guardrails to ensure responses are safe, appropriate, and aligned with customer brand and content guidelines.
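Grounding responses only in data the user can access implies filtering retrieved documents against the user's permissions before generation. The minimal sketch below assumes each document carries a set of allowed groups and each user a set of group memberships; the `Document`, `User`, and `filter_for_user` names are hypothetical and do not reflect the platform's actual access-control model.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    doc_id: str
    text: str
    allowed_groups: frozenset[str]  # groups permitted to read this document


@dataclass(frozen=True)
class User:
    user_id: str
    groups: frozenset[str]


def can_read(user: User, doc: Document) -> bool:
    """A user may read a document if they share at least one group with its ACL."""
    return not user.groups.isdisjoint(doc.allowed_groups)


def filter_for_user(user: User, candidates: list[Document]) -> list[Document]:
    """Drop retrieved documents the user cannot access before they reach the generator."""
    return [doc for doc in candidates if can_read(user, doc)]


candidates = [
    Document("roadmap", "Unreleased product roadmap.", frozenset({"exec"})),
    Document("datasheet", "X100 electrical characteristics.", frozenset({"engineering", "sales"})),
    Document("handbook", "Travel policy.", frozenset({"all-staff"})),
]
engineer = User("u42", frozenset({"engineering", "all-staff"}))
print([d.doc_id for d in filter_for_user(engineer, candidates)])  # ['datasheet', 'handbook']
```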

Know exactly where each claim comes from

Precise citations are provided for the retrieved documents used to generate responses. While competing platforms typically cite entire source documents, which puts undue burden on the end-user to verify accuracy, our citations include tight bounding boxes to highlight the most relevant data. The fine-grained attribution promotes greater trust and confidence, which ultimately leads to greater employee productivity through higher adoption.

Deploy in our cloud or yours

The platform has several deployment options. Our fully managed, highly secure SaaS offering on Contextual AI infrastructure enables organizations to achieve faster time-to-production, lower development and maintenance costs, and higher ROI. The SaaS offering provides uptime SLAs to ensure high availability and is elastically scalable to sustain state-of-the-art performance over time. For customers in regulated industries, we also provide VPC and on-premises deployment options.

Partner with leading AI experts

Our team of AI experts partners closely with customers at every step of the agent development process and helps enterprises rapidly meet the performance requirements needed to move their highest value use cases into production. Additionally, Contextual AI’s deep commitment to AI research, as evidenced by notable research papers on topics like evaluation, multimodal understanding, and alignment, ensures your enterprise remains at the forefront of AI innovation.

Achieving real-world, production-grade performance

Through our early partnerships with leading enterprises, the Contextual AI Platform has been battle-tested in production across a variety of industries and functional domains. One such customer is Qualcomm, a global semiconductor leader that chose Contextual AI to support its highly technical and mission-critical Customer Engineering organization. The team sought an AI solution that could navigate and synthesize information from the company’s vast technical documentation to accelerate customer issue resolution.

Unlike other evaluated solutions, which struggled to achieve the accuracy required for the complex technical use case, the Contextual AI Platform dramatically improved end-to-end accuracy. The solution has since been deployed to production and helps Qualcomm’s global team of customer engineers quickly access precise information from tens of thousands of technical documents spanning multiple business units and product lines.

Try the Contextual AI Platform today

With the Contextual AI Platform now generally available, we invite you to build your own highly specialized, accurate, and trustworthy RAG agents. Get started today by requesting access to the platform.

REQUEST ACCESSRelated Articles

Making Search Agents Faster and Smarter

Optimize your search agents for speed and accuracy. Our two-axis approach—reranker improvements and RL-trained planners—reaches 60.7% accuracy.

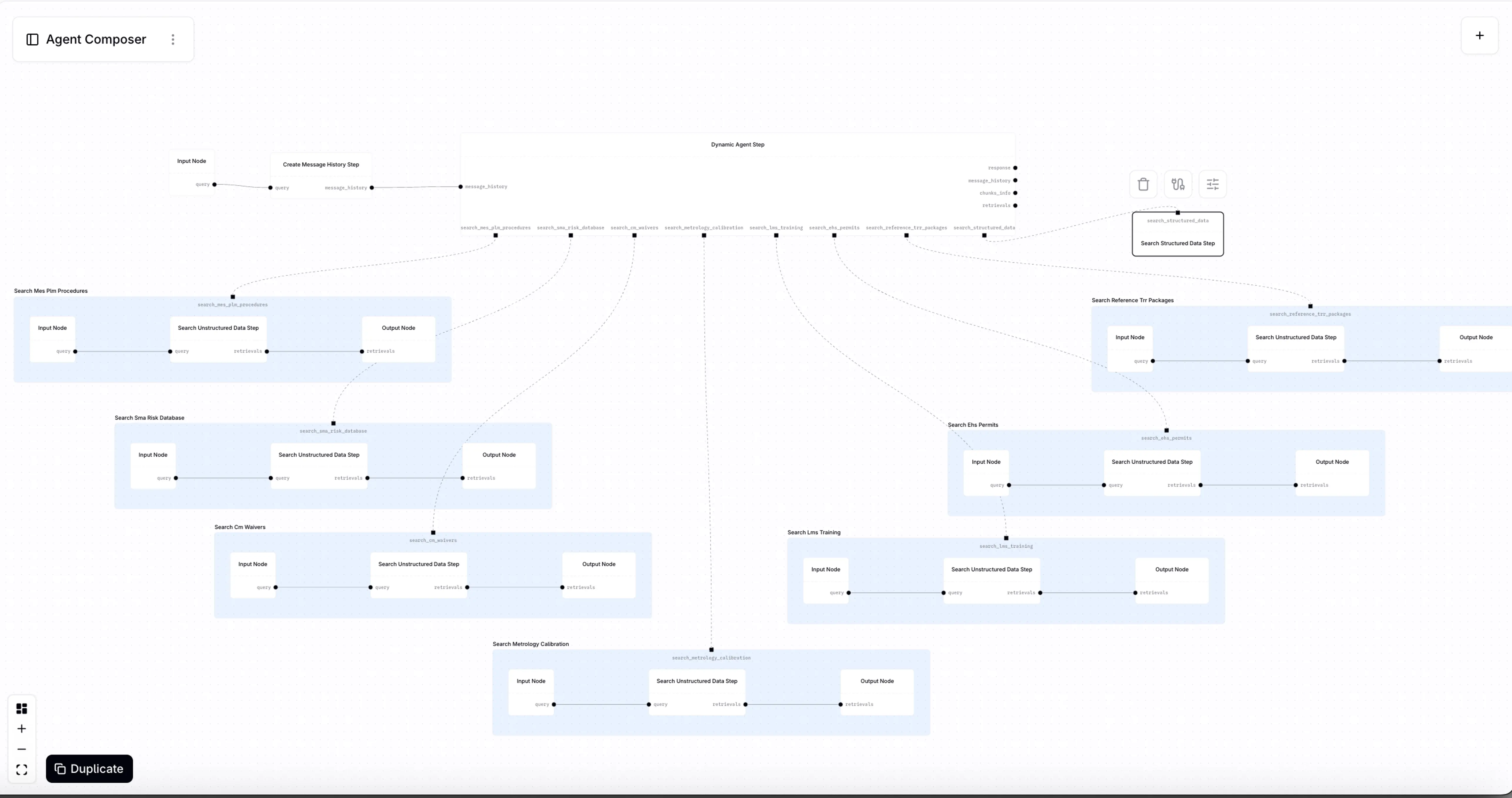

Introducing Agent Composer—AI for when it IS rocket science

Introducing Agent Composer: Expert AI for complex engineering. Cut hours of specialized, technical work down to minutes.