Why Does Enterprise AI Hallucinate?

AI hallucination—when a model generates confident-sounding but factually incorrect information—is one of the biggest barriers to enterprise AI adoption. When a customer support bot invents product specifications that don't exist, or a research assistant fabricates citations, trust evaporates.

The conventional explanation is that "AI makes things up." But that framing obscures the real problem. In enterprise settings, models rarely hallucinate because they are inherently unreliable. They hallucinate because they are asked to answer questions without access to the information they need.

Understanding this distinction is the key to building enterprise AI that actually works.

The Root Cause: AI Without Context

Large language models are trained on vast amounts of text, much of it public internet data. They learn patterns, relationships, and how to generate fluent text. But they don't have access to your company's technical documentation, product specifications, customer records, or institutional knowledge.

When you ask a general-purpose AI about your specific products, processes, or data, the model has two options: admit it doesn't know, or generate a plausible-sounding response based on patterns from its training data. Models are optimized to be helpful, so they often choose the second option—and hallucinations result.

This is why enterprise AI projects so often disappoint. Teams deploy a powerful model expecting it to understand their domain, only to discover it confidently generates responses that are subtly or catastrophically wrong. The model was never the problem; the missing context was.

The Three Types of Enterprise AI Hallucination

Understanding the different ways AI hallucinations manifest helps diagnose and address them.

Fabricated Facts

The model invents information that doesn't exist—fake product specifications, nonexistent features, imaginary capabilities. This happens when the model is asked about specific details it doesn't have access to and fills in the gaps with plausible-sounding content.

A semiconductor customer asks about a specific device parameter. The model doesn't have access to the datasheet, so it generates a parameter value that seems reasonable based on similar devices in its training data. The answer is wrong, and the customer makes a decision based on incorrect information.

Misattributed Information

The model takes information from one source and incorrectly attributes it to another, or applies information from one context to a different context where it doesn't apply. This is particularly common when models are asked to synthesize across documents.

A legal AI is asked about a contract clause. It retrieves information from a similar contract but presents it as if it came from the contract in question. The clause exists—just not in the document the user is asking about.

Outdated or Conflicting Information

The model provides information that was once accurate but has since changed, or selects one version of conflicting information without acknowledging the conflict. This happens when enterprise data evolves but the AI system doesn't have access to current information.

An internal AI assistant is asked about company policy. The policy changed six months ago, but the model only has access to the old version—or has access to both and doesn't know which is current. The answer reflects outdated policy.
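One way to handle the policy example is to store an effective date with each document version and resolve conflicts before anything reaches the model. The sketch below is illustrative only: the `PolicyVersion` record, its fields, and the `select_current` helper are hypothetical names, and it assumes every version carries a trustworthy effective date.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class PolicyVersion:
    doc_id: str
    topic: str
    effective: date  # date this version took effect (assumed to be reliable metadata)
    text: str


def select_current(
    versions: list[PolicyVersion], topic: str, today: date
) -> tuple[Optional[PolicyVersion], list[str]]:
    """Return the version in force today plus the ids of versions it supersedes."""
    in_force = [v for v in versions if v.topic == topic and v.effective <= today]
    if not in_force:
        return None, []
    latest = max(in_force, key=lambda v: v.effective)
    superseded = [v.doc_id for v in in_force if v is not latest]
    return latest, superseded


# Hypothetical data: two versions of the same policy.
versions = [
    PolicyVersion("travel-v1", "travel", date(2023, 1, 1), "Economy class only."),
    PolicyVersion("travel-v2", "travel", date(2024, 7, 1),
                  "Premium economy allowed on flights over 6 hours."),
]
current, superseded = select_current(versions, "travel", date(2025, 1, 15))
print(current.doc_id, superseded)  # travel-v2 ['travel-v1']
```

Returning the superseded ids alongside the current version lets the application tell the user that an older policy exists instead of silently picking one.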

Why Traditional Approaches Fall Short

Organizations have tried various approaches to reduce enterprise AI hallucination, with limited success.

Fine-Tuning on Enterprise Data

Fine-tuning adjusts model weights based on company-specific data. This can help the model learn domain vocabulary and patterns, but it doesn't solve the context problem. A fine-tuned model still doesn't have access to specific documents at inference time. It may hallucinate more fluently—using the right terminology while still generating incorrect information.

Fine-tuning also creates a maintenance burden. Every time documentation changes, the model needs retraining. For fast-moving enterprises, this becomes impractical.

Prompt Engineering

Better prompts can help models admit uncertainty and avoid overconfident responses. Instructions like "only answer based on information provided" or "say 'I don't know' if you're uncertain" improve behavior at the margins.

But prompt engineering can't create context that doesn't exist. If the model doesn't have access to the relevant datasheet, no prompt will help it provide the correct specification. Prompt engineering optimizes the question; the missing context is the problem.

Temperature and Parameter Tuning

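Reducing model temperature makes outputs more deterministic and less "creative," which can reduce some hallucinations. But this is a blunt instrument. Lower temperature does not supply missing facts; it concentrates probability on whatever the model already considers most likely, so an unsupported guess simply becomes more consistent. It also narrows the range of phrasings and ideas the model explores, which can make responses less useful for open-ended synthesis. You end up with AI that is more predictable, but not reliably more accurate.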
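To see why temperature is a blunt instrument, recall how it enters sampling: token probabilities are computed as p_i = exp(z_i / T) / sum_j exp(z_j / T), where z_i are the model's logits and T is the temperature. The sketch below uses made-up logits for three candidate tokens (an assumption for illustration, not output from any real model) and shows that lowering T sharpens the distribution without changing which token is favored.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Convert logits to probabilities after dividing each logit by the temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max before exponentiating for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical logits for three candidate next tokens.
logits = [2.0, 1.0, 0.5]
for t in (1.0, 0.5, 0.1):
    probs = softmax_with_temperature(logits, t)
    print(f"T={t}: {[round(p, 3) for p in probs]}")
```

If the favored token is a guess made without the relevant document, a lower temperature only makes that same guess more likely to be emitted.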

Human Review

Many organizations default to human review of all AI outputs. This catches hallucinations but eliminates the efficiency gains that motivated AI adoption in the first place. If every response requires expert review, you've built an expensive autocomplete, not an intelligent assistant.

The Solution: Grounding AI in Enterprise Context

The only reliable way to reduce enterprise AI hallucination is to give models access to the information they need to answer accurately. This is the domain of retrieval-augmented generation (RAG) and, more broadly, context engineering.

The core insight is simple: instead of asking a model to answer from memory, retrieve the relevant documents and include them in the prompt. The model reasons over provided information rather than generating from patterns.

When a customer asks about a device specification, the system first retrieves the relevant datasheet, then asks the model to answer based on the retrieved content. The model has the information it needs; hallucination risk drops dramatically.
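The retrieve-then-answer flow can be sketched in a few lines. This is a minimal illustration, not a production design: the `Document` type, the word-overlap `retrieve` function, and the prompt wording are all assumptions made for the example, and a real system would use a proper search index and send the resulting prompt to a model.

```python
from dataclasses import dataclass


@dataclass
class Document:
    doc_id: str
    text: str


def tokenize(text: str) -> set[str]:
    """Lowercase, split on whitespace, and trim surrounding punctuation."""
    return {tok.strip(".,:;?!()").lower() for tok in text.split()}


def retrieve(query: str, corpus: list[Document], k: int = 2) -> list[Document]:
    """Toy retriever: rank documents by the number of terms shared with the query."""
    q = tokenize(query)
    scored = [(len(q & tokenize(d.text)), d) for d in corpus]
    scored = [(s, d) for s, d in scored if s > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for _, d in scored[:k]]


def build_grounded_prompt(query: str, docs: list[Document]) -> str:
    """Put retrieved passages ahead of the question and ask for cited answers."""
    context = "\n".join(f"[{d.doc_id}] {d.text}" for d in docs)
    return (
        "Answer using only the sources below. Cite source ids in brackets. "
        "If the sources do not contain the answer, say so.\n\n"
        f"Sources:\n{context}\n\nQuestion: {query}"
    )


# Hypothetical corpus for illustration.
corpus = [
    Document("datasheet-a", "Device A maximum supply voltage is 5.5 V."),
    Document("datasheet-b", "Device B operating temperature range is -40 to 85 C."),
    Document("handbook", "Vacation requests are submitted through the HR portal."),
]
query = "What is the maximum supply voltage of Device A?"
docs = retrieve(query, corpus)
prompt = build_grounded_prompt(query, docs)
print(prompt)
```

Because each passage is labeled with its source id, the same structure supports attribution: the application can map bracketed ids in the model's answer back to the original documents.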

Why Retrieval Works

Retrieval-augmented systems ground model responses in source documents. The model's job shifts from "generate an answer" to "synthesize an answer from provided information." This is a fundamentally different task—and one models excel at.

Retrieval also enables attribution. When the system retrieves specific documents and the model generates a response based on them, you can show users exactly where information came from. This builds trust and enables verification.

The Accuracy Imperative

Not all retrieval systems are equal. If retrieval surfaces irrelevant or outdated documents, the model will still generate poor responses—just grounded in the wrong information. Effective enterprise RAG requires precise retrieval that surfaces exactly the information needed for each query.

This is where many implementations struggle. Basic RAG tutorials demonstrate retrieval on clean, simple document sets. Enterprise reality is messy: millions of documents, complex formats, overlapping and conflicting information, strict access controls. Building retrieval that works at enterprise scale requires purpose-built infrastructure.

Building Hallucination-Resistant Enterprise AI

Organizations serious about reducing AI hallucination invest in several key areas.

Comprehensive Data Ingestion

AI can only retrieve information it has access to. Hallucination risk increases whenever relevant information exists but isn't indexed. Effective systems connect to all relevant data sources—document repositories, databases, ticketing systems, knowledge bases—and keep indices current as information changes.
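Keeping an index current usually comes down to detecting what changed since the last sync. A minimal sketch of one approach, assuming the index stores a content hash per document id: `plan_index_updates` and the dictionary shapes are hypothetical, chosen only to illustrate the idea.

```python
import hashlib


def content_hash(text: str) -> str:
    """Stable fingerprint of a document's text."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def plan_index_updates(
    indexed: dict[str, str], source: dict[str, str]
) -> dict[str, list[str]]:
    """Compare stored hashes (doc_id -> hash) with current source text (doc_id -> text)."""
    to_add = sorted(d for d in source if d not in indexed)
    to_update = sorted(
        d for d in source if d in indexed and content_hash(source[d]) != indexed[d]
    )
    to_delete = sorted(d for d in indexed if d not in source)
    return {"add": to_add, "update": to_update, "delete": to_delete}


# Hypothetical state: what the index saw last time vs. what the repository holds now.
indexed = {
    "spec-1": content_hash("rev A"),
    "spec-2": content_hash("rev A"),
    "old-faq": content_hash("retired content"),
}
source = {"spec-1": "rev A", "spec-2": "rev B", "spec-3": "rev A"}
plan = plan_index_updates(indexed, source)
print(plan)
```

The delete list matters as much as the other two: a document removed from the source but left in the index is a direct route to answers based on outdated information.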

Enterprise-Grade Parsing

Technical documents contain tables, figures, cross-references, and complex layouts that basic parsers mishandle. When parsing fails, retrieved content is garbled or incomplete, and the model generates responses based on corrupted context. Investing in robust parsing pays dividends in response quality.

Precision Retrieval

The quality of retrieved content directly determines response quality. Systems need hybrid retrieval (combining semantic and keyword search), learned reranking (scoring results for relevance to specific queries), and query reformulation (improving searches when initial results are poor). Every improvement in retrieval precision reduces hallucination risk.
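Hybrid retrieval needs some way to merge a keyword ranking and a semantic ranking into one list. Reciprocal rank fusion is one widely used method for that, shown here as an illustration and not as the specific technique any particular platform uses. Each document scores 1 / (k + rank) per list it appears in, and the scores are summed. The document ids are made up, and k = 60 is a commonly used default.

```python
from collections import defaultdict


def reciprocal_rank_fusion(
    rankings: list[list[str]], k: int = 60
) -> list[tuple[str, float]]:
    """Fuse ranked lists of document ids; a higher fused score means more relevant."""
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Hypothetical results from a keyword search and a semantic (embedding) search.
keyword_ranking = ["datasheet-a", "errata-7", "app-note-3"]
semantic_ranking = ["app-note-3", "datasheet-a", "faq-12"]

fused = reciprocal_rank_fusion([keyword_ranking, semantic_ranking])
for doc_id, score in fused:
    print(f"{doc_id}: {score:.4f}")
```

Documents that both retrievers agree on rise to the top, and the fused list can then be passed to a reranker for a final relevance pass.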

Confidence and Attribution

Well-designed systems communicate confidence and show sources. When the system retrieves highly relevant documents, responses can be confident. When retrieval is uncertain, the system should say so rather than guess. Citations let users verify information and build appropriate trust.
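The "say so rather than guess" behavior can be enforced before generation by checking retrieval scores. The sketch below assumes a reranker has already scored each hit between 0 and 1; the `Hit` type, the 0.6 threshold, and the response shape are hypothetical and would need tuning against real data.

```python
from dataclasses import dataclass


@dataclass
class Hit:
    doc_id: str
    score: float  # relevance in [0, 1], assumed to come from a reranker


def answer_or_abstain(hits: list[Hit], min_score: float = 0.6) -> dict:
    """Pass only sufficiently relevant sources to generation, or abstain explicitly."""
    strong = [h for h in hits if h.score >= min_score]
    if not strong:
        return {
            "status": "abstain",
            "message": "No sufficiently relevant source was found.",
            "sources": [],
        }
    return {"status": "answer", "sources": [h.doc_id for h in strong]}


# Hypothetical retrieval results for two different queries.
confident = answer_or_abstain([Hit("datasheet-a", 0.91), Hit("app-note-3", 0.42)])
uncertain = answer_or_abstain([Hit("faq-12", 0.30)])
print(confident)
print(uncertain)
```

The list of source ids in the "answer" case doubles as the citation list shown to the user.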

Guardrails and Boundaries

Some questions shouldn't be answered, even with good retrieval. Effective systems include guardrails that prevent responses on topics outside the system's scope, flag potentially sensitive queries for human review, and enforce output policies. Knowing when not to answer is as important as answering well.

Continuous Evaluation

Hallucination risk evolves as data changes, usage patterns shift, and edge cases emerge. Organizations need ongoing evaluation—automated testing, user feedback loops, and periodic audits—to maintain quality over time. A system that worked well at launch can degrade without monitoring.
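Automated testing can start as a small "golden set" of questions with known answers that runs on every change. This is a simplified sketch: the substring check, the `evaluate` helper, and the stub assistant are assumptions for illustration, and real evaluations typically use richer scoring than string matching.

```python
from typing import Callable


def evaluate(
    answer_fn: Callable[[str], str], golden: list[dict]
) -> tuple[float, list[str]]:
    """Run each golden question and record those whose answer lacks the expected text."""
    if not golden:
        raise ValueError("golden set must not be empty")
    failures = []
    for case in golden:
        answer = answer_fn(case["question"])
        if case["must_contain"].lower() not in answer.lower():
            failures.append(case["question"])
    accuracy = (len(golden) - len(failures)) / len(golden)
    return accuracy, failures


def stub_assistant(question: str) -> str:
    """Stand-in for the real system; it knows only one answer."""
    canned = {
        "What is the maximum supply voltage of Device A?":
            "The maximum supply voltage is 5.5 V [datasheet-a].",
    }
    return canned.get(question, "I don't know.")


# Hypothetical golden set.
golden = [
    {"question": "What is the maximum supply voltage of Device A?",
     "must_contain": "5.5 V"},
    {"question": "What is the operating temperature range of Device B?",
     "must_contain": "-40 to 85"},
]
accuracy, failures = evaluate(stub_assistant, golden)
print(accuracy, failures)
```

Tracking the failure list over time, not just the accuracy number, shows which questions regress when data or retrieval settings change.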

The Real Problem Isn't the Model

It's tempting to blame AI hallucination on model limitations. But the models are remarkably capable. GPT-5, Claude, and other frontier models can reason, synthesize, and generate high-quality responses—when they have the information they need.

The gap isn't intelligence; it's context. Enterprise AI fails when we ask models to answer questions about information they don't have access to. The solution isn't waiting for better models; it's building systems that connect models to enterprise knowledge.

This reframing changes how organizations approach enterprise AI. Instead of evaluating models in isolation, evaluate the complete system: data ingestion, parsing, retrieval, generation, and governance. Instead of fine-tuning and hoping, build infrastructure that ensures models have what they need.

The organizations seeing real results from enterprise AI aren't using magic models. They're investing in context engineering—the systems that make AI work for expert-level tasks.

From Hallucination to Trust

The goal isn't eliminating hallucination entirely—even humans make mistakes. The goal is building systems that are reliable enough to trust with real work, with appropriate guardrails and verification mechanisms.

This means AI that admits uncertainty rather than guessing. AI that shows sources so users can verify. AI that operates within defined boundaries rather than attempting to answer everything. AI that improves through feedback and evaluation.

When organizations build these systems—grounding AI in enterprise context with robust retrieval and appropriate guardrails—enterprise AI transforms from a liability to an asset. The same technology that hallucinated confidently now accelerates expert work, turning 8-hour tasks into 10-minute tasks while maintaining accuracy.

The question isn't whether enterprise AI can work. It's whether organizations invest in the context infrastructure required to make it work.

Build Enterprise AI That Stays Grounded in Your Data

Contextual AI provides the context layer that helps reduce enterprise AI hallucination. Our platform connects AI to your technical documentation, specifications, and institutional knowledge, with the retrieval accuracy and governance that enterprises require.

FAQ

Why does AI hallucinate in enterprise settings?

Enterprise AI hallucination occurs because models don't have access to company-specific information such as technical documentation, product specifications, and customer records. Without this context, models generate plausible-sounding but incorrect responses based on patterns from training data.

How can you prevent AI hallucination?

The most effective way to prevent AI hallucination is retrieval-augmented generation (RAG), which retrieves relevant documents and includes them in the prompt before generation. This grounds model responses in actual information rather than patterns.

Is AI hallucination a model problem?

AI hallucination is primarily a context problem, not a model problem. Frontier models are highly capable when given the right information. Hallucination typically occurs when models are asked to answer without access to relevant enterprise data.

Can fine-tuning eliminate AI hallucination?

Fine-tuning alone does not eliminate AI hallucination. While fine-tuning helps models learn domain vocabulary, it doesn't give them access to specific documents at inference time. Retrieval-augmented approaches are more effective for grounding responses in current enterprise data.

Related Articles

Making Search Agents Faster and Smarter

Optimize your search agents for speed and accuracy. Our two-axis approach—reranker improvements and RL-trained planners—reaches 60.7% accuracy.

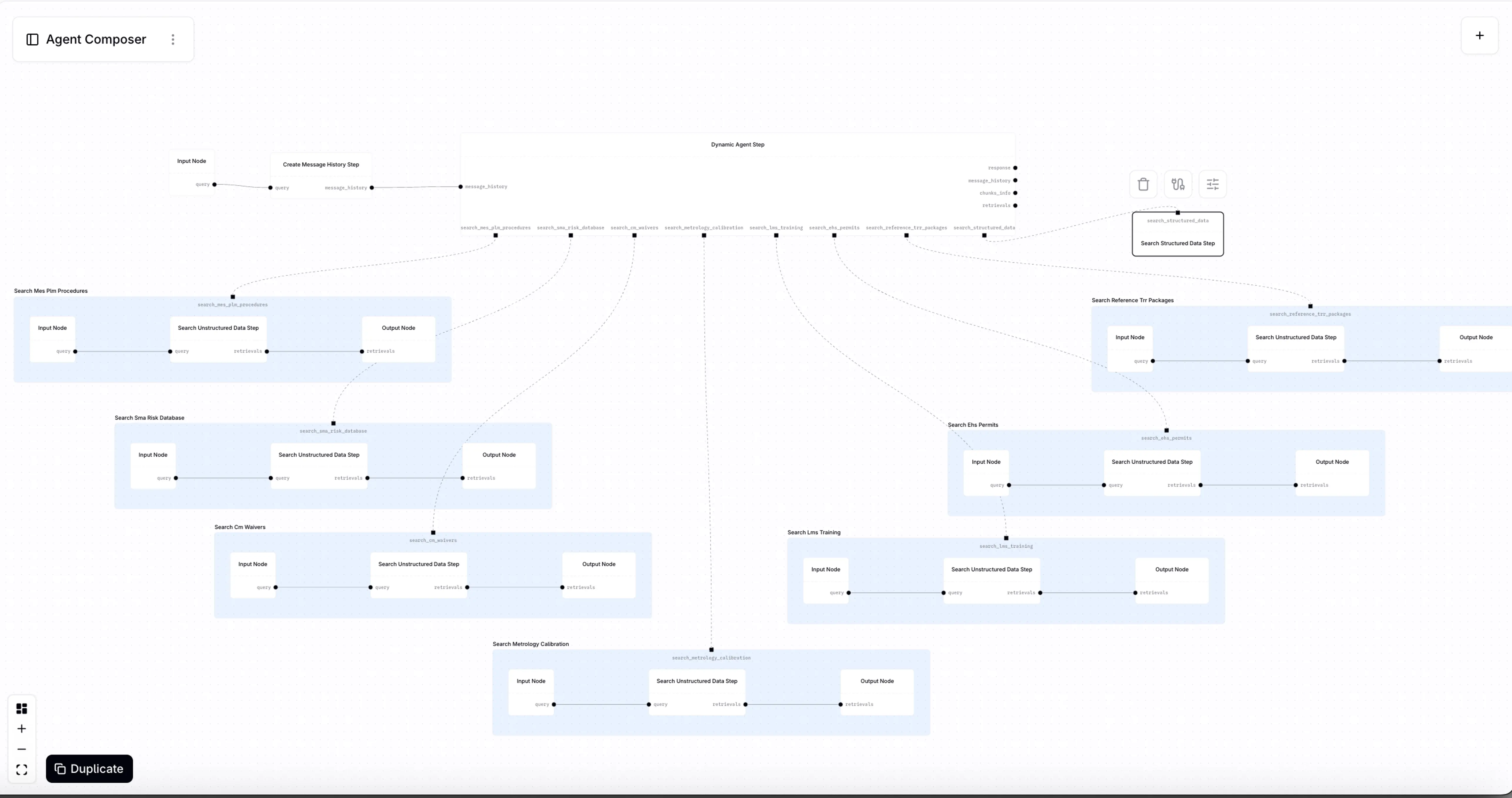

Introducing Agent Composer—AI for when it IS rocket science

Introducing Agent Composer: Expert AI for complex engineering. Cut hours of specialized, technical work down to minutes.