RAG vs Fine-Tuning: Which Approach is Right for Enterprise AI?

When building enterprise AI applications, teams face a core architecture decision: retrieval-augmented generation (RAG) or fine-tuning? Both approaches adapt large language models to domain-specific information, but they work in fundamentally different ways, with different tradeoffs in accuracy, cost, maintenance, and suitable use cases.

This guide breaks down both approaches to help you make the right choice for your enterprise AI project.

Understanding the Two Approaches

What is Retrieval-Augmented Generation (RAG)?

RAG retrieves relevant documents from a knowledge base and includes them in the prompt when generating responses. The model reasons over retrieved content rather than relying solely on information learned during training.

When a user asks a question, the system searches for relevant documents, retrieves the most pertinent content, and passes it to the model along with the query. The model generates a response grounded in the retrieved information.

RAG keeps the base model unchanged. It works by providing context at inference time rather than modifying the model itself.
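The retrieve-then-prompt flow described above can be sketched in a few lines. This is a minimal illustration, not a production design: the word-overlap scoring stands in for a real search or embedding index, and the names `retrieve`, `build_prompt`, and the sample `DOCS` are hypothetical.

```python
import re

def tokenize(text: str) -> set[str]:
    """Lowercase a string and split it into a set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query: str, documents: dict[str, str], k: int = 2) -> list[str]:
    """Return ids of up to k documents that share the most words with the query.

    Word overlap is a toy relevance score (an assumption for this sketch);
    real systems typically use keyword search, embeddings, or both.
    """
    query_tokens = tokenize(query)
    scored = [
        (len(query_tokens & tokenize(text)), doc_id)
        for doc_id, text in documents.items()
    ]
    # Drop documents with no overlap, then sort by score (ties broken by id).
    scored = [(score, doc_id) for score, doc_id in scored if score > 0]
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [doc_id for _, doc_id in scored[:k]]

def build_prompt(query: str, documents: dict[str, str], doc_ids: list[str]) -> str:
    """Place the retrieved text in the prompt; the model itself is untouched."""
    context = "\n".join(f"[{doc_id}] {documents[doc_id]}" for doc_id in doc_ids)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )

# Hypothetical knowledge base for illustration only.
DOCS = {
    "hr-001": "Employees accrue vacation days every month.",
    "it-014": "Password resets are handled by the IT help desk.",
    "fin-007": "Expense reports are due at the end of each month.",
}

question = "How do I reset my password?"
hits = retrieve(question, DOCS)
prompt = build_prompt(question, DOCS, hits)
```

The resulting `prompt` string is what would be sent to an unmodified base model; only the context changes from query to query.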

What is Fine-Tuning?

Fine-tuning trains a model on domain-specific data, adjusting its weights to encode new knowledge and behaviors. The model "learns" from examples and incorporates that learning into its parameters.

Organizations fine-tune by providing training examples—typically question-answer pairs or documents—and running additional training passes. The resulting model has modified weights that reflect the training data.

Fine-tuning changes the model itself. The new knowledge becomes part of the model's parameters rather than being provided at inference time.
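To make "providing training examples" concrete, here is a minimal sketch of turning question-answer pairs into JSONL training records. The chat-style `messages` layout is an assumption borrowed from a common convention; the exact schema depends on the training framework or provider you use, and the sample pairs are invented.

```python
import json

def to_training_record(question: str, answer: str) -> dict:
    """Wrap one question-answer pair as a chat-style training example.

    The "messages" layout is an assumed convention, not a universal format.
    """
    return {
        "messages": [
            {"role": "user", "content": question},
            {"role": "assistant", "content": answer},
        ]
    }

def to_jsonl(pairs: list[tuple[str, str]]) -> str:
    """Serialize pairs as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(to_training_record(q, a)) for q, a in pairs)

# Hypothetical examples in a desired brand voice.
PAIRS = [
    ("Where is my order?", "Thanks for reaching out! Let me check on that for you."),
    ("Can I change my plan?", "Absolutely! Here is how to switch plans."),
]

training_file_contents = to_jsonl(PAIRS)
```

A training job would consume a file like this and produce new weights, which is the step RAG avoids entirely.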

Head-to-Head Comparison

| Consideration | RAG | Fine-tuning |
|---|---|---|
| How it works | Retrieves relevant documents at query time | Trains knowledge into model weights |
| Data freshness | Always current (retrieves live data) | Static (requires retraining to update) |
| Setup cost | Moderate (build retrieval infrastructure) | High (training compute, expertise) |
| Ongoing cost | Lower (retrieval + inference) | Higher (periodic retraining) |
| Accuracy on specifics | High (retrieves exact documents) | Variable (depends on training coverage) |
| Attribution | Easy (can cite retrieved sources) | Difficult (knowledge is in weights) |
| Maintenance | Update documents, index refreshes | Retrain model when data changes |
| Hallucination risk | Lower (grounded in retrieved content) | Higher (generates from learned patterns) |
| Best for | Factual Q&A, documentation, research | Style, tone, specialized vocabulary |

When to Use RAG

RAG excels when your application requires accurate, current, attributable information from enterprise data sources.

Factual Question-Answering

When users need specific facts from documentation—product specifications, policy details, technical parameters—RAG retrieves the authoritative source and grounds the response in it. The model doesn't need to "know" the answer; it needs to find and synthesize it.

Example: A customer asks about a semiconductor device's operating temperature range. RAG retrieves the relevant datasheet section and generates a response with the exact specification and a citation.

Fast-Changing Information

When enterprise data changes frequently, RAG automatically incorporates updates. New documents are indexed; retrieval surfaces current information. No retraining required.

Example: A company updates its HR policies quarterly. With RAG, the AI assistant automatically retrieves current policy documents. With fine-tuning, the model would need retraining each quarter.
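The update path can be sketched as an index upsert: replacing a document's text is enough for the next query to see the new version. The `DocumentIndex` class below is a hypothetical in-memory stand-in for a real search or vector index, and the policy text is invented for illustration.

```python
class DocumentIndex:
    """A toy in-memory index keyed by document id (illustrative only)."""

    def __init__(self) -> None:
        self._docs: dict[str, str] = {}

    def upsert(self, doc_id: str, text: str) -> None:
        """Insert a new document or overwrite the existing version."""
        self._docs[doc_id] = text

    def get(self, doc_id: str) -> str:
        """Return the current text stored for a document id."""
        return self._docs[doc_id]

    def search(self, term: str) -> list[str]:
        """Return sorted ids of documents containing the term (case-insensitive)."""
        needle = term.lower()
        return sorted(
            doc_id for doc_id, text in self._docs.items() if needle in text.lower()
        )

index = DocumentIndex()

# Initial policy (made-up content).
index.upsert("pto-policy", "Employees receive 15 days of paid leave per year.")

# Quarterly update: re-index the same document id with the revised text.
index.upsert("pto-policy", "Employees receive 20 days of paid leave per year.")

current_text = index.get("pto-policy")
```

No model weights change in this flow. Freshness is bounded by how often the index is refreshed, so the refresh schedule matters.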

Large Knowledge Bases

RAG scales to millions of documents without proportional increases in cost. The retrieval system indexes content; the model only sees relevant chunks at query time.

Example: A technical support system covers 50,000 product documents. RAG retrieves the relevant few for each query. Fine-tuning on this volume would be expensive and the model couldn't reliably recall specific details.

Auditability Requirements

RAG enables attribution—showing users exactly where information came from. This matters for compliance, legal, and high-stakes applications where decisions must be traceable to source documents.

Example: A compliance AI flags potential policy violations and cites the specific regulation and internal policy document supporting each finding.
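One way to make attribution concrete is to carry source metadata alongside each retrieved chunk and append it to the answer. This is a sketch under assumptions: the `Chunk` fields, the `with_citations` helper, and the file and section names are all hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Chunk:
    """A retrieved passage plus the metadata needed to cite it."""
    source: str   # e.g. a file name or document title
    section: str  # where in the source the passage came from
    text: str

def with_citations(answer: str, chunks: list[Chunk]) -> str:
    """Append a numbered source list so each finding is traceable."""
    lines = [answer, "", "Sources:"]
    for number, chunk in enumerate(chunks, start=1):
        lines.append(f"[{number}] {chunk.source}, {chunk.section}")
    return "\n".join(lines)

# Invented chunks standing in for retrieval results.
retrieved = [
    Chunk("travel-policy.pdf", "Section 4.2", "Flights over $500 need approval."),
    Chunk("expense-guide.pdf", "Section 1.1", "Receipts are required for all claims."),
]

report = with_citations(
    "This claim may violate the pre-approval rule [1] and lacks a receipt [2].",
    retrieved,
)
```

Because the citations come from retrieval metadata rather than from the model's output, they can be checked against the source repository.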

Multi-Tenant Applications

RAG supports query-time access controls, showing different users information based on their permissions. A single system can serve multiple user groups with different data access.

Example: A customer support AI serves multiple product lines. Sales reps see information relevant to their accounts; support engineers see technical documentation they're authorized to access.
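Query-time access control can be sketched as a permission filter applied before retrieval results reach the model. The `Document` structure, group names, and `visible_documents` helper below are assumptions for illustration; a real deployment would usually push this filter into the search index and sync permissions from the source systems.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Document:
    """A document tagged with the groups allowed to read it."""
    doc_id: str
    text: str
    allowed_groups: frozenset[str]

def visible_documents(
    documents: list[Document], user_groups: set[str]
) -> list[Document]:
    """Keep only documents that share at least one group with the user."""
    return [doc for doc in documents if doc.allowed_groups & user_groups]

# Hypothetical corpus with per-document permissions.
CORPUS = [
    Document("acct-1", "Account notes for a key customer.", frozenset({"sales"})),
    Document("tech-9", "Firmware debugging procedure.", frozenset({"support"})),
    Document("faq-2", "Public product FAQ.", frozenset({"sales", "support"})),
]

sales_view = [doc.doc_id for doc in visible_documents(CORPUS, {"sales"})]
support_view = [doc.doc_id for doc in visible_documents(CORPUS, {"support"})]
```

The same index serves both groups; only the filter differs per request, so a document the user cannot read never enters the prompt.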

When to Use Fine-Tuning

Fine-tuning excels when you need to change how the model behaves, writes, or reasons—rather than what specific facts it knows.

Specialized Style or Tone

When outputs need to match a specific writing style, voice, or format consistently, fine-tuning encodes these patterns into the model's weights.

Example: A company wants all customer communications to follow a specific brand voice. Fine-tuning on approved examples teaches the model this style.

Domain-Specific Vocabulary

When a domain uses specialized terminology, abbreviations, or jargon that the base model handles poorly, fine-tuning helps the model use these terms naturally.

Example: A legal AI needs to use precise legal terminology correctly. Fine-tuning on legal documents improves the model's facility with domain language.

Structured Output Formats

When outputs must follow specific formats consistently—particular JSON structures, report templates, or data schemas—fine-tuning can improve reliability.

Example: An extraction system must output data in a specific schema. Fine-tuning on examples improves format compliance.
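Format compliance is easy to measure, which helps when deciding whether fine-tuning is worth it. The sketch below checks model outputs against a small required schema and reports the share that pass; the field names, sample outputs, and `compliance_rate` helper are hypothetical.

```python
import json

# Assumed schema for illustration: field name -> accepted Python type(s).
REQUIRED_FIELDS = {"part_number": str, "max_temp_c": (int, float)}

def is_compliant(raw_output: str) -> bool:
    """Return True if the output is a JSON object with all required fields."""
    try:
        data = json.loads(raw_output)
    except json.JSONDecodeError:
        return False
    if not isinstance(data, dict):
        return False
    return all(
        isinstance(data.get(field), expected)
        for field, expected in REQUIRED_FIELDS.items()
    )

def compliance_rate(outputs: list[str]) -> float:
    """Fraction of outputs that satisfy the schema (0.0 for an empty list)."""
    if not outputs:
        return 0.0
    return sum(is_compliant(output) for output in outputs) / len(outputs)

# Invented model outputs: two valid, one missing a field, one with extra prose.
SAMPLE_OUTPUTS = [
    '{"part_number": "X1", "max_temp_c": 85}',
    '{"part_number": "X2"}',
    'Sure! Here is the JSON: {"part_number": "X3", "max_temp_c": 90}',
    '{"part_number": "X4", "max_temp_c": 125.0}',
]

rate = compliance_rate(SAMPLE_OUTPUTS)
```

Running the same check before and after fine-tuning gives a direct measure of whether format reliability actually improved.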

Reasoning Patterns

When the model needs to follow specific reasoning approaches or methodologies, fine-tuning can encode these patterns.

Example: A diagnostic AI should follow a particular troubleshooting methodology. Fine-tuning on examples of this reasoning pattern improves consistency.

The Case for RAG in Enterprise Settings

For most enterprise AI applications, RAG offers significant advantages over fine-tuning.

Accuracy on Specific Facts

Enterprise applications typically require accurate responses about specific facts: What does this specification say? What is the policy on this? What did this customer order? RAG retrieves the source and grounds the response; fine-tuned models must recall from parameters and often hallucinate details.

On knowledge-intensive tasks, RAG generally holds the advantage over fine-tuning for factual accuracy, and the reason is structural. When the answer exists in an indexed document, retrieval can put it directly in front of the model; fine-tuning depends on the model having memorized it during training.

Maintainability

Enterprise data changes constantly—new products, updated policies, revised documentation. RAG adapts automatically: update the source documents, refresh the index, and the system uses current information.

Fine-tuning requires retraining to incorporate changes. For organizations with frequently changing information, this becomes a significant operational burden. Many fine-tuning projects stall because teams can't maintain freshness.

Cost Efficiency

RAG infrastructure has upfront costs but scales efficiently. Adding documents to the knowledge base is inexpensive; retrieval costs are modest; inference uses standard model pricing.

Fine-tuning has high upfront costs (training compute, data preparation, expertise) and recurring costs (retraining as data changes). For large knowledge bases, fine-tuning compute costs can be substantial.

Transparency and Trust

RAG enables attribution—every response can cite its sources. Users can verify information. Auditors can trace decisions. This transparency builds appropriate trust.

Fine-tuned models are black boxes for specific facts. When a model generates a specification or policy detail, there is no direct way to trace it back to a source. Did the model learn it correctly? Is it hallucinating? Because the knowledge lives in the weights, attribution is difficult at best.

Governance and Control

RAG respects enterprise data governance. Access controls are enforced at query time. Sensitive documents stay in controlled repositories. Audit trails track what information was accessed.

Fine-tuning bakes information into model weights, complicating data governance. Once trained, controlling access to specific information is difficult. Models may leak training data in unexpected ways.

The Hybrid Approach: RAG + Fine-Tuning

Some applications benefit from combining both approaches.

RAG for Facts, Fine-Tuning for Style

Use RAG to retrieve accurate information and a fine-tuned model to generate responses in the appropriate style. The retrieval system provides facts; the fine-tuned model provides voice.

Example: A customer support AI retrieves product documentation (RAG) but generates responses in the company's specific brand voice (fine-tuning).

Fine-Tuning for Improved Retrieval Use

Fine-tuning can improve how models use retrieved context—better extraction, more faithful summarization, improved reasoning over documents.

Example: Fine-tune on examples of high-quality responses that correctly synthesize retrieved content. The model learns patterns for effective retrieval use.

Domain Adaptation + Knowledge Retrieval

Light fine-tuning adapts the model to domain vocabulary and conventions; RAG provides specific knowledge. This combines fine-tuning's strength (style/vocabulary) with RAG's strength (specific facts).

Making the Decision

Use this framework to choose your approach:

Start with RAG If:

- Your application requires accurate facts from enterprise documents

- Information changes frequently (monthly or more often)

- You need attribution and source citations

- Multiple user groups need different data access

- Audit and compliance requirements exist

- You have large document collections (thousands or more)

- Time-to-production is important (RAG deploys faster)

Consider Fine-Tuning If:

- You need consistent style, tone, or voice

- Domain vocabulary requires significant adaptation

- Output format must be highly consistent

- You're building on a smaller, stable knowledge base

- Reasoning patterns need to follow specific methodologies

Consider Hybrid If:

- You need both factual accuracy AND specific style

- RAG retrieval quality could benefit from model adaptation

- Your use case has distinct fact and style requirements
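The checklists above can be condensed into a small decision helper. This is a deliberate simplification: the `Requirements` fields, the `recommend` function, and the any-signal rule are assumptions made for illustration, and a real decision would weigh these factors rather than treat them as switches.

```python
from dataclasses import dataclass

@dataclass
class Requirements:
    """Yes/no answers to a subset of the checklist questions."""
    needs_document_facts: bool = False
    data_changes_frequently: bool = False
    needs_citations: bool = False
    per_group_access: bool = False
    needs_consistent_style: bool = False
    needs_domain_vocabulary: bool = False
    needs_strict_output_format: bool = False

def recommend(req: Requirements) -> str:
    """Map requirements to 'rag', 'fine-tuning', or 'hybrid'."""
    rag_signal = any([
        req.needs_document_facts,
        req.data_changes_frequently,
        req.needs_citations,
        req.per_group_access,
    ])
    tuning_signal = any([
        req.needs_consistent_style,
        req.needs_domain_vocabulary,
        req.needs_strict_output_format,
    ])
    if rag_signal and tuning_signal:
        return "hybrid"
    if tuning_signal:
        return "fine-tuning"
    # With no signals either way, default to RAG as the starting point.
    return "rag"

support_bot = Requirements(needs_document_facts=True, needs_consistent_style=True)
choice = recommend(support_bot)
```

Here `choice` is "hybrid" because the example needs both document facts and a consistent style.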

Common Mistakes to Avoid

Mistake 1: Fine-Tuning for Factual Knowledge

Organizations often try fine-tuning when they need the model to "know" enterprise information. This rarely works well. Fine-tuned models hallucinate when asked about specific details they didn't learn well. RAG is almost always better for factual knowledge.

Mistake 2: Underinvesting in Retrieval Quality

RAG quality depends on retrieval quality. Many projects implement basic RAG, see mediocre results, and conclude RAG doesn't work. The problem is usually poor retrieval—wrong documents surfaced, important content missed, irrelevant chunks included. Investing in retrieval precision pays enormous dividends.
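Retrieval quality can be measured directly, before any model is involved. The sketch below computes precision@k and recall@k for a single query against a hand-labeled set of relevant documents; the document ids and labels are invented, and the code assumes the ranked list contains no duplicates.

```python
def precision_at_k(retrieved: list[str], relevant: set[str], k: int) -> float:
    """Share of the top-k results that are relevant (hits divided by k)."""
    if k <= 0:
        return 0.0
    hits = sum(1 for doc_id in retrieved[:k] if doc_id in relevant)
    return hits / k

def recall_at_k(retrieved: list[str], relevant: set[str], k: int) -> float:
    """Share of all relevant documents that appear in the top-k results."""
    if not relevant:
        return 0.0
    hits = sum(1 for doc_id in retrieved[:k] if doc_id in relevant)
    return hits / len(relevant)

# Hypothetical ranked results and ground-truth labels for one query.
ranked_results = ["d3", "d7", "d1", "d9"]
relevant_docs = {"d1", "d2", "d3"}

p_at_2 = precision_at_k(ranked_results, relevant_docs, k=2)
r_at_2 = recall_at_k(ranked_results, relevant_docs, k=2)
```

Low precision corresponds to irrelevant chunks being included; low recall corresponds to important content being missed. Tracking both over a labeled query set shows whether a retrieval change helped.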

Mistake 3: Ignoring Maintenance Requirements

Both approaches require ongoing maintenance, but the nature differs. RAG needs index updates, retrieval tuning, and document freshness management. Fine-tuning needs periodic retraining and training data management. Budget for ongoing maintenance, not just initial deployment.

Mistake 4: Overcomplicating the First Implementation

Start simple and iterate. Basic RAG often outperforms elaborate fine-tuning on factual tasks. Get a simple system working, measure results, then optimize. Many projects fail by overengineering before validating the approach.

The Enterprise Reality

In practice, RAG has become the dominant approach for enterprise AI applications. The reasons are practical: enterprises need accurate responses about their specific data, that data changes frequently, and accountability requires traceability.

Fine-tuning remains valuable for specific use cases—style, vocabulary, output format—but it's rarely sufficient alone for enterprise knowledge applications. Most successful enterprise AI systems use RAG as the foundation, sometimes enhanced with light fine-tuning for style or retrieval optimization.

The question isn't really "RAG or fine-tuning?" For enterprise knowledge applications, RAG is the starting point. The question is how to build RAG infrastructure that delivers enterprise-grade accuracy, security, and maintainability.

Build Enterprise RAG That Actually Works

Contextual AI provides the enterprise RAG platform that delivers accuracy without the infrastructure burden. Purpose-built parsing, precision retrieval, enterprise security, and continuous optimization—so your team can focus on applications, not plumbing.

FAQ

What is the difference between RAG and fine-tuning?

RAG (retrieval-augmented generation) retrieves relevant documents at query time and includes them in the prompt. Fine-tuning trains domain-specific knowledge into model weights. RAG provides context at inference; fine-tuning modifies the model itself.

Is RAG better than fine-tuning?

For enterprise knowledge applications requiring factual accuracy, RAG typically outperforms fine-tuning. RAG retrieves exact source information and enables attribution. Fine-tuning is better for style, tone, and vocabulary adaptation where specific facts matter less.

When should I use fine-tuning instead of RAG?

Use fine-tuning when you need consistent style or tone, domain-specific vocabulary, structured output formats, or specific reasoning patterns. Fine-tuning excels at changing how the model writes and reasons rather than what specific facts it knows.

Can I use both RAG and fine-tuning together?

Yes, hybrid approaches combine RAG for factual knowledge with fine-tuning for style and vocabulary. This pairs RAG's accuracy on facts with fine-tuning's ability to adapt tone and domain language.

Why does RAG reduce hallucination more than fine-tuning?

RAG grounds responses in retrieved documents: the model synthesizes from provided content rather than generating from patterns. Fine-tuned models must recall facts from parameters and often hallucinate details that weren't learned well during training.

Related Articles

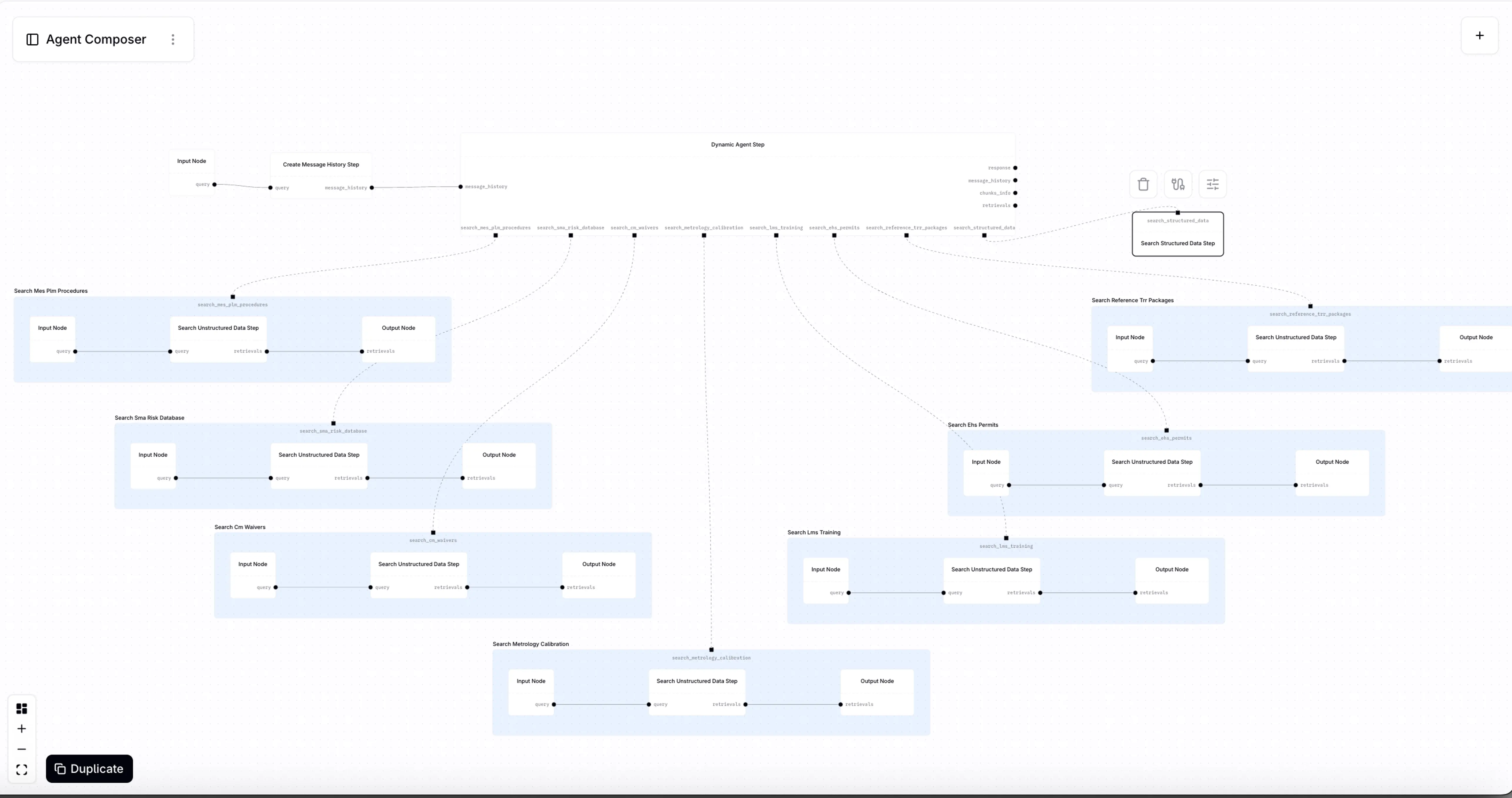

Introducing Agent Composer—AI for when it IS rocket science

Introducing Agent Composer: Expert AI for complex engineering. Cut hours of specialized, technical work down to minutes.

What is Context Engineering?