Aligning language models with human preferences is a critical component in Large Language Model (LLM) development, significantly enhancing model capabilities and safety.

In production-grade enterprise AI systems, specialized AI models need to be precisely aligned to the needs and preferences of individual customers and use cases. We have found that conventional alignment methods are underspecified, making specialization challenging.

Today, we share solutions that address underspecification along two predominant axes: alignment data and alignment algorithms. These solutions let us tailor LLMs to specific use cases and customer preferences far more effectively. Compared to conventional methods, we’ve observed a ~2x performance boost using our methods.

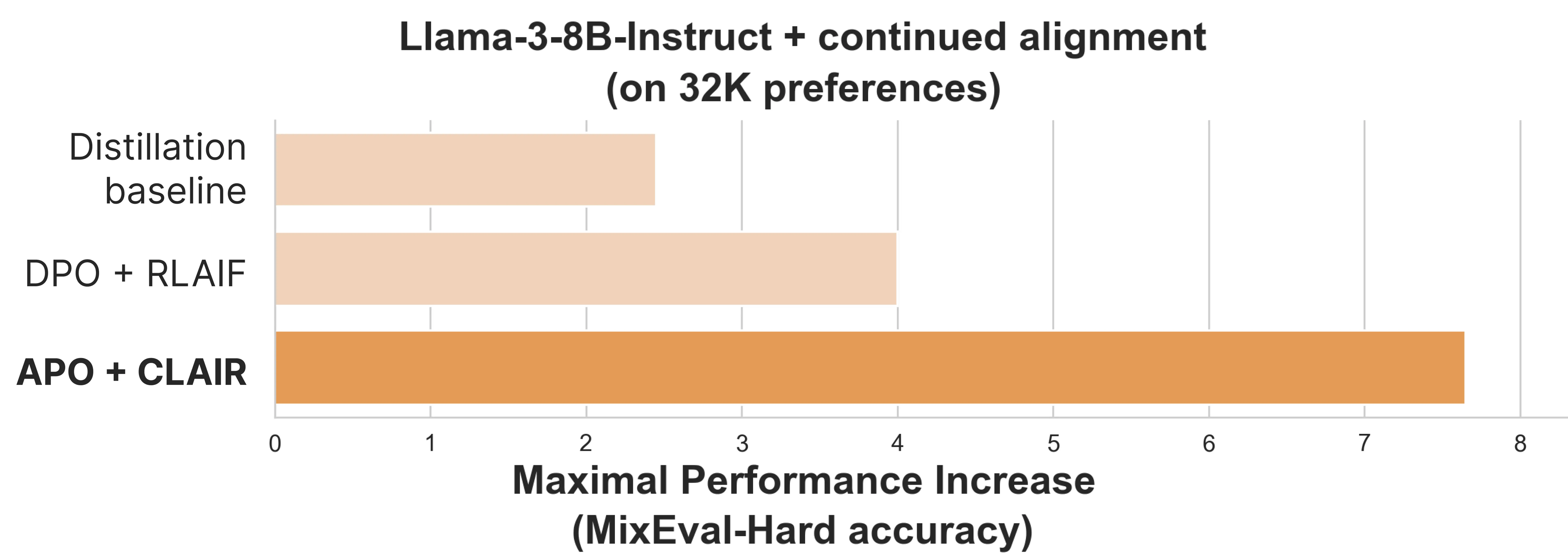

Our methods (APO and CLAIR) result in a ~2x performance increase for continued alignment of Llama-3-8B-Instruct compared to conventional alignment methods (DPO and RLAIF).

We offer a detailed description of these methods in our new paper. In this blog post, we outline our methods conceptually and discuss how they impact alignment:

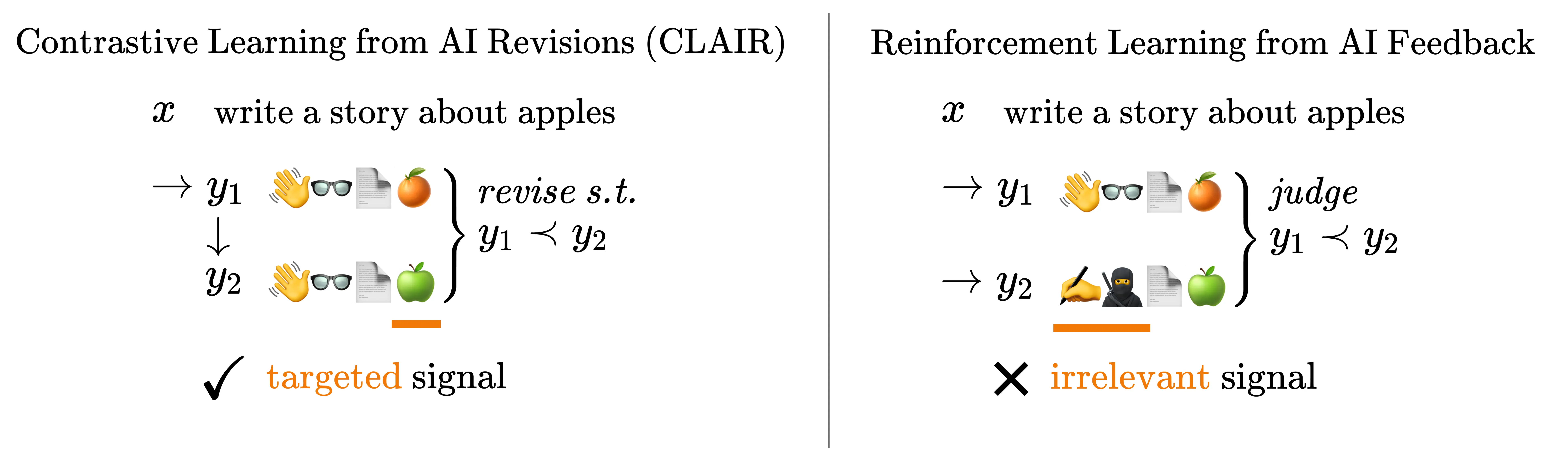

- We introduce Contrastive Learning from AI Revisions (CLAIR). CLAIR is a new data paradigm that captures preferences in more detail, giving AI developers precise control over alignment data.

- We introduce Anchored Preference Optimization (APO). APO is a new training algorithm that better specifies how a model should learn from preferences, giving AI developers precise control over model changes.

Precise Preferences

Preferences can be captured in statements such as “for this task, solution A was worse than solution B”, summarized as “A<B”. These paired solutions are used to update the language model in correspondence with the preferences.

In practice, this approach to preference data presents a problem. If the solutions A and B are not really comparable, it becomes very challenging to conclude what precise aspect of solution A made it worse than B.

This has important ramifications for alignment: a model trained on such pairs may not exhibit the exact behavior the developer intended and may instead pick up on irrelevant differences between A and B. This phenomenon is sometimes referred to as “Reward Hacking”.

To solve this, we introduce Contrastive Learning from AI Revisions (CLAIR). CLAIR uses a secondary AI system to minimally revise a solution A→A’ such that the resulting preference A<A’ is much more contrastive and precise. This gives AI developers more control over the exact signal expressed in their preference data.
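To make the difference between the two data paradigms concrete, here is a minimal sketch of how a revision-based pair (A<A’) and a judged pair (A<B) could be assembled. The names `PreferencePair`, `clair_pair`, `judged_pair`, `toy_reviser`, and `toy_judge` are hypothetical and exist only for illustration; in practice the reviser and the judge would be calls to a secondary AI system rather than the string operations used here.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class PreferencePair:
    prompt: str
    rejected: str  # the losing solution (A)
    chosen: str    # the winning solution (A' or B)


def clair_pair(prompt: str, answer: str,
               revise: Callable[[str, str], str]) -> PreferencePair:
    """Build A < A' by minimally revising a single answer."""
    revised = revise(prompt, answer)
    return PreferencePair(prompt=prompt, rejected=answer, chosen=revised)


def judged_pair(prompt: str, answer_a: str, answer_b: str,
                judge: Callable[[str, str, str], bool]) -> PreferencePair:
    """Build a pair from two independent answers ranked by a judge."""
    a_wins = judge(prompt, answer_a, answer_b)
    chosen, rejected = (answer_a, answer_b) if a_wins else (answer_b, answer_a)
    return PreferencePair(prompt=prompt, rejected=rejected, chosen=chosen)


# Toy stand-ins for the secondary AI system (assumptions, not a real reviser/judge).
def toy_reviser(prompt: str, answer: str) -> str:
    return answer.replace("Lyon", "Paris")


def toy_judge(prompt: str, a: str, b: str) -> bool:
    # Returns True when answer `a` is preferred over answer `b`.
    return "Paris" in a and "Paris" not in b


prompt = "What is the capital of France?"
answer = "The capital of France is Lyon."
other = "Paris! It has been the capital for centuries and is lovely in spring."

pair = clair_pair(prompt, answer, toy_reviser)
judged = judged_pair(prompt, answer, other, toy_judge)
```

In the revised pair, the only difference between the rejected and chosen text is the corrected city. In the judged pair, the chosen answer also differs in length, tone, and extra detail, none of which is the reason it was preferred.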

Contrastive Revisions (CLAIR) lead to a targeted preference signal, whereas judged preferences (e.g., RLAIF) can contain irrelevant signals.

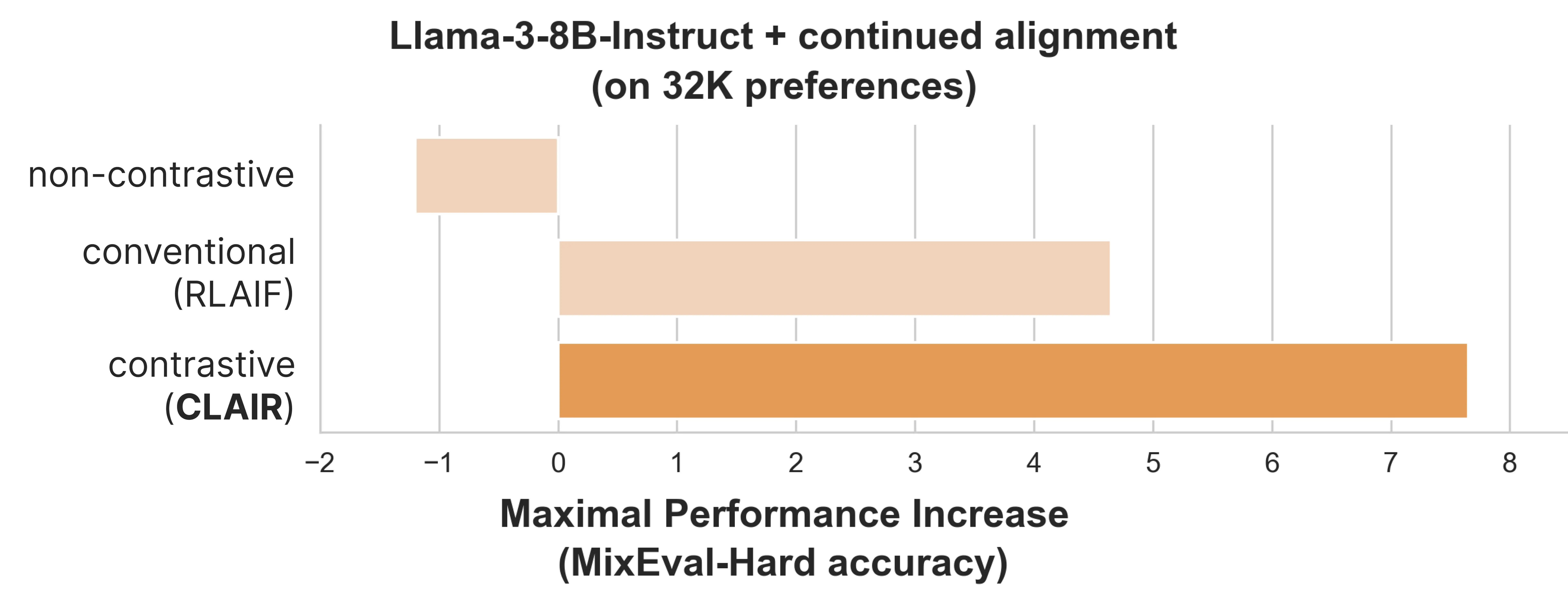

We use CLAIR to further align the very strong Llama-3-8B-Instruct model across a range of instructions. We compare it to a conventional method of creating preferences, and a non-contrastive baseline where solutions A and B are less contrastive. We measure the performance increase on MixEval-Hard, a challenging benchmark that correlates highly with human opinion on which model is stronger. For the same amount of data, CLAIR improves the model the most, and the contrastiveness of CLAIR is a major driver of performance:

Contrastive preference pairs lead to the greatest performance increase when continuing to align Llama-3-8B-Instruct.
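One simple way to get a feel for contrastiveness is to measure how much two solutions overlap. The sketch below uses word-level Jaccard similarity as a proxy; this choice of metric, the whitespace tokenization, and the example strings are assumptions made for illustration and are not necessarily how contrastiveness was measured for the results above.

```python
def jaccard_similarity(a: str, b: str) -> float:
    """Word-level Jaccard similarity: |shared words| / |all words|."""
    words_a = set(a.lower().split())
    words_b = set(b.lower().split())
    if not words_a and not words_b:
        return 1.0  # two empty strings are treated as identical
    return len(words_a & words_b) / len(words_a | words_b)


original = "The capital of France is Lyon."
revised = "The capital of France is Paris."           # minimal revision (A')
unrelated = "Paris has been France's capital for centuries."  # independent answer (B)

revision_overlap = jaccard_similarity(original, revised)
judged_overlap = jaccard_similarity(original, unrelated)
```

A higher overlap means fewer incidental differences between the losing and winning solution, so the preference signal is concentrated on the part that actually changed. Here `revision_overlap` is much larger than `judged_overlap`.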

Anchored Alignment

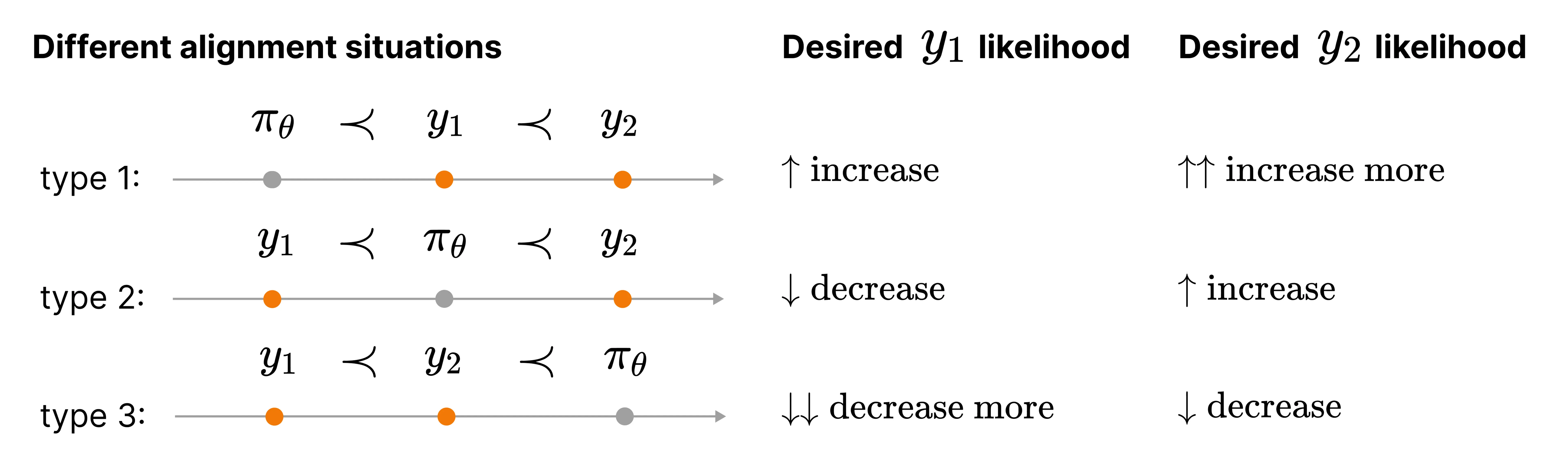

Given a model M, alignment training may look considerably different depending on whether M is generally stronger or weaker than the solutions A and B in the preference pair. For example, a strong model can still learn meaningful things from the A<B preference, even if both solutions A and B are generally worse than what the model would produce itself. We find that it is essential to account for this model-data relation during training. Conventional alignment methods don’t capture this information and inadvertently hurt performance.
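The underspecification is easy to see in the standard DPO objective, which depends only on the margin between the winning and losing log-ratios. The sketch below evaluates that loss for single scalar log-ratios (log-probability under the model minus log-probability under the reference model); the function names and the example values are illustrative assumptions.

```python
import math


def log_sigmoid(x: float) -> float:
    """Numerically stable log(sigmoid(x))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def dpo_loss(chosen_logratio: float, rejected_logratio: float,
             beta: float = 0.1) -> float:
    """Standard DPO loss for one pair; only the margin enters the loss."""
    margin = beta * (chosen_logratio - rejected_logratio)
    return -log_sigmoid(margin)


# Three very different outcomes with the same margin of 1.0:
both_more_likely = dpo_loss(2.0, 1.0)    # winner and loser both go up
both_less_likely = dpo_loss(-1.0, -2.0)  # winner and loser both go down
pulled_apart = dpo_loss(0.5, -0.5)       # winner up, loser down
```

All three calls return the same loss, so the objective by itself does not say whether the winning solution should become more or less likely than it was under the reference model.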

The relation between model and output quality creates different alignment scenarios. The desired likelihood change during training differs between alignment scenarios.

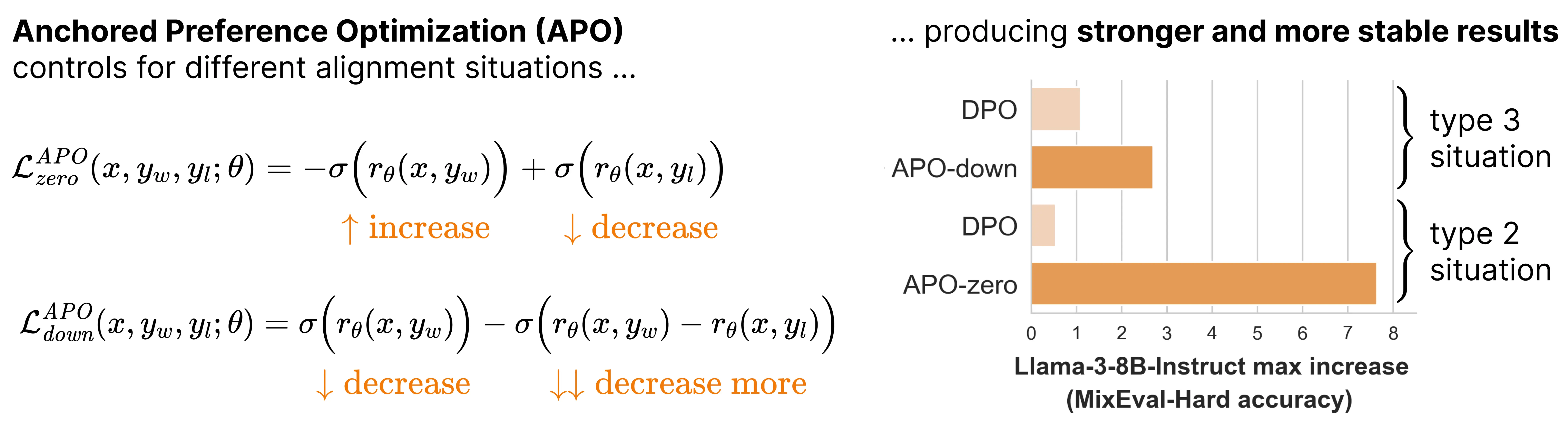

To tackle this, we introduce Anchored Preference Optimization (APO). APO uses simple constraints during training to account for the relationship between the model and preference data. This allows AI developers to specify how exactly they want the model M to learn from preference pairs during training.

We consider two APO variants, tailored to alignment situations 2 and 3 as outlined in the figure above. Aligning Llama-3-8B-Instruct with these objectives produces stronger and more stable results compared to using an underspecified alignment objective, which is not tailored to any specific situation.
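As a rough sketch of what anchoring can look like, the snippet below writes down two objectives in the spirit of the two variants: one that pushes the winning solution up and the losing solution down relative to the reference model, and one that pushes the winning solution down while pushing the losing solution down further. The names `apo_zero_loss` and `apo_down_loss`, the `beta` scale, and the exact functional forms are assumptions for illustration; the paper has the definitive objectives and how they map to each alignment situation.

```python
import math


def sigmoid(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def apo_zero_loss(chosen_logratio: float, rejected_logratio: float,
                  beta: float = 0.1) -> float:
    """Assumed form: raise the winner and lower the loser, each anchored at the reference."""
    raise_winner = 1.0 - sigmoid(beta * chosen_logratio)
    lower_loser = sigmoid(beta * rejected_logratio)
    return raise_winner + lower_loser


def apo_down_loss(chosen_logratio: float, rejected_logratio: float,
                  beta: float = 0.1) -> float:
    """Assumed form: lower the winner, and lower the loser even more."""
    lower_winner = sigmoid(beta * chosen_logratio)
    widen_margin = 1.0 - sigmoid(beta * (chosen_logratio - rejected_logratio))
    return lower_winner + widen_margin


# Same margin of 1.0 between winner and loser, different absolute movement:
zero_apart = apo_zero_loss(0.5, -0.5)   # winner up, loser down
zero_both_up = apo_zero_loss(2.0, 1.0)  # both up
down_both_down = apo_down_loss(-1.0, -2.0)  # both down
down_both_up = apo_down_loss(2.0, 1.0)      # both up
```

Because each term is tied to the reference model rather than only to the margin, the sketched losses tell these cases apart: `zero_apart` is lower than `zero_both_up`, and `down_both_down` is lower than `down_both_up`.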

Anchored Preference Optimization (APO) variants use simple controls to account for different alignment situations, achieving stronger and more stable alignment results.

Get Started

We thank our collaborators at Ghent University (Chris Develder, Thomas Demeester) and Stanford University (Christopher Potts). We’re releasing our work on CLAIR and APO. Read the paper or get started with the code.

If you want to learn more about alignment research at Contextual AI, check out the KTO blog post.