Announcing our $20m seed round to build the next generation of language models

Large language models, or LLMs, are going to radically change the way we work, and in many ways they are already starting to do so. With AI going fully mainstream this year, however, we are also getting more clarity on its shortcomings for real-world usage, especially in enterprise use cases. In our view, the current generation of LLMs is amazing as a first-generation technology, but these LLMs are still largely “NSFW”: they are not suitable for work quite yet. At Contextual AI, we want to change that, and build AI that works for work.

Our Journey

In our journeys as research leaders at top AI institutions, both in industry (Microsoft Research, Meta/Facebook AI Research, Hugging Face) and academia (Cambridge, NYU, Stanford), we have always been attracted to the most difficult problems. We first started working together at Facebook in 2016, where we built a multimodal framework that synthesizes information from text, images and video to help deal with especially difficult problems, such as detecting hate speech in memes, catching the sale of illicit goods and fighting misinformation. After Facebook, we both went to Hugging Face in early 2022, where we worked on large language model technology and multimodality, and pushed the envelope on model evaluation.

Together we’ve been involved in many high-impact projects over the years. Researchers and practitioners in AI might know us from our work in foundation models (RAG, FLAVA, UniT, Bloom), dense retrieval (Hallucination reduction, MDR, Unsupervised QA), evaluation (SentEval, GLUE, SuperGLUE, Dynabench, Eval on the Hub), multimodality (Hateful Memes, Winoground, TextVQA, MMF) and representation learning (InferSent, GroundSent, Poincare embeddings).

We have seen firsthand how AI can have a positive impact on the world, and how gratifying it is to help state-of-the-art AI research turn into something concrete that actually makes the world a better place. We are excited to continue that journey at Contextual AI.

The round

We are emerging from stealth today with $20m in seed funding, in a round led by Bain Capital Ventures (BCV) and with participation from Lightspeed, Greycroft, SV Angel and well-known angel investors including Elad Gil, Lip-Bu Tan, Sarah Guo, Amjad Masad, Harry Stebbings, Fraser Kelton, Sarah Niyogi and Nathan Benaich.

We were selective and thoughtful about which investors we wanted to work with and why. Beyond the dollar investment, it was important to us that we work with those who shared our belief that AI for business requires responsible stewards and exceptional attention to precision. We are grateful for their continued support of our mission and vision, and especially grateful to Bain Capital Ventures for leading our round.

What problems are we solving?

Real-world AI deployments require trust, safety, data privacy, low latency and a high degree of customizability. Sending valuable private data to external API endpoints can have unintended consequences for enterprises because they have very little control over the LLM that powers the application. We are most excited for Contextual AI to solve the following problems for enterprise needs:

- Data privacy: In the modern era, and even more so in the future, data moats are one of the only clear differentiators for an enterprise. Companies want to safeguard that raw data, not be forced to send it to somebody else to process.

- Customizability: LLMs should be easily adaptable to new types of data, new data sources and new types of use cases. But current LLMs are hidden behind an API endpoint, with almost no control for the end user.

- Hallucination: LLMs make things up, and what’s worse, they tell you the wrong answer with a high degree of confidence and can’t tell you how they arrived at that conclusion. That’s unacceptable in most workplaces.

- Compliance: There is no clear-cut way to remove information from an LLM, or even just to revise it, which makes them risky from a compliance perspective.

- Staleness: ChatGPT does not know anything that happened after September 2021, and the most downloaded model on the Hugging Face Hub is BERT, which still thinks that Obama is president. LLMs should always be up to date and handle fresh data as it comes in, in real time.

- Latency and cost: State-of-the-art systems are too slow and/or too expensive for many applications, partially because they require a round-trip to somebody else’s servers, and partially because the models themselves are just too slow.

LLMs designed for the enterprise

Thinking about language models from first principles, with these challenges in mind, leads you to a different kind of model. Rather than cramming everything into the parameters of one giant, generalist model that does “AGI” (artificial general intelligence), what companies really need is “ASI”—artificial specialized intelligence. Why waste parameters, money, latency and compute on a model that knows Shakespeare and quantum physics, when all it should really do is solve your company’s problem?

The best approach for this is “Retrieval Augmented Generation”, or RAG, where a generative model is made to work together with a retrieval-based data store, like a vector database, to adapt a model to a given use case and custom data. We are deeply confident in this approach, given that we pioneered the first effort around RAG when we were at Facebook AI Research and published the first papers and open-source models on it in 2020.

In the RAG paradigm, an LLM is made to work together with an external memory, which yields a system that is less hallucinatory and more customizable to new data sources. In other words, it has the potential to overcome many of the issues we listed above. However, current attempts at retrieval-augmenting GPTs, where indie developers cobble together a VectorDB and an LLM, suffer from many of those same shortcomings and inherit many of the issues of the underlying LLM that powers most of that chain.
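For readers unfamiliar with the basic retrieve-then-generate pattern, here is a minimal, purely illustrative sketch of the loosely coupled setup described above. It is not Contextual AI's system: the bag-of-words `embed` function stands in for a learned encoder, the in-memory list stands in for a vector database, and all function names are hypothetical. The final call to a generative model is deliberately left out; the sketch only shows how retrieved text ends up in the prompt.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy embedding: lowercase word counts (assumption: stands in for a learned encoder)."""
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, documents, k=1):
    """Return the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]


def build_prompt(query, documents, k=1):
    """Place the retrieved passages in front of the question for a generator to condition on."""
    context = "\n".join(retrieve(query, documents, k))
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"


# Hypothetical private documents held in the external memory.
docs = [
    "The refund policy allows returns within 30 days of purchase.",
    "Office hours are 9am to 5pm on weekdays.",
]

prompt = build_prompt("What is the refund policy?", docs)
print(prompt)
```

Because the knowledge lives in `docs` rather than in model parameters, updating or deleting a document changes what the generator sees on the next query, without any retraining. In this naive version the retriever and the generator are still independent components, so nothing is jointly optimized.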

Research in augmented language models has not stood still, however, and it is possible to build LLMs and platforms that are much more closely integrated, specialized, efficient and optimized. In our view, decoupling the memory from the generative capacity of LLMs, with different modules taking care of data integrations, reasoning, speaking, and even seeing and listening, all while being closely integrated and jointly optimized, is the future of these models and what will unlock their true potential for enterprise use cases in particular.

With a technology as important and disruptive as LLMs, we believe there should be more players, and that it is very dangerous to have this technology only in the hands of a few incumbent big tech corporations. Our approach both supports and is supported by open source. We plan to leverage open source software as much as we can and to give back to the community in return. Open source, and open science, should help democratize the technology, for the benefit of all.

This journey is 1% finished

One of our favorite slogans at Facebook was always, “this journey is only 1% finished”. The same is true for AI in general: the world is only just discovering its power. As founders, we are humbled by the support from our amazing team of investors, as well as our core team of rock star engineers, scientists and others.

Our seed round will allow us to kickstart our journey and scale up the team. We’re hiring for many different roles (Bay Area, in-office as much as possible). Please see our Careers portal if you’re interested in joining our team.

We’re excited to go on this journey to build the next generation of LLMs, designed from first principles with the enterprise use case in mind. If you’d like to learn more, please send us a note.

Our press release in full is below:

Contextual AI emerges from stealth to build the next generation of language models, for the enterprise

Founded by former Hugging Face and Meta AI researchers, Contextual AI raised $20 million in seed funding from Bain Capital Ventures, Lightspeed and Greycroft.

June 7, 2023 – Palo Alto, CA – Contextual AI emerged from stealth today with $20 million in funding led by Bain Capital Ventures (BCV) with participation from Lightspeed, Greycroft, SV Angel and well-known angel investors including Elad Gil, Lip-Bu Tan, Sarah Guo, Amjad Masad, Harry Stebbings, Fraser Kelton, Sarah Niyogi and Nathan Benaich. The company is creating large language models (LLMs) that are purpose-built for enterprises, such that they respect data privacy and are safer, more trustworthy, more customizable and more efficient than the current generation of LLMs.

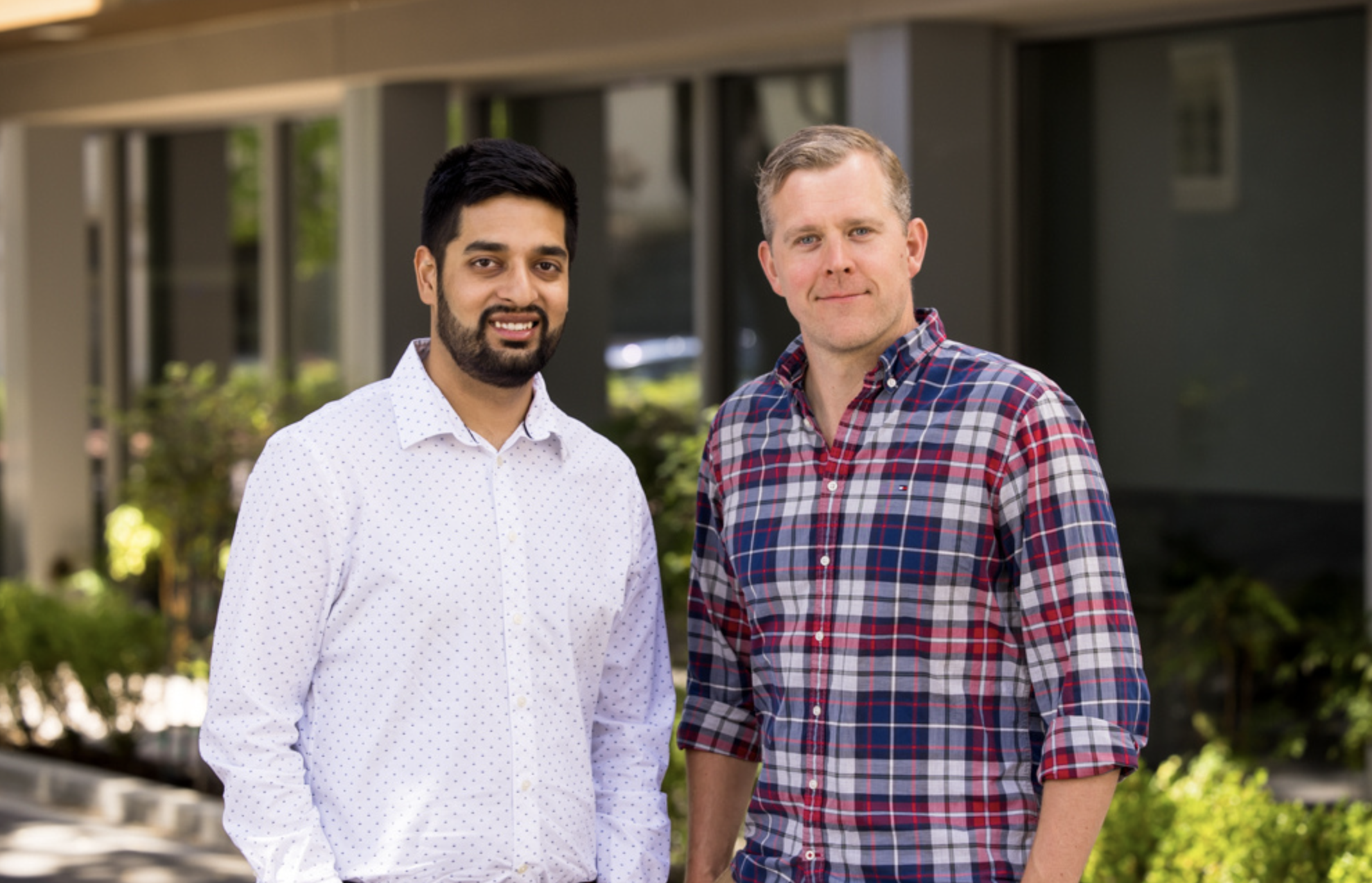

Co-founders Douwe Kiela and Amanpreet Singh both have deep and tenured backgrounds in artificial intelligence research. The two first met as research leaders at Meta AI in 2016 and continued to build their relationship and shared passion for cutting edge research at Hugging Face. Kiela is also currently an adjunct professor at Stanford University.

Contextual AI’s proprietary research builds on the already established Retrieval Augmented Generation (RAG) method that Kiela helped pioneer while at Meta. The current generation of LLMs, while very impressive, is still NSFW – not suitable for work. In particular, the company will solve the following enterprise challenges:

- Hallucination: LLMs are known to make up information, and what is worse, they do so with very high confidence.

- Attribution: We have no real way of knowing why LLMs say what they say, so we can’t easily double-check their outputs or correct their mistakes.

- Compliance: There is no clear-cut way to remove information from an LLM, or even just to revise it, which makes them risky from a compliance perspective.

- Customization: LLMs should generalize to and be connectable with new data sources on the fly, as the data becomes available.

- Data privacy: Companies should not have to send their valuable private data to somebody else’s API endpoint.

“With 10+ years of experience as researchers in AI, natural language processing and machine learning, my cofounder Amanpreet and I are on a mission to take this powerful technology to the next step, where it can be used in real-world enterprise applications,” said Douwe Kiela, co-founder and CEO of Contextual AI. “Knowledge workers of the future need LLMs that work accurately, efficiently and effectively over huge private datasets, in a way that companies can trust.”

“Douwe and Aman are exceptional technologists who have been on the leading edge of AI research for many years. We’re excited to partner and help them bring AI to the many enterprises who are balancing its benefits with the need for security and data privacy,” said Aaref Hilaly, partner at Bain Capital Ventures.

Several Fortune 500 companies are currently in talks about piloting the company’s contextual language models on their own data. To join the waitlist, visit contextual.ai.

About Contextual AI

Founded in 2023 by Douwe Kiela and Amanpreet Singh, Contextual AI offers a pioneering approach to generative AI for the workplace. With the ability to customize models to each company’s individual data sources, Contextual AI offers a more secure, accurate and efficient way to empower knowledge workers to do their best work.
