Introducing OLMoE: A small, fully open, and state-of-the-art mixture-of-experts LLM

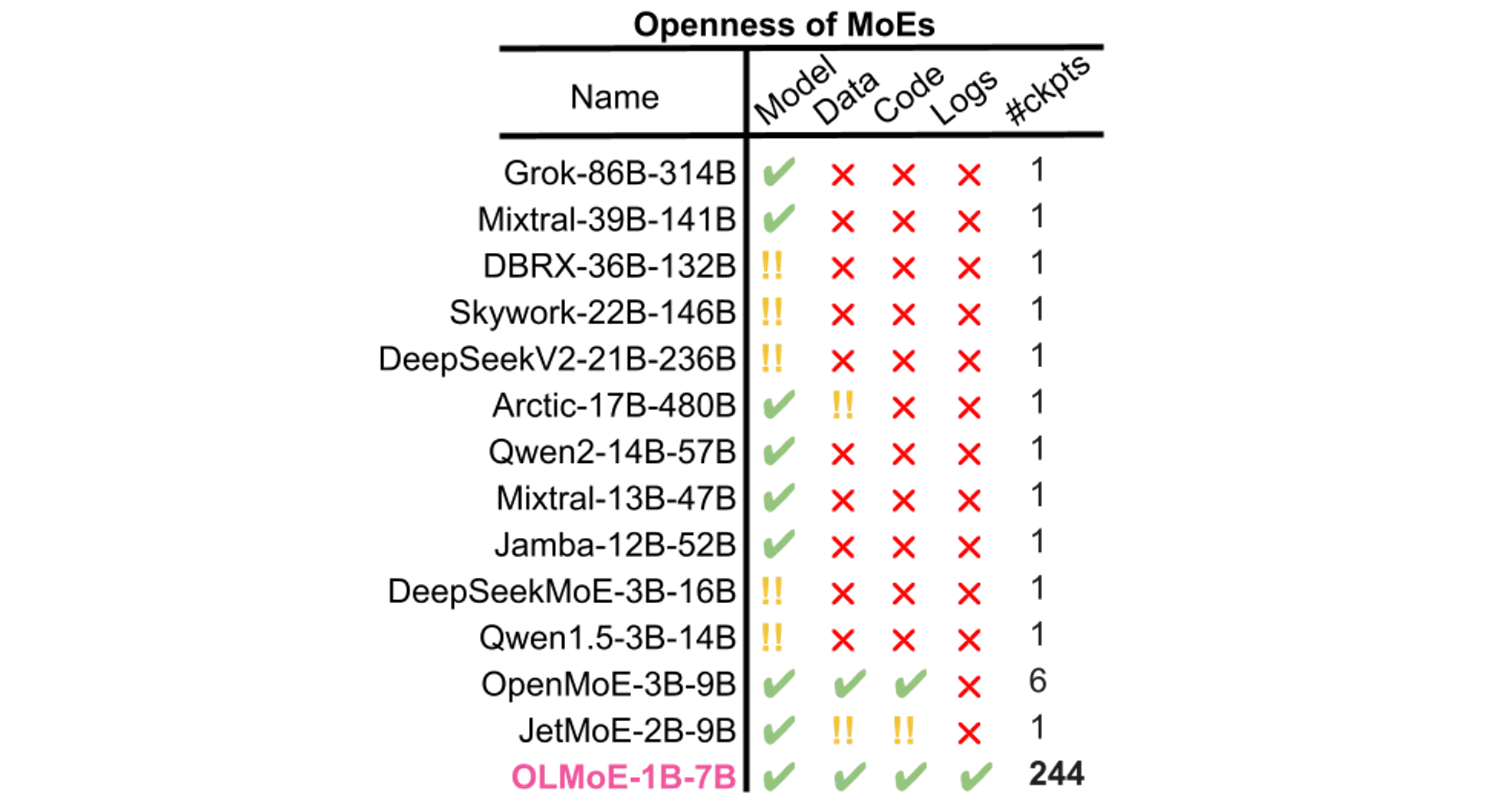

Today, together with the Allen Institute for AI, we’re announcing OLMoE, a first-of-its-kind fully open-source mixture-of-experts (MoE) language model that is best in class when performance and cost are considered together. OLMoE is pretrained from scratch and released with open data, code, logs, and intermediate training checkpoints.

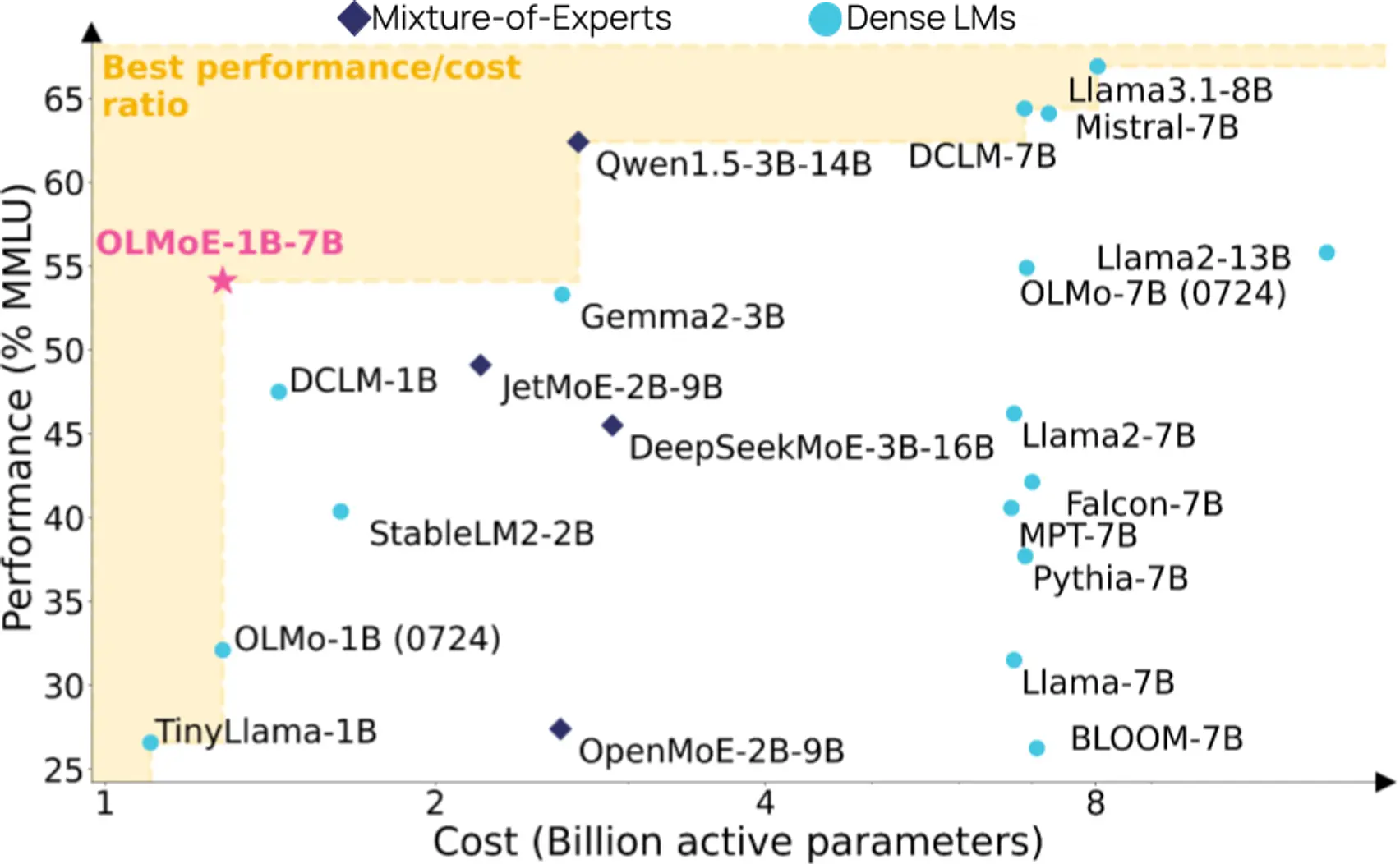

Typically, open-source language models need a large number of parameters to achieve acceptable benchmark performance, which makes them difficult to use in low-latency, on-device, and edge deployments. OLMoE is a sparse MoE model with 1 billion active and 7 billion total parameters, which allows it to run easily on common edge devices (e.g., the latest iPhone) while achieving MMLU performance similar to or better than that of much larger models.
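To make the active-versus-total distinction concrete, here is a back-of-envelope sketch using the rounded figures above. It assumes weight memory is simply total parameters × bytes per parameter (ignoring KV cache, activations, and runtime overhead) and uses the common rule of thumb of about 2 FLOPs per active parameter per token for a forward pass. The helper names and the precision table are illustrative assumptions, not part of the OLMoE release.

```python
# Back-of-envelope sizing for a sparse MoE, using the rounded figures from the text.
# Hypothetical helpers; ignores KV cache, activations, and runtime overhead.

ACTIVE_PARAMS = 1e9  # parameters used per token (rounded)
TOTAL_PARAMS = 7e9   # parameters that must be stored (rounded)

# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"fp32": 4.0, "bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(total_params: float, dtype: str) -> float:
    """Memory for the weights alone: every expert must be resident."""
    return total_params * BYTES_PER_PARAM[dtype] / 1e9


def forward_gflops_per_token(active_params: float) -> float:
    """Rule of thumb: ~2 FLOPs (one multiply, one add) per active parameter."""
    return 2 * active_params / 1e9


if __name__ == "__main__":
    for dtype in BYTES_PER_PARAM:
        print(f"{dtype}: {weight_memory_gb(TOTAL_PARAMS, dtype):.1f} GB of weights")
    moe = forward_gflops_per_token(ACTIVE_PARAMS)
    dense = forward_gflops_per_token(TOTAL_PARAMS)  # a dense model of the same total size
    print(f"MoE: {moe:.0f} GFLOPs/token vs dense 7B: {dense:.0f} GFLOPs/token")
```

Under these simplifying assumptions, storage scales with the 7B total parameters (about 14 GB in bf16, about 3.5 GB at 4 bits), while per-token compute scales with the 1B active parameters, roughly a seventh of what a dense 7B model would need.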

With OLMoE, AI researchers and developers now have access to a best-in-class, fully open-source MoE model that can be deployed almost anywhere. We believe OLMoE is ideal for building faster RAG systems for latency-constrained use cases, which are often deployed on less powerful edge devices (e.g., mobile devices, vehicles, and IoT devices). We have previously demonstrated significant efficiency gains for RAG with methods such as GRIT, which can easily be combined with OLMoE for even greater computational efficiency.

Because the OLMoE release includes not only the model itself but also the training code, training dataset, and logs, we hope developers can more effectively start experimenting and building applications with the new model right away.

Training OLMoE

OLMoE is a decoder-only LLM built from MoE transformer layers, each containing 64 small experts, of which 8 are activated for each token. We pretrained OLMoE on 5.1 trillion tokens for 10 days on 256 NVIDIA H100 Tensor Core GPUs, using PyTorch FSDP, mixed-precision training, and two auxiliary router losses: a router z-loss and a load-balancing loss. We observe that MoEs train 2x faster than dense LLMs with an equivalent number of active parameters. We gained many insights into MoE pretraining by running 18 pretraining ablations on dense LLMs and MoEs; see the full paper on arXiv for details. We also release a version of OLMoE adapted through instruction tuning followed by preference tuning.
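The routing described above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the OLMoE implementation: it scores all 64 experts with a softmax, keeps the top 8 per token without renormalizing their weights (an assumption of this sketch), and computes a router z-loss in the style of ST-MoE and a load-balancing loss in the style of the Switch Transformer. All function names are hypothetical; see the paper for the exact loss formulations and coefficients OLMoE uses.

```python
import math
import random

NUM_EXPERTS = 64  # experts per MoE layer, as stated above
TOP_K = 8         # experts activated per token, as stated above


def softmax(logits):
    # Subtract the max for numerical stability.
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def logsumexp(logits):
    m = max(logits)
    return m + math.log(sum(math.exp(x - m) for x in logits))


def route(logits, k=TOP_K):
    """Pick the k highest-probability experts for one token.

    Returns (indices, weights). Weights are the softmax probabilities of the
    chosen experts, not renormalized (an assumption of this sketch).
    """
    probs = softmax(logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    return top, [probs[i] for i in top]


def moe_layer(x, expert_fns, router_logits, k=TOP_K):
    # Only the k selected experts run; their outputs are mixed by router weight.
    top, weights = route(router_logits, k)
    return sum(w * expert_fns[i](x) for i, w in zip(top, weights))


def router_z_loss(batch_logits):
    # ST-MoE-style z-loss: mean over tokens of the squared log-sum-exp of the
    # router logits; it discourages router logits from growing too large.
    return sum(logsumexp(l) ** 2 for l in batch_logits) / len(batch_logits)


def load_balancing_loss(batch_logits, k=TOP_K):
    # Switch-style auxiliary loss: N * sum_i f_i * P_i, where f_i is the fraction
    # of token-to-expert assignments sent to expert i and P_i is the mean router
    # probability for expert i. Equals 1.0 under uniform router probabilities
    # and grows as routing concentrates on a few experts.
    n = len(batch_logits[0])
    counts = [0] * n
    prob_sums = [0.0] * n
    for logits in batch_logits:
        top, _ = route(logits, k)
        for i in top:
            counts[i] += 1
        for i, p in enumerate(softmax(logits)):
            prob_sums[i] += p
    num_tokens = len(batch_logits)
    f = [c / (num_tokens * k) for c in counts]
    p_mean = [s / num_tokens for s in prob_sums]
    return n * sum(fi * pi for fi, pi in zip(f, p_mean))


if __name__ == "__main__":
    random.seed(0)
    batch = [[random.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)] for _ in range(16)]
    print("z-loss:", router_z_loss(batch))
    print("load-balancing loss:", load_balancing_loss(batch))
```

In training, both auxiliary losses would be added to the language-modeling loss with small weights; the z-loss keeps router logits numerically well-behaved, and the load-balancing loss penalizes sending most tokens to the same few experts.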

Results

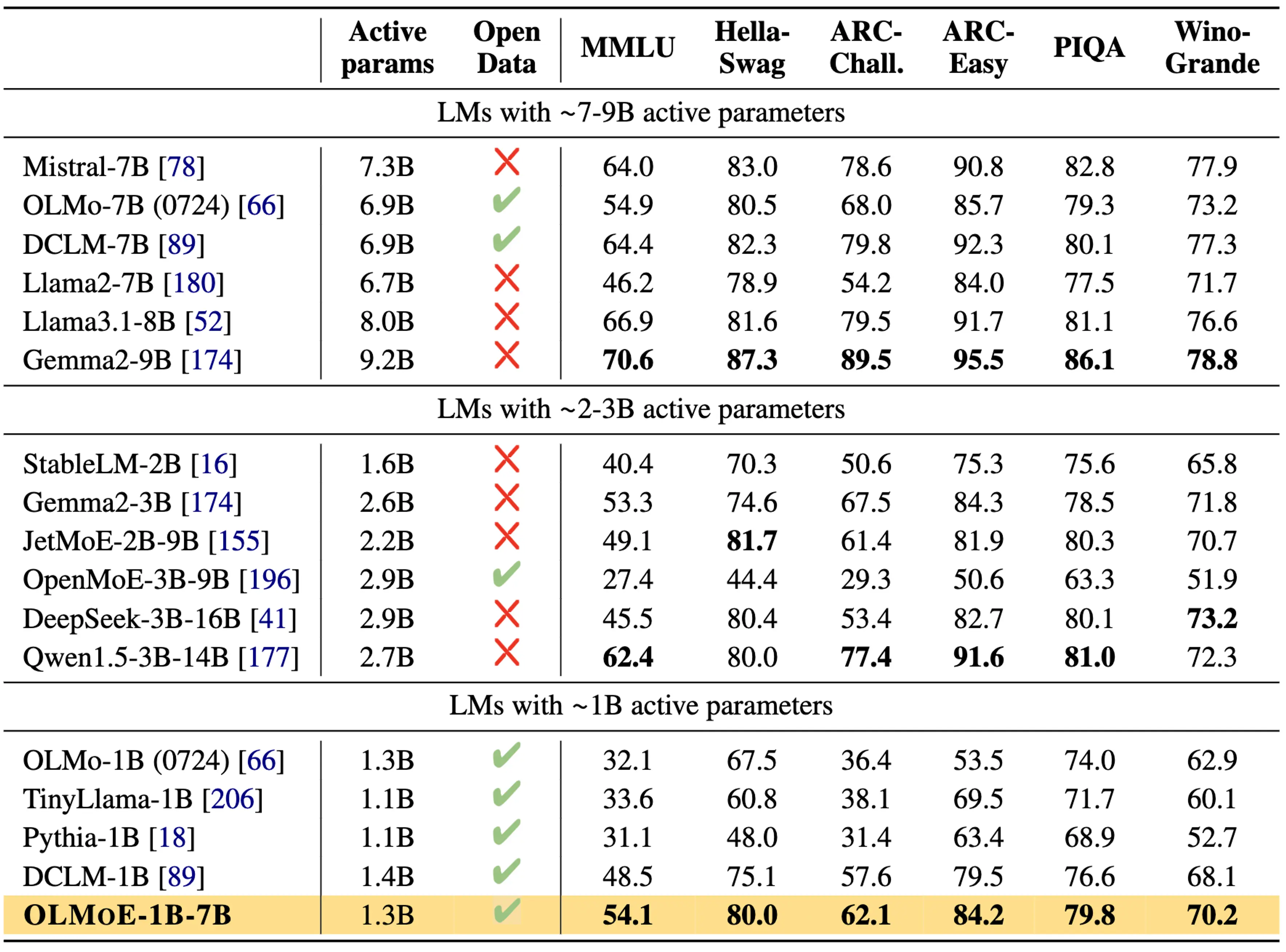

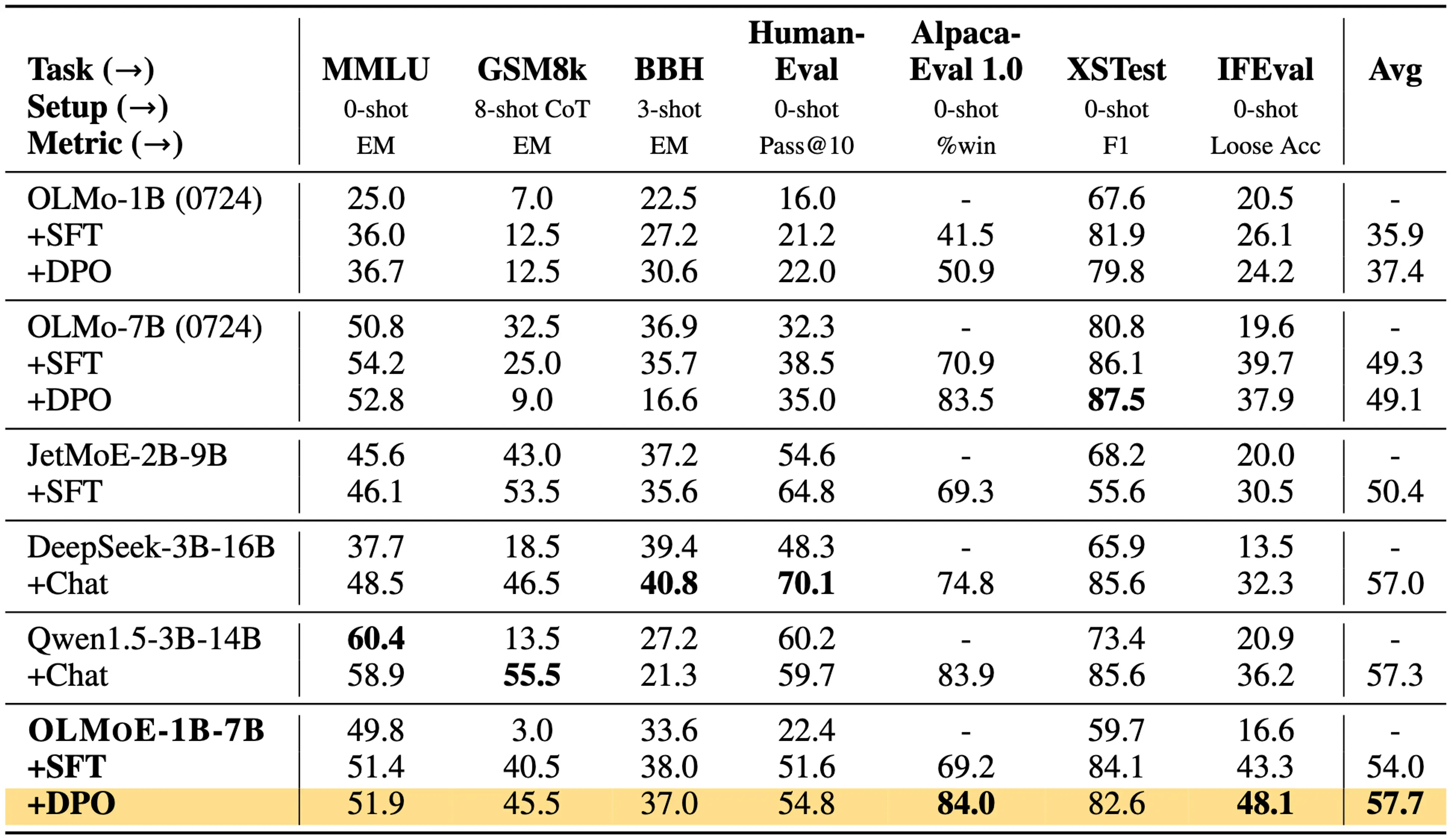

The OLMoE base model performs best among models with fewer than 2B active parameters and consistently outperforms many LLMs in the 7B-parameter range despite using 6-7x less compute. After adaptation (the +DPO model), OLMoE outperforms various larger MoE models (JetMoE, Qwen, DeepSeek) and all prior dense OLMo models.

Opening up future research

Beyond achieving state-of-the-art results on important benchmarks and delivering substantial efficiency gains with a minimal number of parameters, we believe our fully open release will help the community explore open questions that were not possible to study before, for example: Do MoEs saturate earlier than dense models when overtrained? Do MoEs generally work better for GRIT-style models? Can OLMoE be jointly optimized with the retriever to build a more efficient RAG pipeline?

At Contextual AI we are strong supporters of open source and open science. We thank our friends at the Allen Institute for AI for a wonderful collaboration.

See the links below for additional resources.