Open-Sourcing the Best Local Text-to-SQL System

Lessons from BIRD and the Path to Enterprise-Scale

Introduction

Today, we’re open-sourcing Contextual AI’s Text-to-SQL system – the best fully-local solution on the BIRD benchmark. While currently in the top 5 (behind API-based systems using Gemini and GPT-4o), our system held the overall #1 spot in February 2025 and demonstrates that local models can compete with closed-source giants on this critical enterprise task.

Why does this matter? While unstructured data comprises the majority of enterprise information, mission-critical operational data – financial records, customer transactions, inventory metrics – lives primarily in structured databases. Accessing this data requires SQL expertise, creating bottlenecks between business stakeholders and the insights they need. Text-to-SQL systems promise to bridge this gap by automatically translating natural language queries into executable SQL statements, transforming questions like “show me Q4’s top 5 highest revenue customers by region” into queries that return actionable results.

The case for local models is particularly compelling here. Structured data often contains sensitive information (financial transactions, customers’ data, personal data, etc.), making privacy-preserving local AI systems highly desirable. Unlike black-box API models, open-source local models can be customized and further optimized for specific use cases. Our system demonstrates that with the right approach, local models can achieve competitive performance without sacrificing data privacy or control.

Recent advances in language models have opened new possibilities for Text-to-SQL tasks, with benchmarks like BIRD and SPIDER 2.0 providing valuable signals for measuring progress. Through our investigation at Contextual AI, we’ve uncovered key insights about what makes these systems work – and how to push their boundaries.

In this technical blog post, we dive deep into the design decisions and insights behind our pipeline, connecting our findings to the broader literature. We’ll explore how inference-time scaling through parallel candidate generation enables local models to compete with larger API models, why context remains crucial for accuracy, and what our experiments with thinking models revealed about different approaches to scaling compute. While our current system relies on generating multiple candidates (which can be computationally expensive), the use of local models opens doors to further optimization through techniques like parallelization for increased throughputs and reinforcement-learning (RL) training for more efficient sampling.

For those ready to build, we’ve also created a step-by-step Colab Notebook (link) that serves as a primer for exploring the full system. The notebook walks beginners through implementing the core ideas from our solution, providing a foundation to understand and experiment with the complete pipeline. Whether you’re a technical AI builder looking to solve enterprise data access challenges or a researcher exploring the frontiers of Text-to-SQL, this post provides both practical tools and theoretical insights to advance your work.

Contextual-SQL

Existing SoTA Text-to-SQL frameworks like Chase-SQL and Xiyan-SQL often rely on various components like Schema Linking (retrieving tables and columns relevant to the query), Value Retrieval, Chain of Thoughts (CoT) Reasoning, Query Fixer, etc. to generate SQL queries.

While value retrieval and schema linking are promising techniques for reducing the query’s complexity as well as reducing the context length of the schema description, imperfect recall from these stages propagate and become a performance bottleneck.

On the other hand, chain of thoughts reasoning and query refinement are natural choices for scaling inference-time computation. However, the reliability and effectiveness of these approaches are not entirely understood.

We instead aim to investigate the full potential of scaling inference-time computation via sampling, where parallelization and input context caching are benefits of sampling over more sequential methods like CoT or query refinement. We show that by scaling up a relatively simple candidate generation pipeline and selecting promising candidates carefully, we can achieve a top score on the BIRD benchmark.

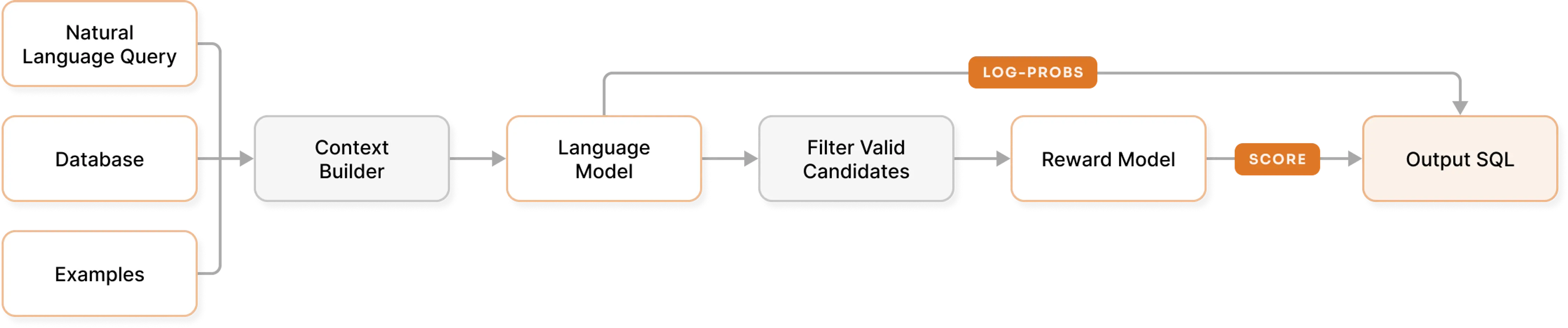

Overview of Contextual-SQL

The core idea behind Contextual-SQL is a 2 stage approach: generate candidates and then identify good ones. More specifically, we provide an informative context to generate a diverse set of candidates, and then select the best candidate by filtering and then ranking candidates.

This pipeline captures 2 important principles in building AI systems: (1) The importance of a good context, and (2) The power of inference-time scaling. We give a brief overview of these 2 ideas below and provide more details in the next section.

The Importance of Context

Context is often the key to solving difficult problems with AI. This principle holds with Text-to-SQL. The main input to an LLM for Text-to-SQL, along with the natural language query, is a textual description of the database that includes the tables’ names, descriptions, their columns, and other useful metadata.

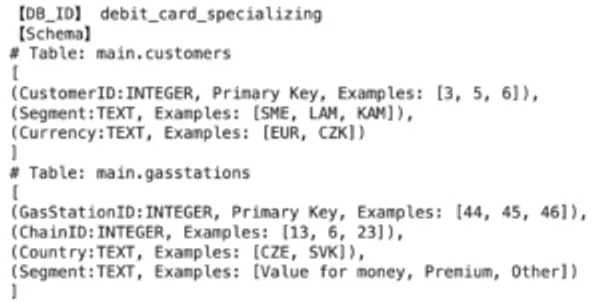

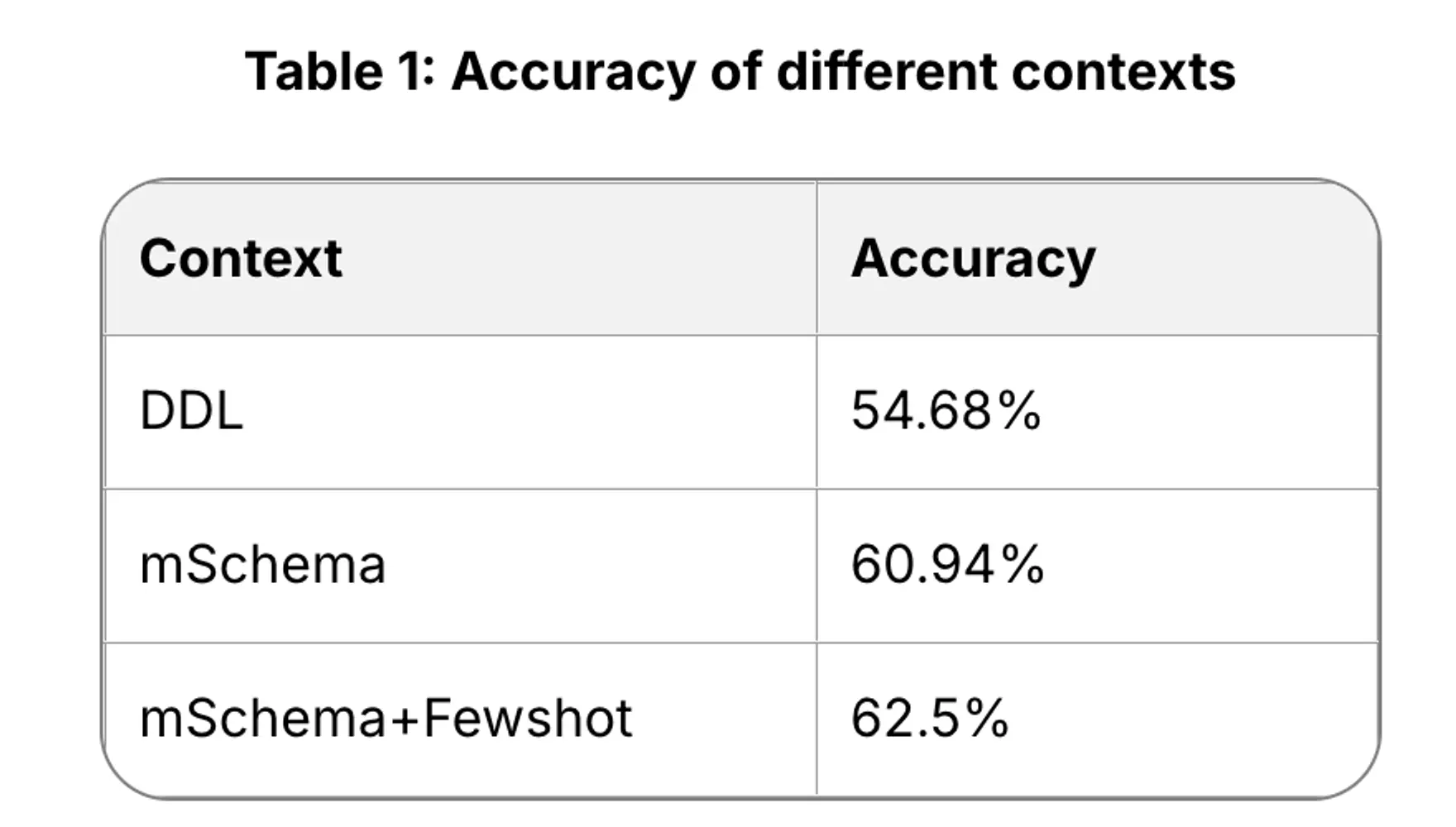

We examine providing context via Data Definition Language (DDL), M-Schema, and adding few-shot examples, and show improved performance with more informative context in Table 1.

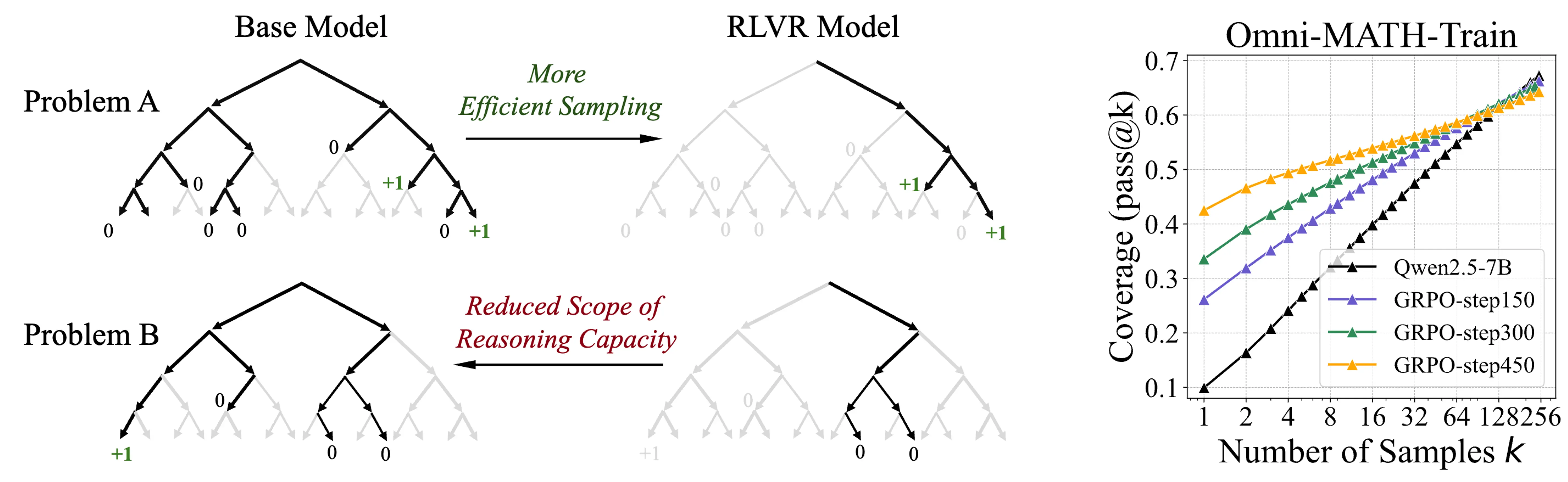

The Power of Inference-time Scaling

Recent research has shown that one can recover a lot of the gains from RL-tuned models on just the base model (without RL) if one samples enough from the base model and somehow selects the right candidate. For example, [Yue et al’ May 25] Does Reinforcement Learning Really Incentivize Reasoning Capacity in LLMs Beyond the Base Model? demonstrates comparable performance of the base model with the RL-trained model with a high enough number of samples, where at K>=128 (right figure), the base model starts to achieve a higher recall than GRPO-tuned models.

Image Credit: [Yue et al’ May 25] Does Reinforcement Learning Really Incentivize Reasoning Capacity in LLMs Beyond the Base Model?

Hence, while RL can induce more efficient sampling towards higher reward regions, one can also achieve similar performance gains by sampling enough diverse candidates and searching for promising ones.

Getting Started: The Importance of a Good Context

In this section, we introduce 3 ways of providing context and perform experiments (provided in this Google Colab notebook) to demonstrate the importance of context: Data Definition Language (DDL), mSchema (by XiYan-SQL), and adding few-shot examples. Check out the notebook if you want to follow along with our results in this Section.

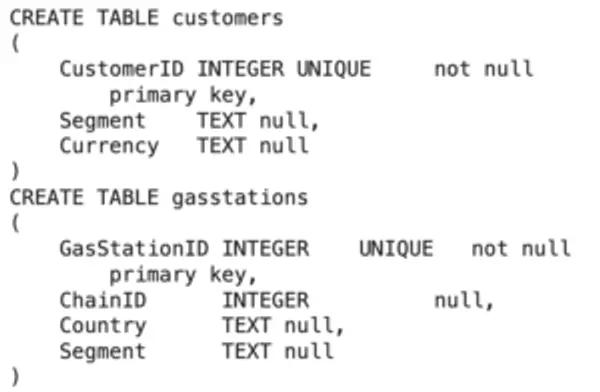

A Basic Schema Description: DDL

One of the most basic ways of describing a database schema to provide context to a language model is via a Data Definition Language or DDL. A DDL is a subset of SQL that defines and manages database schema structures through statements like CREATE, ALTER, and DROP, specifying tables, columns, data types, constraints, indexes, and relationships within a database. This information provides valuable context to the model for understanding table names, column names, data types, foreign key relationships, and constraints to produce SQL queries that align with the actual database structure. Examples:

Adding More Schema Context with Reflection

M-Schema is an attempt at creating a more informative context on top of a more LLM-friendly presentation of the database’s schema. The key idea behind mSchema is leveraging SQLAlchemy’s reflection to provide connections between tables by including foreign key relationships between tables as well as including examples for each column for improving the model’s comprehension. The example below shows how representative examples are added to each column on top of the column’s name and type.

In-Context Learning and Few-Shot Demonstration

Few-shot examples enable in-context learning for text-to-SQL by providing demonstration pairs of natural language questions and corresponding SQL queries within the prompt. These examples provide hints on what a typical question answer pair looks like and how the model should respond. In the Colab notebook, we show that providing just 1 example demonstration improves the model’s performance.

Experiments: The Importance of Context

In the accompanying Google Colab notebook, step by step implementations of building the context for DB schema along with experiments on `credit_card_specialization` subset of the BIRD-eval set (64 questions of varying difficulty) are provided. All you need is a Google API to use Gemini to see the impact of context on the model’s ability to generate the final SQL. The results below demonstrate this point:

In the next section, we scale up this recipe to achieve top scores on BIRD.

Towards SoTA on BIRD: Inference-time Scaling and Candidate Selections

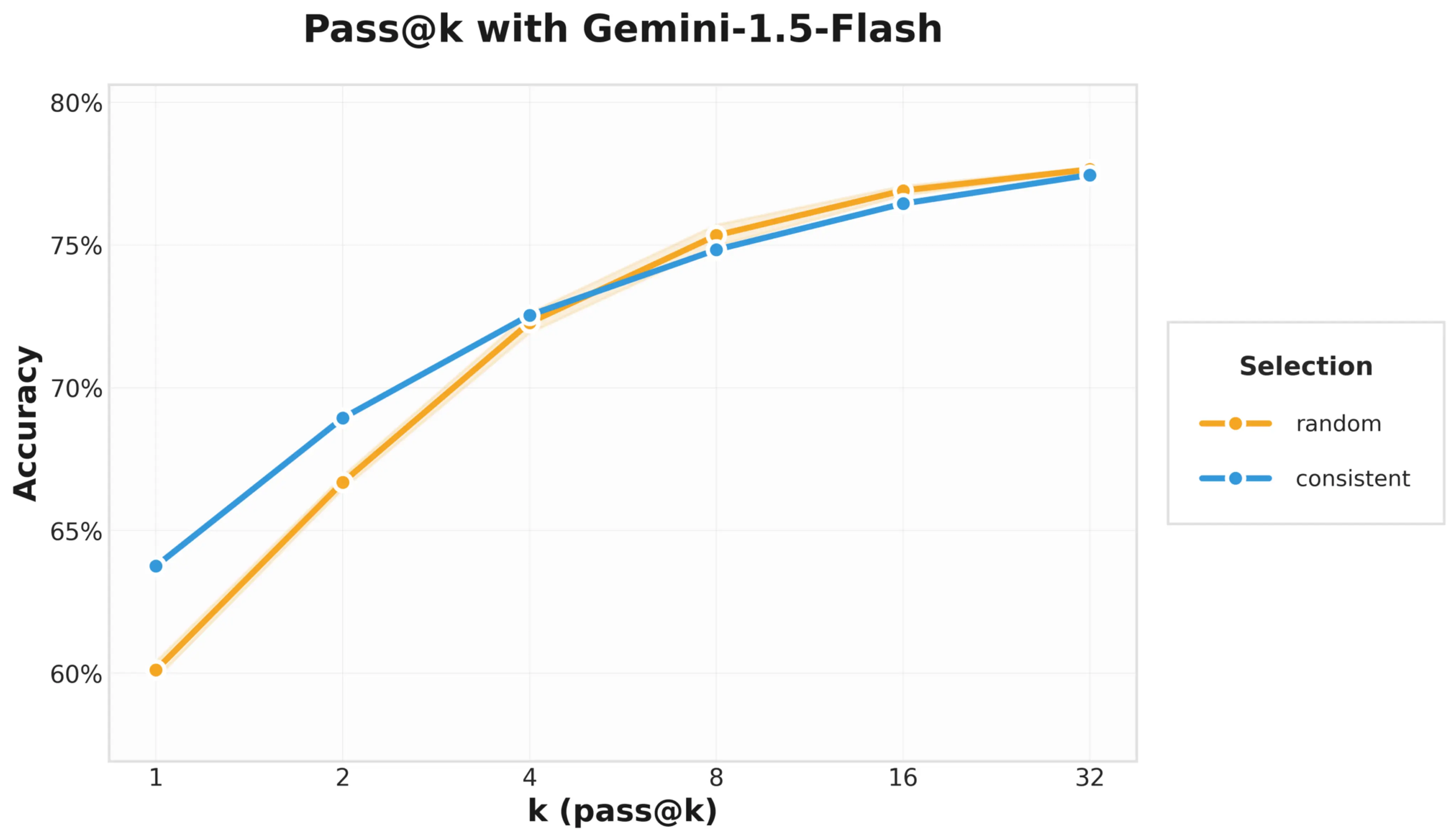

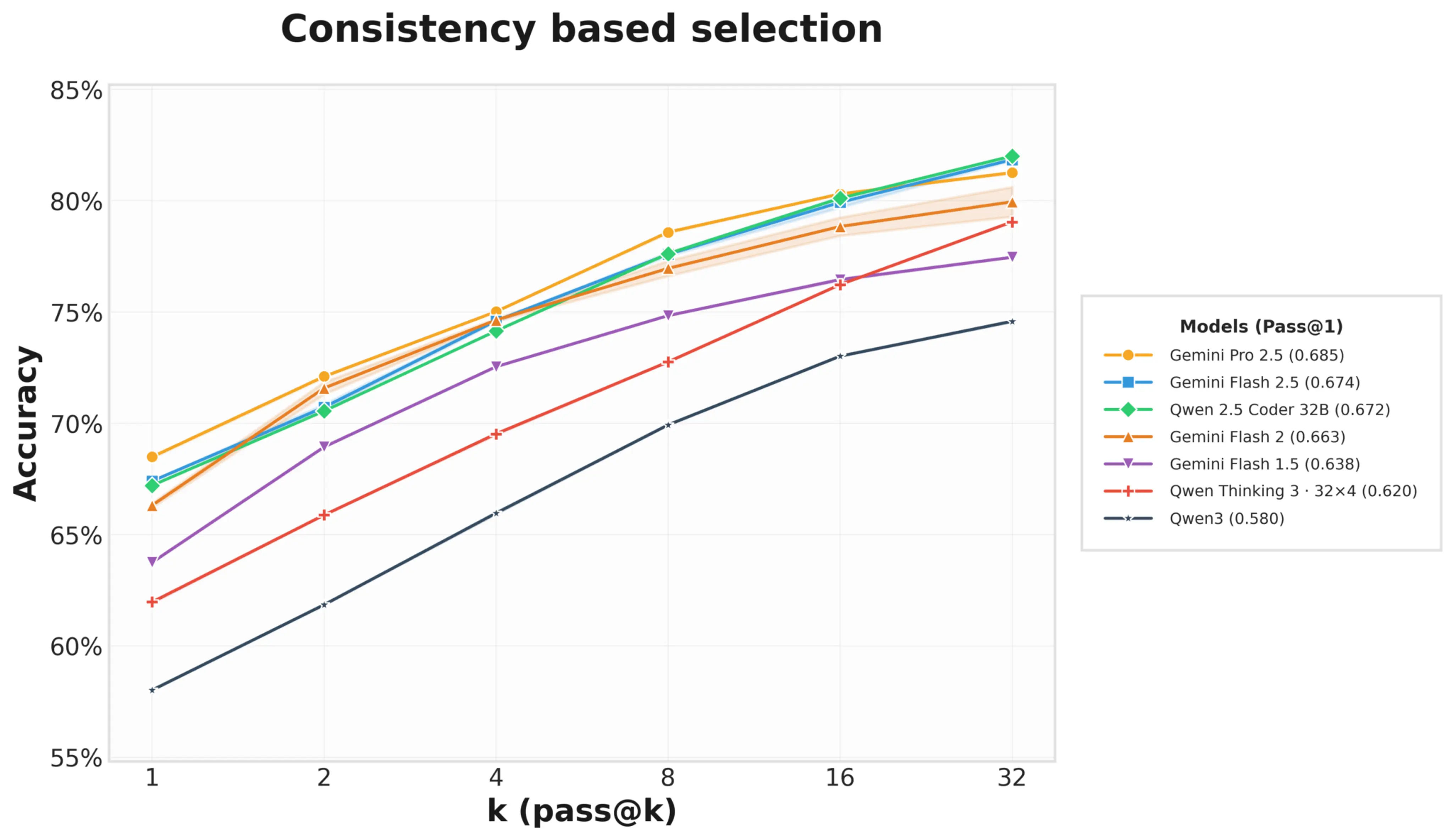

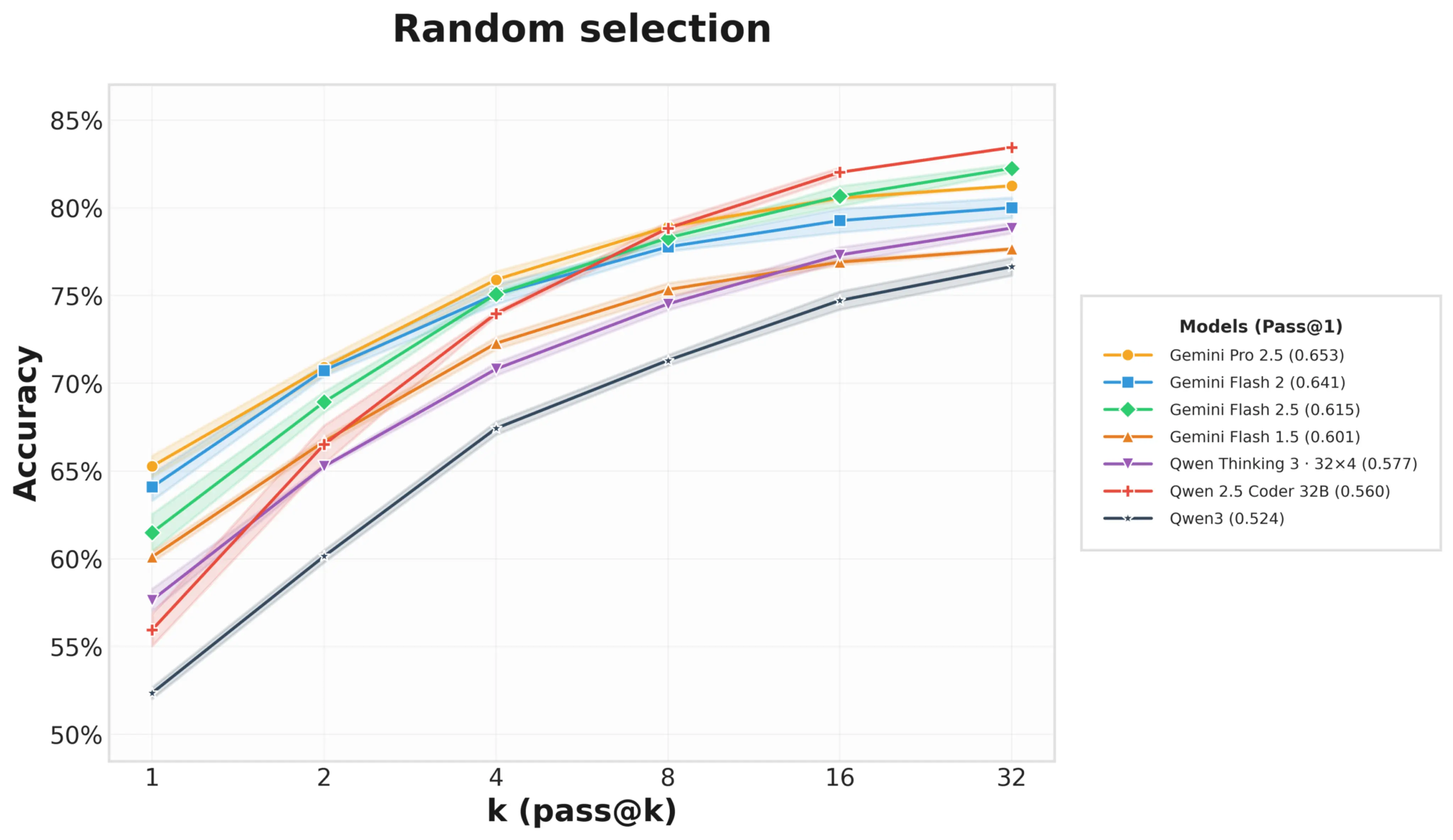

Pass@k for execution accuracy evaluates whether at least one of k generated SQL candidates produces the correct result. Increasing k with diversity-enhancing techniques such as higher sampling temperatures or variations in the prompt (via reordering or changing few shot examples) reveals the model’s latent capability to generate correct queries even when the top-1 prediction fails.

When pass@k demonstrates favorable scaling behavior (substantial improvement as k increases), this indicates the model’s generations contain the correct answer but suffer from high variance. In this scenario, inference-time scaling strategies can be highly effective – specifically, generating numerous diverse candidates and applying filtering mechanisms such as execution success filtering, consistency-based scoring through majority voting, or learned reward models that rank candidates based on query’s output to select the best SQL query from the candidate pool.

In the Figure below, on Gemini-1.5-Flash with the mSchema+example prompting, we generate 1024 candidates by generating 32 samples each (with temperature 1) across 32 different few-shot examples. Then we measure the pass@k performance of random selection of valid non-duplicated candidates versus consistent selection (top valid SQL candidates with the most votes), where valid candidates mean SQL queries that execute successfully (no execution error).

There, we see that the performance improves quite a bit as the number of candidates increases. This suggests that further improvements can be extracted by having a better candidate selection strategy.

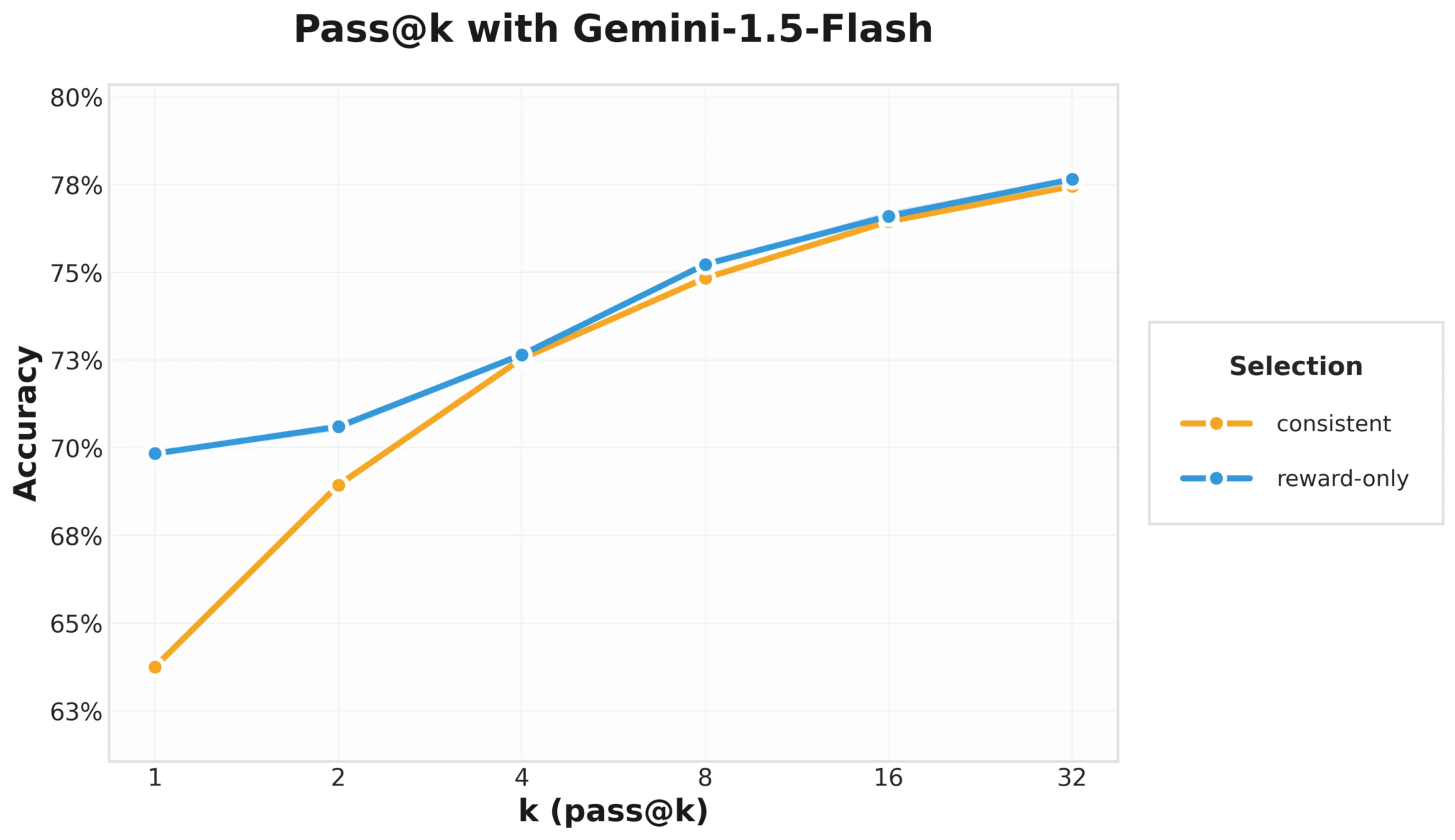

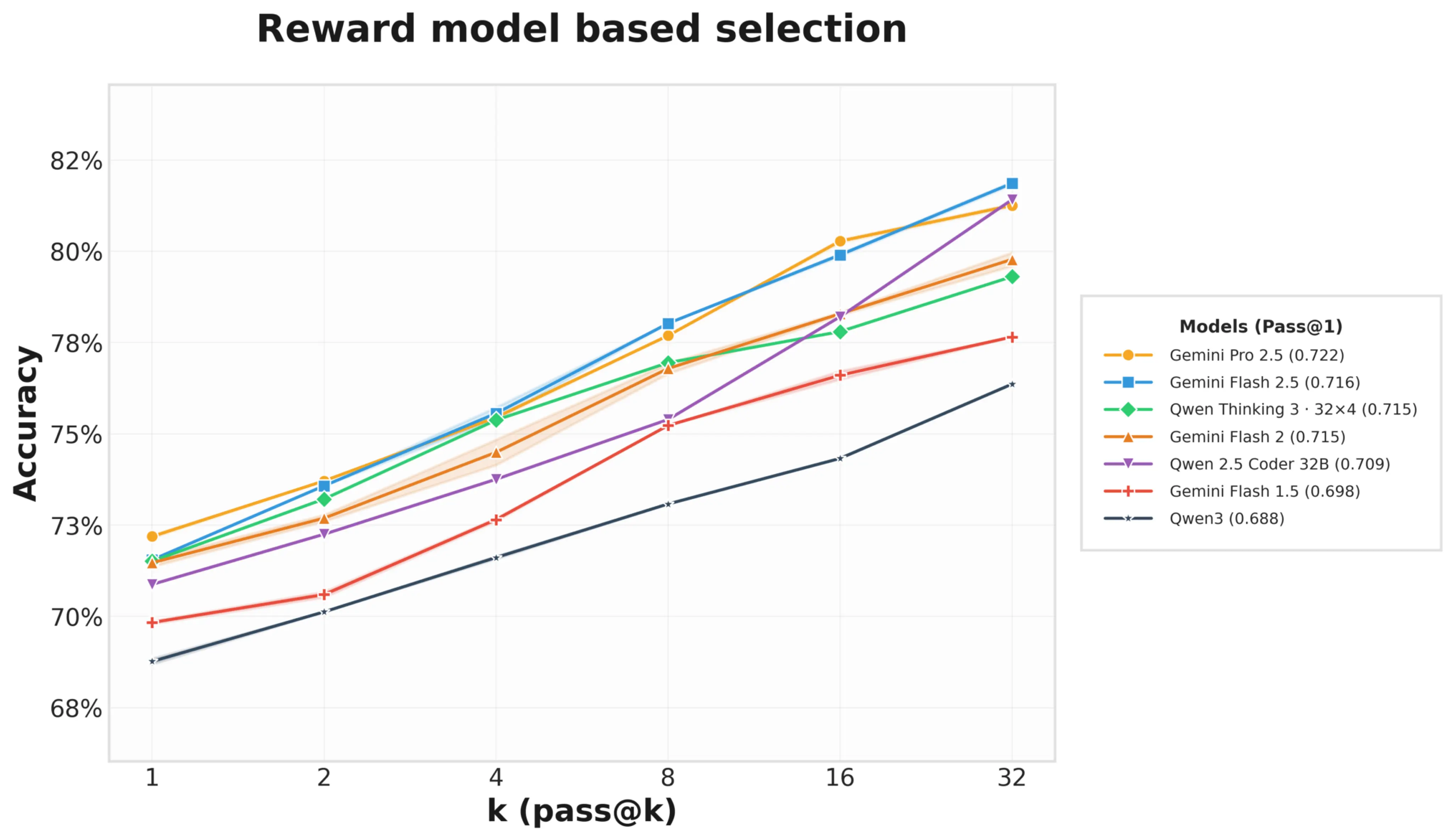

Ranking Candidates with a Reward Model

A natural strategy for selecting candidates is to train a scoring model to rank candidate SQLs’ execution outputs given the DB information and user’s query.

For our pipeline, we train a base Qwen-2.5-32B model on the train split of BIRD to output the probability of a candidate SQL query and its outputs being correct given the DB schema and query as context.

In more details, a large pool of distinct candidate SQL queries is generated for every training question, sorted by their generation likelihood. Every query is then labeled: positives for execution outputs matching the ground‑truth answer and negatives otherwise. During training, for each question within a mini-batch, a positive sample is drawn at random and paired with 15 incorrect candidate SQL queries with the highest likelihood as hard negatives. The training objective for the reward model is to classify correct SQL from the pool of 16. This was implemented with the same codebase we used to train our state‑of‑the‑art reranker.

Indeed, as in the Figure below, selecting top valid candidates with the highest scores given by the reward model improves the pass@1 score of Gemini-1.5-Flash dramatically over consistent (majority voting) and random (among valid non-duplicated candidates) selection strategies:

On the BIRD-dev set, the strategy presented so far already achieves an execution accuracy of 70%, which is already within the top scores.

Improving Consistency: Log-Probs and Fine-Grained Confidence

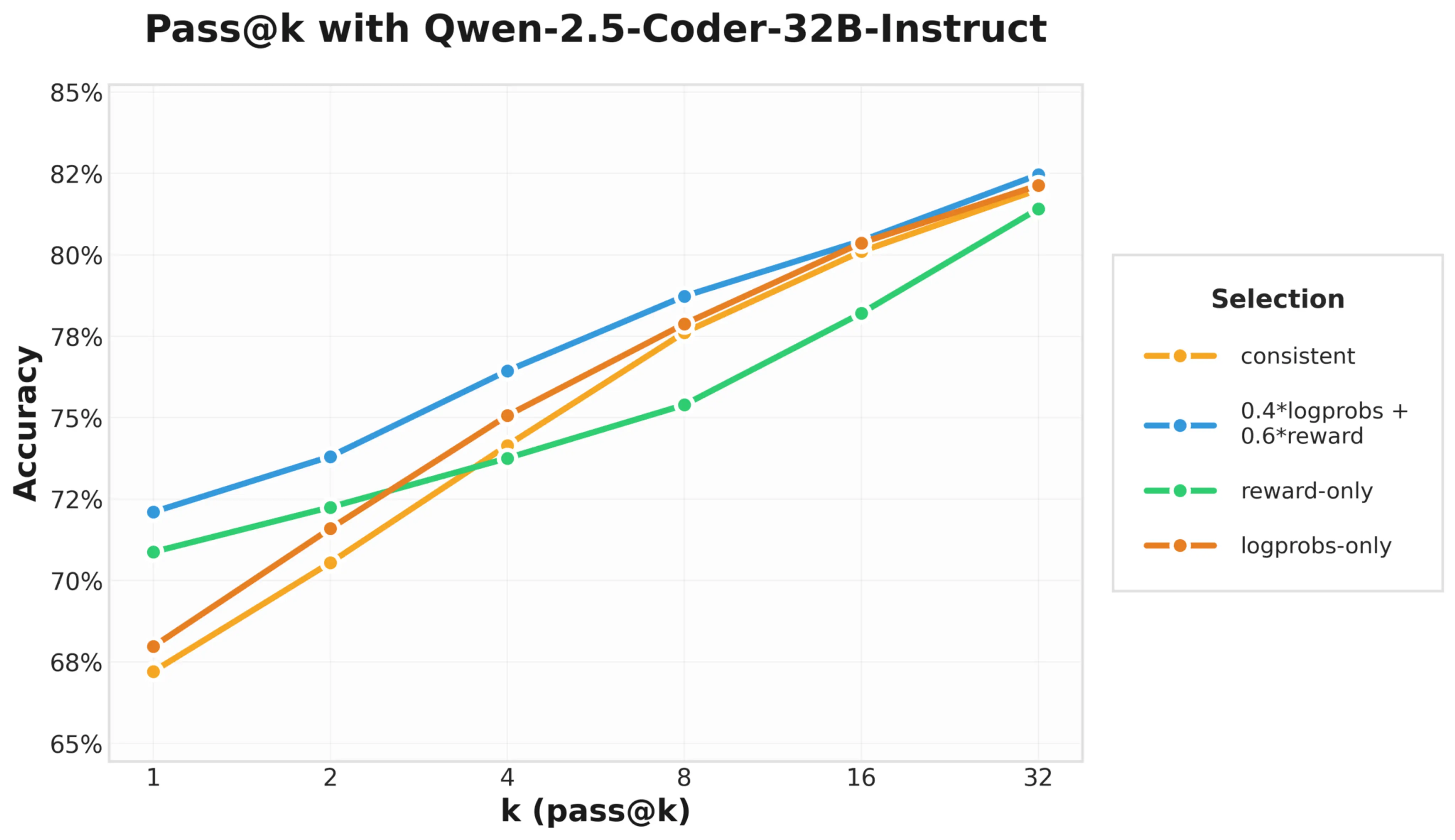

From the consistency result in the previous section, we see that a model’s “confidence” (here, measured by majority voting) in its output can correlate with the output being correct. Another more fine-grained way to measure a model’s confidence in its outputs’ tokens is via its probability assigned to each of its outputs’ tokens.

More specifically, if the output SQLs of a model corresponds to tokens , then the model’s conditional likelihood for the output is or more simply by taking the log of the probability (log-probs):

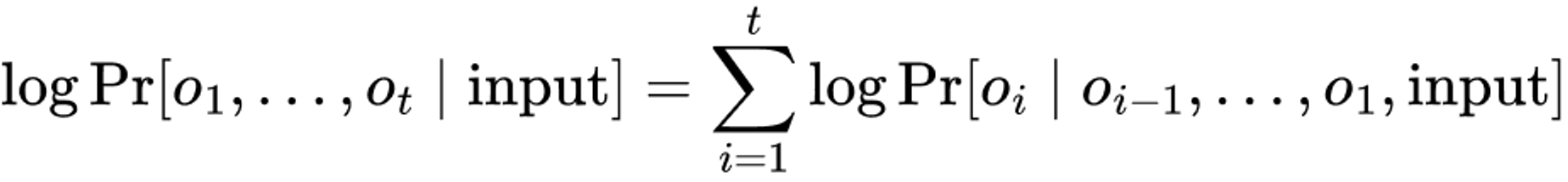

Since API models in general do not provide the tokens’ log-probs (nor logits), we perform a similar experiment as before with Qwen2.5-coder-32b, where we use the conditional likelihood score given by the cumulative log-probs:

Here, we see that indeed log-probs provide a slightly better signal than consistency alone at small k.

Combining Confidence with Reward for Ranking

A natural progression is to combine the log-probs and the reward score together for a better signal. Note that since the reward model is trained to output the probability P(Y|X, Q) of whether the output Y of a SQL candidate X given the query Q is correct or not, combining that with the probability of the SQL candidate P(X|Q) would give us a joint likelihood of the pair: .

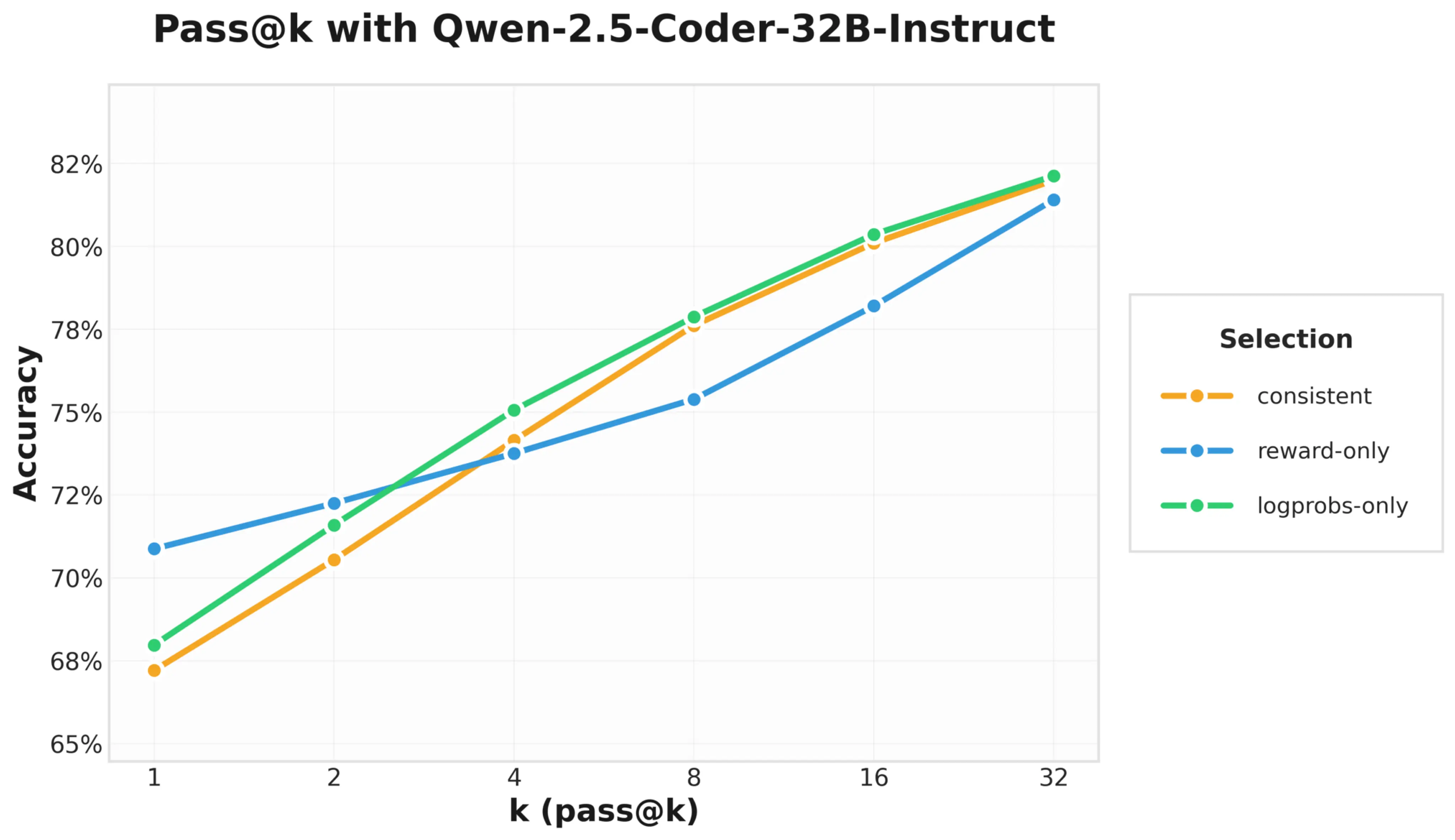

Hence, we can create a score as a weighted average between the log probs of the SQL candidate along with its reward. More specifically, parameterized by a weight α, we compute

Note that α=0 and α=1 correspond to log-probs only and reward only, respectively. Sweeping through , we find that 0.4 gives improved performance on a subset of the dev set.

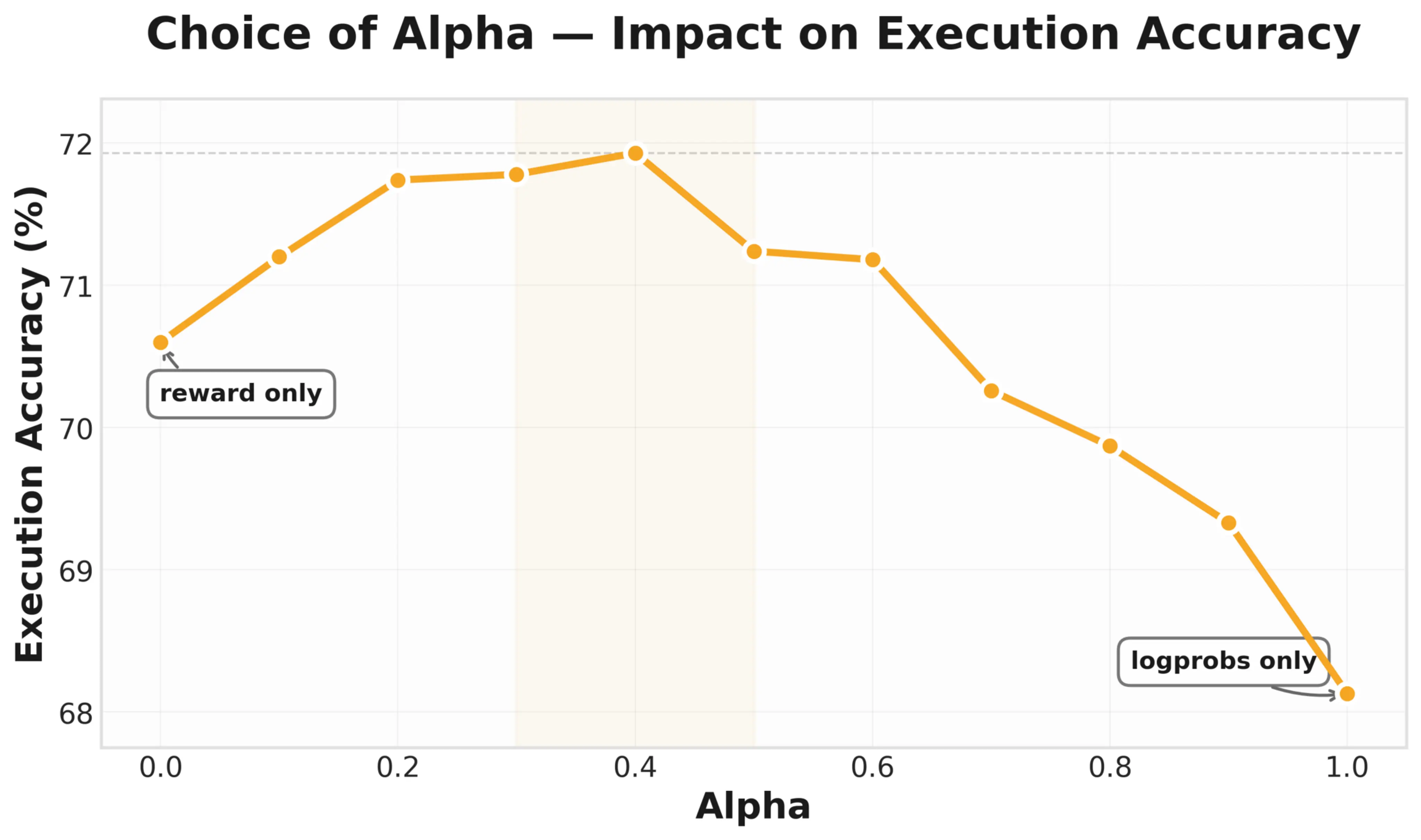

Performing the same experiment indeed shows a lift in the final score:

At this point, we have achieved a close to SoTA score on the BIRD-dev (around 73% execution accuracy) set with this recipe. This is the main recipe for the full Contextual-SQL submission that achieved SoTA on BIRD.

The Contextual-SQL Pipeline

With the key components outlined above, we summarize again the full pipeline here:

- Providing the context as mSchema along with 1 example sampled from the train set.

- Using a temperature of 1, sample n candidates.

- Repeat step 1 and 2 m times with different few-shot examples to generate a total of n*m candidates.

- Execute the candidates to keep only the valid ones (no SQL execution error).

- Finally, use the trained reward model along with the log-probs for selecting the candidate with the highest score.

The full open-source implementation can be found at https://github.com/ContextualAI/bird-sql.

Diving Deeper: Comparing Generator Models’ Quality

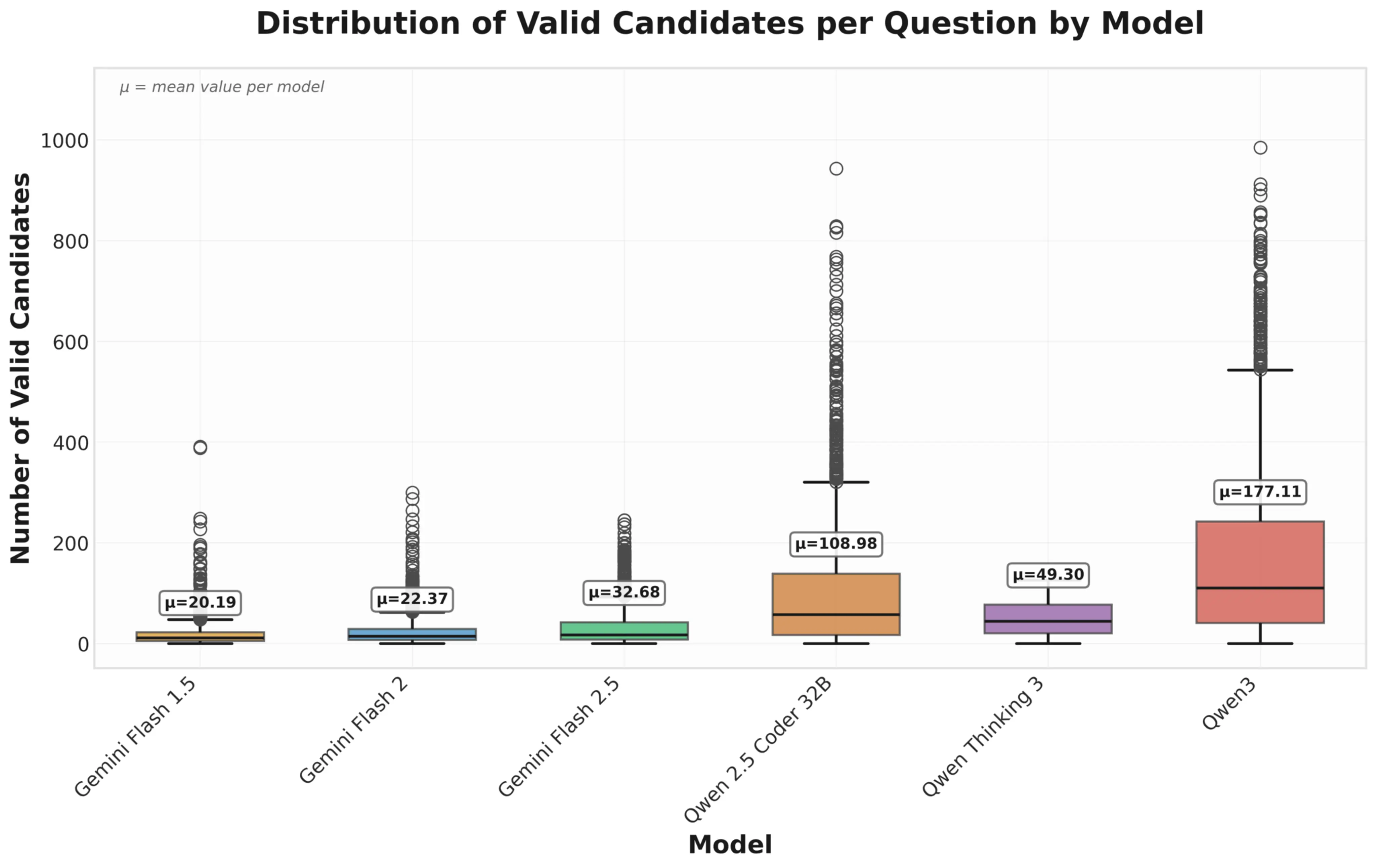

This section provides additional experimental results comparing different base models for generating SQL candidates with different temperature settings across different selection methods: Qwen-2.5/3, and Gemini-1.5/2/2.5-flash/pro. The pass@1 score is also shown in a legend box.

Here, we see that models of increasing capabilities (e.g. Gemini-2.5-pro) achieve better pass@1 performance. The thinking models (e.g. Qwen3-thinking) do not seem to outperform the non-thinking ones.

Furthermore, note that models that can generate diverse candidates like Qwen-2.5 observes better pass@k performance at higher k:

While higher pass@k might mean that there is more performance to extract during inference-time, there is no guarantee that we can fully recover that performance.

As newer benchmarks like SPIDER 2.0 demand longer context and more computational resources, more efficient approaches are needed beyond simple inference-time scaling.

Conclusion

Our journey with BIRD taught us valuable lessons about Text-to-SQL fundamentals – from the importance of context to the power of inference-time scaling. These insights enabled us to build a system that demonstrates local models can compete while preserving data privacy and enabling customization. The computational costs of candidate generation in our approach could be offset by the flexibility of local models, which enable both parallel processing to boost throughput and reinforcement learning methods to streamline the sampling process—optimizations that point toward potential efficiency gains.

Looking forward, our experiences with customers uncovered that enterprise deployments present an entirely different scale of challenge: intricate schemas, massive tables with thousands of columns, messy data, and complex multi-step queries that go far beyond single SQL generation. This is precisely what makes SPIDER2 a critical benchmark – with its 632 real-world workflow problems, databases exceeding 1,000 columns, and production cloud environments (BigQuery, Snowflake), it captures the complexity enterprises actually face. The performance gap speaks volumes: GPT-4o drops from 86.6% accuracy on Spider 1.0 to just 10.1% on SPIDER2, with even o1-preview reaching only 17.1%.

Applying insights from our BIRD research to tackle these enterprise-grade challenges is our next challenge. By open-sourcing our BIRD system today – which remains the best fully-local solution – we’re sharing the foundation that’s shaping our approach to the next generation of text-to-SQL systems.

Stay tuned as we push beyond single-query optimization toward solutions that can handle the multi-dialect SQL workflows and complex reasoning that production environments demand. The gap between current benchmark performance and real-world utility is substantial, but it’s precisely where the most impactful innovations await.

In the meantime, we hope our BIRD system and the insights shared in this post accelerate your own work in this space.

Related Articles

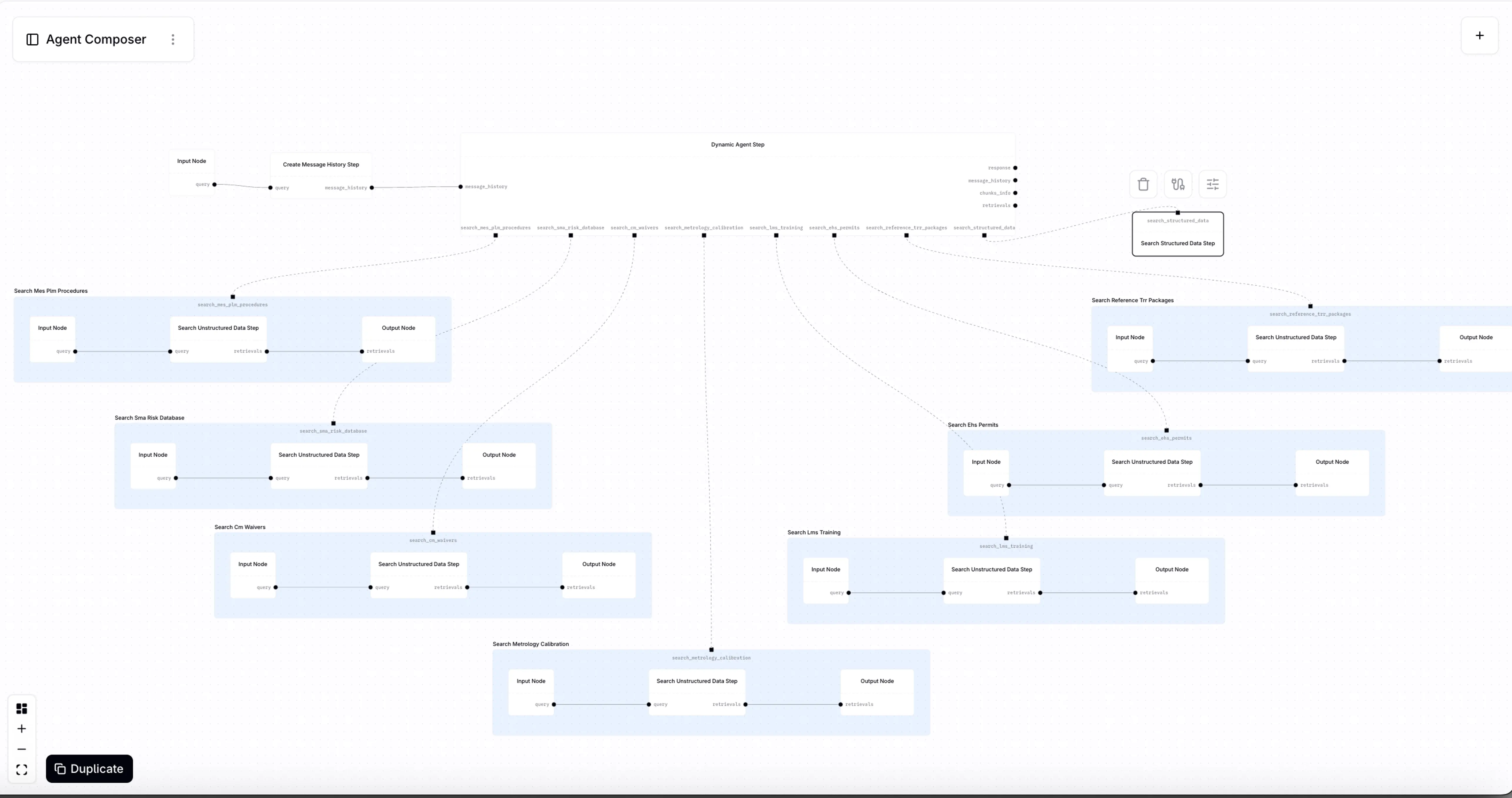

Introducing Agent Composer—AI for when it IS rocket science

Introducing Agent Composer: Expert AI for complex engineering. Cut hours of specialized, technical work down to minutes.

What is Context Engineering?